Physics

- 't Hooft, Gerard and Stefan Vandoren. Time in Powers of Ten. Singapore: World Scientific, 2014. ISBN 978-981-4489-81-2.

-

Phenomena in the universe take place over scales ranging from the unimaginably small to the breathtakingly large. The classic film, Powers of Ten, produced by Charles and Ray Eames, and the companion book explore the universe at length scales in powers of ten: from subatomic particles to the most distant visible galaxies. If we take the smallest meaningful distance to be the Planck length, around 10⁻³⁵ metres, and the diameter of the observable universe as around 10²⁷ metres, then the ratio of the largest to smallest distances which make sense to speak of is around 10⁶². Another way to express this is to answer the question, “How big is the universe in Planck lengths?” as “Mega, mega, yotta, yotta big!”

But length isn't the only way to express the scale of the universe. In the present book, the authors examine the time intervals at which phenomena occur or recur. Starting with one second, they take steps of powers of ten (10, 100, 1000, 10000, etc.), arriving eventually at the distant future of the universe, after all the stars have burned out and even black holes begin to disappear. Then, in the second part of the volume, they begin at the Planck time, 5×10⁻⁴⁴ seconds, the shortest unit of time about which we can speak with our present understanding of physics, and again progress by powers of ten until arriving back at an interval of one second.

Intervals of time can denote a variety of different phenomena, which are colour coded in the text. A period of time can mean an epoch in the history of the universe, measured from an event such as the Big Bang or the present; a distance defined by how far light travels in that interval; a recurring event, such as the orbital period of a planet or the frequency of light or sound; or the half-life of a randomly occurring event such as the decay of a subatomic particle or atomic nucleus.

Because the universe is still in its youth, and its future extends vastly beyond its present age, the range of time intervals discussed here is much larger than the range of length scales. From the Planck time of 5×10⁻⁴⁴ seconds to the lifetime of the kind of black hole produced by a supernova explosion, 10⁷⁴ seconds, the intervals discussed span 118 orders of magnitude. If we include the evaporation through Hawking radiation of the massive black holes at the centres of galaxies, the range expands to 143 orders of magnitude. Obviously, discussions of the distant future of the universe are highly speculative, since in those vast depths of time physical processes which we have never observed, due to their extreme rarity, may dominate the evolution of the universe.

Among the fascinating facts you'll discover is that many straightforward physical processes take place over an enormous range of time intervals. Consider radioactive decay. It is possible, using a particle accelerator, to assemble a nucleus of hydrogen-7, an isotope of hydrogen with a single proton and six neutrons. But if you make one, don't grow too fond of it, because it will decay into tritium and four neutrons with a half-life of 23×10⁻²⁴ seconds, an interval usually associated with events involving unstable subatomic particles. At the other extreme, a nucleus of tellurium-128 decays into xenon with a half-life of 7×10³¹ seconds (2.2×10²⁴ years), more than 160 trillion times the present age of the universe.
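The half-life arithmetic in the paragraph above is easy to check. The following Python sketch converts the quoted tellurium-128 half-life from seconds to years, compares it with the present age of the universe, and measures the span from hydrogen-7 to tellurium-128 in orders of magnitude. The Julian year and the 13.8-billion-year age of the universe are assumptions of this sketch, not figures taken from the book.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year (assumption), 31,557,600 s
AGE_OF_UNIVERSE_YEARS = 13.8e9          # assumed present age of the universe


def seconds_to_years(t_s: float) -> float:
    """Convert an interval in seconds to Julian years."""
    return t_s / SECONDS_PER_YEAR


def orders_of_magnitude(longer: float, shorter: float) -> float:
    """Base-10 logarithm of the ratio of two intervals."""
    return math.log10(longer / shorter)


te128_half_life_s = 7e31    # tellurium-128, as quoted in the review
h7_half_life_s = 23e-24     # hydrogen-7, as quoted in the review

te128_years = seconds_to_years(te128_half_life_s)
ratio_to_age = te128_years / AGE_OF_UNIVERSE_YEARS
span = orders_of_magnitude(te128_half_life_s, h7_half_life_s)

print(f"Te-128 half-life: {te128_years:.2e} years")
print(f"Multiple of the age of the universe: {ratio_to_age:.2e}")
print(f"H-7 to Te-128 span: {span:.1f} orders of magnitude")
```

Under these assumptions it gives about 2.2×10²⁴ years, roughly 1.6×10¹⁴ times the age of the universe, and a span of about 54 orders of magnitude between the two nuclei.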

While the very short and very long are the domain of physics, intermediate time scales are rich with events in geology, biology, and human history. These are explored, along with how we have come to know their chronology. You can open the book to almost any page and come across a fascinating story. Have you ever heard of the ocean quahog (Arctica islandica)? They're clams, and the oldest known has been determined to be 507 years old, born around 1499 and dredged up off the coast of Iceland in 2006. People eat them.

Or did you know that if you perform carbon-14 dating on grass growing next to a highway, the lab will report that it's tens of thousands of years old? Why? Because the grass has incorporated carbon from the CO2 produced by burning fossil fuels which are millions of years old and contain little or no carbon-14.
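The grass anomaly follows from how a radiocarbon age is computed: the measured carbon-14 content relative to a modern standard is converted to an age using the Libby half-life of 5568 years, so any carbon-14-free carbon mixed into a sample makes it look older. The sketch below assumes a simple two-component mix of modern and fossil carbon, which is an idealisation; the function names are hypothetical.

```python
import math

# Conventional radiocarbon ages use the Libby half-life of 5568 years,
# i.e. a mean life of 5568 / ln 2 (about 8033 years).
LIBBY_MEAN_LIFE_YEARS = 5568 / math.log(2)


def apparent_radiocarbon_age(fossil_fraction: float) -> float:
    """Apparent age of a sample whose carbon is a mix of modern carbon and
    carbon-14-free fossil carbon (simple two-component model, an assumption)."""
    if not 0.0 <= fossil_fraction < 1.0:
        raise ValueError("fossil_fraction must be in [0, 1)")
    fraction_modern = 1.0 - fossil_fraction   # carbon-14 remaining relative to modern
    return -LIBBY_MEAN_LIFE_YEARS * math.log(fraction_modern)


def fossil_fraction_for_age(age_years: float) -> float:
    """Inverse: the fossil-carbon share that yields a given apparent age."""
    return 1.0 - math.exp(-age_years / LIBBY_MEAN_LIFE_YEARS)


for f in (0.05, 0.5, 0.9):
    print(f"{f:.0%} fossil carbon -> apparent age {apparent_radiocarbon_age(f):,.0f} years")
```

Setting the fossil fraction to 0.5 yields exactly one Libby half-life, 5568 years; `fossil_fraction_for_age` answers the converse question of how large the fossil share must be to produce a given apparent age.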

This is a fascinating read, and one which uses the framework of time intervals to acquaint you with a wide variety of sciences, each inviting further exploration. The writing is accessible to the general reader, young adult and older. The individual entries are short and stand alone—if you don't understand something or aren't interested in a topic, just skip to the next. There are abundant colour illustrations and diagrams.

Author Gerard 't Hooft won the 1999 Nobel Prize in Physics for his work on the quantum mechanics of the electroweak interaction. The book was originally published in Dutch in the Netherlands in 2011. The English translation was done by 't Hooft's daughter, Saskia Eisberg-'t Hooft. The translation is fine, but there are a few turns of phrase which will seem odd to a native English reader. For example, matter in the early universe is said to “clot” under the influence of gravity; the common English term for this is “clump”. This is a translation, not a rewrite: there are a number of references to people, places, and historical events which will be familiar to Dutch readers but less so to those in the Anglosphere. In the Kindle edition, notes, cross-references, the table of contents, and the index are all properly linked, and the illustrations are reproduced well.

- Adams, Fred and Greg Laughlin. The Five Ages of the Universe. New York: The Free Press, 1999. ISBN 0-684-85422-8.

- Barrow, John D. The Constants of Nature. New York: Pantheon Books, 2002. ISBN 0-375-42221-8.

- The main body copy of this book is set in a typeface in which the digit “1” is almost indistinguishable from the capital letter “I”. Almost. Look closely at the top serif on the “1” and you'll note that it rises toward the right while the “I” has a horizontal top serif. This struck my eye as ugly and antiquated, but I figured I'd quickly get used to it. Nope: it looked just as awful on the last page as in the first chapter. Oddly, the numbers on pages 73 and 74 use a proper digit “1”, as do numbers within block quotations.

- Barrow, John D. The Infinite Book. New York: Vintage Books, 2005. ISBN 1-4000-3224-5.

- Don't panic: despite the title, this book is only 330 pages! Having written an entire book about nothing (The Book of Nothing, May 2001), I suppose it's only natural the author would take on the other end of the scale. Unlike Rudy Rucker's Infinity and the Mind, long the standard popular work on the topic, Barrow spends only about half of the book on the mathematics of infinity. Philosophical, metaphysical, and theological views of the infinite in a variety of cultures are discussed, as well as the history of the infinite in mathematics, including a biographical portrait of the ultimately tragic life of Georg Cantor. The physics of an infinite universe (and whether we can ever determine if our own universe is infinite), the paradoxes of an infinite number of identical copies of ourselves necessarily existing in an infinite universe, the possibility of machines which perform an infinite number of tasks in finite time, whether we're living in a simulation (and how we might discover we are), and the practical and moral consequences of immortality and time travel are also explored. Mathematicians and scientists have traditionally been very wary of the infinite (indeed, the appearance of infinities is considered an indication of the limitations of theories in modern physics), and Barrow presents any number of paradoxes which illustrate that, as he titles chapter four, “infinity is not a big number”: it is fundamentally different and requires a distinct kind of intuition if nonsensical results are to be avoided. One of the most delightful examples is Zhihong Xia's five-body configuration of point masses which, under Newtonian gravitation, expands to infinite size in finite time. (Don't worry: the finite speed of light, the formation of a horizon if two bodies approach too closely, and the emission of gravitational radiation keep this from working in the relativistic universe we inhabit. As the author says [p. 236], “Black holes might seem bad but, like growing old, they are really not so bad when you consider the alternatives.”) This is an enjoyable and enlightening read, but I found it didn't come up to the standard set by The Book of Nothing and The Constants of Nature (June 2003). Like the latter book, this one is set in a hideously inappropriate font for a work on mathematics: the digit “1” is almost indistinguishable from the letter “I”. If you look very closely at the top serif on the “1” you'll note that it rises toward the right while the “I” has a horizontal top serif. But why go to the trouble of distinguishing the two characters and then making the two glyphs so nearly identical you can't tell them apart without a magnifying glass? In addition, the horizontal bar of the plus sign doesn't line up with the minus sign, which makes equations look awful. This isn't the author's only work on infinity; he's also written a stage play, Infinities, which was performed in Milan in 2002 and 2003.

- Barrow, John D., Paul C.W. Davies, and Charles L. Harper, Jr., eds. Science and Ultimate Reality. Cambridge: Cambridge University Press, 2004. ISBN 0-521-83113-X.

- These are the proceedings of the festschrift at Princeton in March 2002 in honour of John Archibald Wheeler's 90th year within our light-cone. This volume brings together the all-stars of speculative physics, addressing what Wheeler describes as the “big questions.” You will spend a lot of time working your way through this almost 700-page tome (which is why entries in this reading list will be uncharacteristically sparse this month), but it will be well worth the effort. Here we have Freeman Dyson posing thought-experiments which purport to show limits to the applicability of quantum theory and the uncertainty principle, then we have Max Tegmark on parallel universes, arguing that the most conservative model of cosmology has infinite copies of yourself within the multiverse, each choosing either to read on here or click another link. Hideo Mabuchi's chapter begins with an introductory section which is lyrical prose poetry up to the standard set by Wheeler, and if Shou-Cheng Zhang's final chapter doesn't make you re-think where the bottom of reality really lies, you either didn't get it or have been spending way too much time reading preprints on arXiv. I don't mean to disparage any of the other contributors by not mentioning them: every chapter of this book is worth reading, then re-reading carefully. These are the collected works of the 21st century equivalent of the savants who attended the Solvay Congresses in the early 20th century. Take your time, reread difficult material as necessary, and look up the references. You'll close this book in awe of what we've learned in the last 20 years, and in wonder at what we'll discover and accomplish in the rest of this century and beyond.

- Behe, Michael J., William A. Dembski, and Stephen C. Meyer. Science and Evidence for Design in the Universe. San Francisco: Ignatius Press, 2000. ISBN 0-89870-809-5.

- Bell, John S. Speakable and Unspeakable in Quantum Mechanics. Cambridge: Cambridge University Press, [1987] 1993. ISBN 0-521-52338-9.

- This volume collects most of Bell's papers on the foundations and interpretation of quantum mechanics including, of course, his discovery of “Bell's inequality”, which showed that no local hidden variable theory can reproduce the statistical results of quantum mechanics, setting the stage for the experimental confirmation by Aspect and others of the fundamental non-locality of quantum physics. Bell's interest in the pilot wave theories of de Broglie and Bohm is reflected in a number of papers, and Bell's exposition of these theories is clearer and more concise than anything I've read by Bohm or Hiley. He goes on to show the strong similarities between the pilot wave approach and the “many-worlds interpretation” of Everett and DeWitt. An extra added treat is chapter 9, where Bell derives special relativity entirely from Maxwell's equations and the Bohr atom, along the lines of FitzGerald, Larmor, Lorentz, and Poincaré, arriving at the principle of relativity (which Einstein took as a hypothesis) from the previously known laws of physics.
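Bell's result can be illustrated numerically. The sketch below uses the later CHSH form of the inequality (due to Clauser, Horne, Shimony, and Holt) rather than Bell's original 1964 version. It enumerates every deterministic local assignment of ±1 outcomes to two measurement settings on each side and finds that the CHSH combination never exceeds 2 in magnitude; it then evaluates the quantum-mechanical singlet-state correlation −cos(a − b) at the standard optimal analyser angles, which gives 2√2. Because any local hidden variable model amounts to a probabilistic mixture of such deterministic assignments, the bound of 2 applies to all of them. The function names are choices of this sketch.

```python
import itertools
import math


def singlet_correlation(a: float, b: float) -> float:
    """Quantum prediction E(a, b) = -cos(a - b) for spin-singlet pairs, angles in radians."""
    return -math.cos(a - b)


def chsh(E, a: float, a2: float, b: float, b2: float) -> float:
    """CHSH combination S = E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)


# Local deterministic strategies: Alice fixes outcomes A0, A1 (for settings a, a2) and
# Bob fixes B0, B1 (for settings b, b2) in advance, each +1 or -1; E(x, y) is then A*B.
local_max = max(
    abs(A0 * B0 - A0 * B1 + A1 * B0 + A1 * B1)
    for A0, A1, B0, B1 in itertools.product((1, -1), repeat=4)
)

# Analyser angles that maximise the quantum value for this sign convention.
a, a2, b, b2 = 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4
quantum_S = abs(chsh(singlet_correlation, a, a2, b, b2))

print("largest |S| for any local deterministic strategy:", local_max)
print("quantum |S|:", quantum_S, "  2*sqrt(2) =", 2 * math.sqrt(2))
```

The gap between 2 and 2√2 ≈ 2.83 is what experiments such as Aspect's set out to measure.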

- Benford, Gregory ed. Far Futures. New York: Tor, 1995. ISBN 0-312-86379-9.

- Bernstein, Jeremy. Plutonium. Washington: Joseph Henry Press, 2007. ISBN 0-309-10296-0.

- When the Manhattan Project undertook to produce a nuclear bomb using plutonium-239, the world's inventory of the isotope was on the order of a microgram, all produced by bombarding uranium with neutrons produced in cyclotrons. It wasn't until August of 1943 that enough had been produced to be visible under a microscope. When, in that month, the go-ahead was given to build the massive production reactors and separation plants at the Hanford site on the Columbia River, virtually nothing was known of the physical properties, chemistry, and metallurgy of the substance they were undertaking to produce. In fact, it was only in 1944 that it was realised that the elements starting with thorium formed a second group of “rare earth” elements: the periodic table before World War II had uranium in the column below tungsten and predicted that the chemistry of element 94 would resemble that of osmium. When the large-scale industrial production of plutonium was undertaken, neither the difficulty of separating the element from the natural uranium matrix in which it was produced nor the contamination with Pu-240 which would necessitate an implosion design for the plutonium bomb were known. Notwithstanding, by the end of 1947 a total of 500 kilograms of the stuff had been produced, and today there are almost 2000 metric tons of it, counting both military inventories and that produced in civil power reactors, which crank out about 70 more metric tons a year. These are among the fascinating details gleaned and presented in this history and portrait of the most notorious of artificial elements by physicist and writer Jeremy Bernstein. He avoids getting embroiled in the building of the bomb, which has been well-told by others, and concentrates on how scientists around the world stumbled onto nuclear fission and transuranic elements, puzzled out what they were seeing, and figured out the bizarre properties of what they had made. 
Bizarre is not too strong a word for the chemistry and metallurgy of plutonium, which remains an active area of research today with much still unknown. When you get that far down on the periodic table, both quantum mechanics and special relativity get into the act (as they start to do even with gold), and you end up with six allotropic phases of the metal (in two of which volume decreases with increasing temperature), a melting point of just 640° C and an anomalous atomic radius which indicates its 5f electrons are neither localised nor itinerant, but somewhere in between. As the story unfolds, we meet some fascinating characters, including Fritz Houtermans, whose biography is such that, as the author notes (p. 86), “if one put it in a novel, no one would find it plausible.” We also meet stalwarts of the elite 26-member UPPU Club: wartime workers at Los Alamos whose exposure to plutonium was sufficient that it continues to be detectable in their urine. (An epidemiological study of these people which continues to this day has found no elevated rates of mortality, which is not to say that plutonium is not a hideously hazardous substance.) The text is thoroughly documented in the end notes, and there is an excellent index; the entire book is just 194 pages. I have two quibbles. On p. 110, the author states of the Little Boy gun-assembly uranium bomb dropped on Hiroshima, “This is the only weapon of this design that was ever detonated.” Well, I suppose you could argue that it was the only such weapon of that precise design detonated, but the implication is that it was the first and last gun-type bomb to be detonated, and this is not the case. The U.S. W9 and W33 weapons, among others, were gun-assembly uranium bombs, which between them were tested three times at the Nevada Test Site. The price for plutonium-239 quoted on p. 155, US$5.24 per milligram, seems to imply that the plutonium for a critical mass of about 6 kg costs about 31 million dollars. 
But this is because the price quoted is for 99–99.99% isotopically pure Pu-239, which has been electromagnetically separated from the isotopic mix you get from the production reactor. Weapons-grade plutonium can have up to 7% Pu-240 contamination, which doesn't require the fantastically expensive isotope separation phase, just chemical extraction of plutonium from reactor fuel. In fact, you can build a bomb from so-called “reactor-grade” plutonium—the U.S. tested one in 1962.
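The figure in the second quibble is simple unit arithmetic. This short sketch reproduces it, taking the quoted US$5.24 per milligram and the approximate 6 kg critical mass used in the review as its only inputs.

```python
PRICE_USD_PER_MG = 5.24      # quoted price for isotopically pure Pu-239 (p. 155)
CRITICAL_MASS_KG = 6.0       # approximate critical mass used in the review
MG_PER_KG = 1_000_000        # 1 kg = 10^6 mg

cost_usd = PRICE_USD_PER_MG * CRITICAL_MASS_KG * MG_PER_KG
print(f"Implied cost of one critical mass: ${cost_usd / 1e6:.1f} million")
```

It gives about $31.4 million, a sum that reflects the price of electromagnetically separated, isotopically pure material rather than that of weapons-grade plutonium.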

- Bethell, Tom. Questioning Einstein. Pueblo West, CO: Vales Lake Publishing, 2009. ISBN 978-0-9714845-9-7.

- Call it my guilty little secret. Every now and then, I enjoy nothing more than picking up a work of crackpot science, reading it with the irony lobe engaged, figuring out precisely where the author went off the rails, and trying to imagine how one might explain to them the blunders which led to the poppycock they expended so much effort getting into print. In the field of physics, for some reason Einstein's theory of special relativity attracts a disproportionate number of such authors, all bent on showing that Einstein was wrong or, in the case of the present work's subtitle, asking “Is Relativity Necessary?” With a little reflexion, this shouldn't be a surprise: alone among major theories of twentieth century physics, special relativity is mathematically accessible to anybody acquainted with high school algebra, and yet it makes predictions for the behaviour of objects at high velocity which so contradict the intuition we build from everyday experience at far lower speeds that they appear, at first glance, to be paradoxes. Theories more dubious and less supported by experiment may be shielded from crackpots simply by the forbidding mathematics one must master in order to understand and talk about them persuasively. This is an atypical exemplar of the genre. While most attacks on special relativity are written by delusional mad scientists, the author of the present work, Tom Bethell, is a respected journalist whose work has been praised by, among others, Tom Wolfe and George Gilder. The theory presented here is not his own, but one developed by Petr Beckmann, whose life's work, particularly in advocating civil nuclear power, won him the respect of Edward Teller (who did not, however, endorse his alternative to relativity). As works of crackpot science go, this is one of the best I've read. 
It is well written, almost free of typographical and factual errors, clearly presents its arguments in terms a layman can grasp, almost entirely avoids mathematical equations, and is thoroughly documented with citations of original sources, many of which may be unfamiliar to those who have learnt special relativity from modern textbooks. Its arguments against special relativity are up to date, addressing objections based on the Global Positioning System, the Brillet-Hall experiment, and the Hafele-Keating “travelling clock” experiments as well as the classic tests. And the author eschews the ad hominem attacks on Einstein which are so common in the literature of opponents of relativity. Beckmann's theory posits that the luminiferous æther (the medium in which light waves propagate), which was deemed “superfluous” in Einstein's 1905 paper, in fact exists, and is simply the locally dominant gravitational field. In other words, the medium in which light waves wave is the gravity which makes things which aren't light heavy. Got it? Light waves in any experiment performed on the Earth or in its vicinity will propagate in the æther of its gravitational field (with only minor contributions from those of other bodies such as the Moon and Sun), and hence attempts such as the Michelson-Morley experiment to detect the “æther drift” due to the Earth's orbital motion around the Sun will yield a null result, since the æther is effectively “dragged” or “entrained” along with the Earth. But since the gravitational field is generated by the Earth's mass, and hence doesn't rotate with it (Huh? What about the Lense-Thirring effect, which is never mentioned here?), it should be possible to detect the much smaller æther drift effect as the measurement apparatus rotates with the Earth, and it is claimed that several experiments have made such a detection. 
It's traditional that popular works on special relativity couch their examples in terms of observers on trains, so let me say that it's here that we feel the sickening non-inertial-frame lurch as the train departs the track and enters a new inertial frame headed for the bottom of the canyon. Immediately, we're launched into a discussion of the Sagnac effect and its various manifestations, ranging from the original experiment through practical applications in laser ring gyroscopes to round-the-world measurements bouncing signals off multiple satellites. For some reason the Sagnac effect seems to be a powerful attractor into which special relativity crackpottery is sucked. Why it is so difficult for otherwise intelligent people to comprehend entirely escapes me. May I explain it to you? This would be easier with a diagram, but just to show off and emphasise how simple it is, I'll do it with words. Imagine you have a turntable, on which are mounted four mirrors which reflect light around the turntable in a square: the light just goes around and around. If the turntable is stationary and you send a pulse of light in one direction around the loop and then send another in the opposite direction, it will take precisely the same amount of time for them to complete one circuit of the mirrors. (In practice, one uses continuous beams of monochromatic light and combines them in an interferometer, but the effect is the same as measuring the propagation time; it's just easier to do it that way.) Now, let's assume you start the turntable rotating clockwise. Once again you send pulses of light around the loop in both directions; this time we'll call the one which goes in the same direction as the turntable's rotation the clockwise pulse and the other the counterclockwise pulse. Now when we measure how long it took for the clockwise pulse to make it one time around the loop we find that it took longer than for the counterclockwise pulse. OMG!!! 
Have we disproved Einstein's postulate of the constancy of the speed of light (as is argued in this book at interminable length)? Well, of course not, as a moment's reflexion will reveal. The clockwise pulse took longer to make it around the loop because it had farther to travel to arrive there: as it was bouncing from each mirror to the next, the rotation of the turntable was moving the next mirror farther away, and so each leg it had to travel was longer. Conversely, as the counterclockwise pulse was in flight, its next mirror was approaching it, and hence by the time it made it around the loop it had travelled less far, and consequently arrived sooner. That's all there is to it, and precision measurements of the Sagnac effect confirm that this analysis is completely consistent with special relativity. The only possible source of confusion is if you make the self-evident blunder of analysing the system in the rotating reference frame of the turntable. Such a reference frame is trivially non-inertial, so special relativity does not apply. You can determine this simply by tossing a ball from one side of the turntable to another, with no need for all the fancy mirrors, light pulses, or the rest. Other claims of Beckmann's theory are explored, all either dubious or trivially falsified. Bethell says there is no evidence for the length contraction predicted by special relativity. In fact, analysis of heavy ion collisions confirms that each nucleus approaching the scene of the accident “sees” the other as a “pancake” due to relativistic length contraction. It is claimed that while physical processes on a particle moving rapidly through a gravitational field slow down, an observer co-moving with that particle would not see a comparable slow-down of clocks at rest with respect to that gravitational field. But the corrections applied to the atomic clocks in GPS satellites incorporate this effect, and would produce incorrect results if it did not occur. 
I could go on and on. I'm sure there is a simple example from gravitational lensing or propagation of electromagnetic radiation from gamma ray bursts which would falsify the supposed classical explanation of the gravitational deflection of light as a refractive effect based upon the strength of the gravitational field, but why bother when so many things much easier to dispose of are hanging lower on the tree? Should you buy this book? No, unless, like me, you enjoy a rare example of crackpot science which is well done. This is one of those, and if you're well acquainted with special relativity (if not, take a trip on our C-ship!) you may enjoy finding its flaws and identifying experiments which falsify its arguments.
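The two relativistic effects discussed in this review can both be checked with a few lines of arithmetic. The first part of the Python sketch below follows the lab-frame argument given for the Sagnac effect, idealising the four-mirror square as a circular loop of radius R rotating at angular velocity Ω (an assumption; the first-order result 4AΩ/c² depends only on the enclosed area A). The co-rotating pulse closes on a receding mirror at c − ΩR and the counter-rotating pulse on an approaching one at c + ΩR, so no change in the speed of light is needed to explain the difference. The second part estimates the standard first-order clock-rate offsets for a GPS satellite, assuming a circular orbit and a ground clock at rest at Earth's mean radius, and ignoring Earth's rotation and orbital eccentricity. The function names and the rounded constants are choices of this sketch.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def sagnac_times(radius_m: float, omega_rad_s: float) -> tuple[float, float]:
    """Lab-frame times for one circuit of an idealised circular mirror loop.

    Returns (t_co, t_counter) for light travelling with and against the rotation.
    """
    circumference = 2 * math.pi * radius_m
    rim_speed = omega_rad_s * radius_m
    # With the rotation: the next mirror keeps receding, so the pulse gains on it at c - rim_speed.
    t_co = circumference / (C - rim_speed)
    # Against the rotation: the next mirror approaches, so the pulse closes at c + rim_speed.
    t_counter = circumference / (C + rim_speed)
    return t_co, t_counter


def sagnac_first_order(area_m2: float, omega_rad_s: float) -> float:
    """First-order Sagnac delay, delta_t ~= 4 * A * Omega / c**2, for a loop enclosing area A."""
    return 4 * area_m2 * omega_rad_s / C**2


GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6           # mean Earth radius, m (ground clock assumed at rest here)
R_GPS = 26_561.75e3         # GPS orbital radius, m (circular orbit assumed)
SECONDS_PER_DAY = 86_400


def gps_rate_offsets_us_per_day() -> tuple[float, float]:
    """First-order satellite clock rate offsets relative to the ground, in us/day.

    Returns (velocity_term, gravity_term); negative means the satellite clock runs slow.
    """
    v_squared = GM_EARTH / R_GPS                                  # circular orbital speed squared
    velocity_term = -v_squared / (2 * C**2)                       # time dilation from orbital speed
    gravity_term = GM_EARTH / C**2 * (1 / R_EARTH - 1 / R_GPS)    # higher potential aloft: runs fast
    to_us_per_day = SECONDS_PER_DAY * 1e6
    return velocity_term * to_us_per_day, gravity_term * to_us_per_day


# A 1 m radius loop turning once per second.
t_co, t_counter = sagnac_times(radius_m=1.0, omega_rad_s=2 * math.pi)
print(f"co-rotating pulse is later by {t_co - t_counter:.3e} s")
print(f"first-order estimate:         {sagnac_first_order(math.pi * 1.0**2, 2 * math.pi):.3e} s")

sr, gr = gps_rate_offsets_us_per_day()
print(f"velocity {sr:+.1f} us/day, gravity {gr:+.1f} us/day, net {sr + gr:+.1f} us/day")
```

With these assumptions the GPS function gives roughly −7.2 µs per day from orbital velocity and +45.7 µs per day from the weaker gravitational potential, a net gain of about 38.5 µs per day, in line with the commonly quoted figure of about 38 µs per day for which satellite clocks must be corrected.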

- Bjornson, Adrian. A Universe that We Can Believe. Woburn, Massachusetts: Addison Press, 2000. ISBN 0-9703231-0-7.

- Bockris, John O'M. The New Paradigm. College Station, TX: D&M Enterprises, 2005. ISBN 0-9767444-0-6.

-

As the nineteenth century gave way to the twentieth, the triumphs of classical science were everywhere apparent: Newton's theories of mechanics and gravitation, Maxwell's electrodynamics, the atomic theory of chemistry, Darwin's evolution, Mendel's genetics, and the prospect of formalising all of mathematics from a small set of logical axioms. Certainly, there were a few little details awaiting explanation: the curious failure to detect ether drift in the Michelson-Morley experiment, the pesky anomalous precession of the perihelion of the planet Mercury, the seeming contradiction between the equipartition of energy and the actual spectrum of black body radiation, the mysterious patterns in the spectral lines of elements, and the source of the Sun's energy, but these seemed matters the next generation of scientists could resolve by building on the firm foundation laid by the last. Few would have imagined that these curiosities would spark a thirty year revolution in physics which would show the former foundations of science to be valid only in the limits of slow velocities, weak fields, and macroscopic objects.

At the start of the twenty-first century, in the very centennial of Einstein's annus mirabilis, it is only natural to enquire how firm are the foundations of present-day science, and survey the “little details and anomalies” which might point toward scientific revolutions in this century. That is the ambitious goal of this book, whose author's long career in physical chemistry began in 1945 with a Ph.D. from Imperial College, London, and spanned more than forty years as a full professor at the University of Pennsylvania, Flinders University in Australia, and Texas A&M University, where he was Distinguished Professor of Energy and Environmental Chemistry, with more than 700 papers and twenty books to his credit. And it is at this goal that Professor Bockris utterly, unconditionally, and irredeemably fails.

By the evidence of the present volume, the author, notwithstanding his distinguished credentials and long career, is a complete idiot.

That's not to say you won't learn some things by reading this book. For example, what do physicists Hendrik Lorentz, Werner Heisenberg, Hannes Alfvén, Albert A. Michelson, and Lord Rayleigh; chemist Amedeo Avogadro; astronomers Chandra Wickramasinghe, Benik Markarian, and Martin Rees; the Weyerhaeuser Company; the Doberman Pinscher dog breed; Renaissance artist Michelangelo; Cepheid variable stars; Nazi propagandist Joseph Goebbels; the Menninger Foundation and the Cavendish Laboratory; evolutionary biologist Richard Dawkins; religious figures Saint Ignatius of Antioch, Bishop Berkeley, and Teilhard de Chardin; parapsychologists York Dobyns and Brenda Dunne; anomalist William R. Corliss; and Centreville, Maryland, Manila in the Philippines, and the Galapagos Islands all have in common?

The “Shaking Pillars of the Paradigm” about which the author expresses sentiments ranging from doubt to disdain in chapter 3 include mathematics (where he considers irrational roots, non-commutative multiplication of quaternions, and the theory of limits among flaws indicative of the “break down” of mathematical foundations [p. 71]), Darwinian evolution, special relativity, what he refers to as “The So-Called General Theory of Relativity” (of whose content he has only the vaguest notion, yet which he is certain is dead wrong), quantum theory (see p. 120 for a totally bungled explanation of Schrödinger's cat in which he seems to think the result depends upon a decision made by the cat), the big bang (which he deems “preposterus” on p. 138) and the Doppler interpretation of redshifts, and naturalistic theories of the origin of life. Chapter 4 begins with the claim that “There is no physical model which can tell us why [electrostatic] attraction and repulsion occur” (p. 163).

And what are those stubborn facts in which the author does believe, or at least argues merit the attention of science, pointing the way to a new foundation for science in this century? Well, that would be: UFOs and alien landings; Kirlian photography; homeopathy and Jacques Benveniste's “imprinting of water”; crop circles; Qi Gong masters remotely changing the half-life of radioactive substances; the Maharishi Effect and “Vedic Physics”; “cold fusion” and the transmutation of base metals into gold (on both of which the author published while at Texas A&M); telepathy, clairvoyance, and precognition; apparitions, poltergeists, haunting, demonic possession, channelling, and appearances of the Blessed Virgin Mary; out-of-body and near-death experiences; survival after death, communication through mediums including physical manifestations, and reincarnation; and psychokinesis, faith and “anomalous” healing (including the “psychic surgeons” of the Philippines), and astrology. The only criterion for the author's endorsement of a phenomenon appears to be its rejection by mainstream science.

Now, many works of crank science can be quite funny, and entirely worth reading for their amusement value. Sadly, this book is so poorly written it cannot be enjoyed even on that level. In the introduction to this reading list I mention that I don't include books which I didn't finish, but that since I've been keeping the list I've never abandoned a book partway through. Well, my record remains intact, but this one sorely tempted me. The style, if you can call it that, is such that one finds it difficult to believe English is the author's mother tongue, let alone that his doctorate is from a British university at a time when language skills were valued. The prose is often almost physically painful to read. Here is an example, from footnote 37 on page 117, though you can find similar examples on almost any page; I've chosen this one because it is, in addition, almost completely irrelevant to the text it annotates.

Here, it is relevant to describe a corridor meeting with a mature colleague - keen on Quantum Mechanical calculations, - who had not the friends to give him good grades in his grant applications and thus could not employ students to work with him. I commiserated on his situation, - a professor in a science department without grant money. How can you publish I blurted out, rather tactlessly. “Ah, but I have Lili” he said (I've changed his wife's name). I knew Lili, a pleasant European woman interested in obscure religions. She had a high school education but no university training. “But” … I began to expostulate. “It's ok, ok”, said my colleague. “Well, we buy the programs to calculate bond strengths, put it in the computer and I tell Lili the quantities and she writes down the answer the computer gives. Then, we write a paper.” The program referred to is one which solves the Schrödinger equation and provides energy values, e.g., for bond strength in chemical compounds.

Now sit back, close your eyes, and imagine five hundred pages of this; in spelling, grammar, accuracy, logic, and command of the subject matter it reads like a textbook-length Slashdot post. Several recurrent characteristics are manifest in this excerpt. The author repeatedly, though not consistently, capitalises Important Words within Sentences; he uses hyphens where em-dashes are intended, and seems to have invented his own punctuation sign: a comma followed by a hyphen, which is used interchangeably with commas and em-dashes. The punctuation gives the impression that somebody glanced at the manuscript and told the author, “There aren't enough commas in it”, whereupon he went through and added three or four thousand in completely random locations, however inane. There is an inordinate fondness for “e.g.”, “i.e.”, and “cf.”, and they are used in ways which make one suspect the author isn't completely clear on their meaning or the distinctions among them. And regarding the footnote quoted above, did I mention that the author's wife is named “Lily”, and hails from Austria? Further evidence of the attention to detail and respect for the reader can be found in chapter 3, where most of the source citations in the last thirty pages are incorrect, and in the blank cross-references scattered throughout the text. It is obvious the book has not been fact checked, nor even proofread; it has never even been spell checked—common words are misspelled all over. Bockris never manages the Slashdot hallmark of misspelling “the”, but on page 475 he misspells “to” as “ot”. Throughout you get the sense that what you're reading is not so much a considered scientific exposition and argument as the raw unedited output of a keystroke-capturing program running on the author's computer. Some readers may take me to task for being too harsh in these remarks, noting that the book was self-published by the author at age 82. (How do I know it was self-published? 
Because my copy came with the order from Amazon to the publisher to ship it to their warehouse folded inside, and the publisher's address in this document is directly linked to the author.) Well, call me unkind, but permit me to observe that readers don't get a quality discount based on the author's age from the price of US$34.95, which is on the very high end for a five hundred page paperback, nor is there a disclaimer on the front or back cover that the author might not be firing on all cylinders. Certainly, an eminent retired professor ought to be able to call on former colleagues and/or students to review a manuscript which is certain to become an important part of his intellectual legacy, especially as it attempts to expound a new paradigm for science. Even the most cursory editing to remove needless and tedious repetition could knock 100 pages off this book (and eliminating the misinformation and nonsense could probably slim it down to about ten). The vast majority of citations are to secondary sources, many popular science or new age books. Apart from these drawbacks, Bockris, like many cranks, seems compelled to personally attack Einstein, claiming his work was derivative, hinting at plagiarism, arguing that its significance is less than its reputation implies, and relating an unsourced story claiming Einstein was a poor husband and father (and even if he were, what does that have to do with the correctness and importance of his scientific contributions?). In chapter 2, he rants upon environmental and economic issues, calls for a universal dole (p. 34) for those who do not work (while on p. 436 he decries the effects of just such a dole on Australian youth), calls (p. 57) for censorship of music, compulsory population limitation, and government mandated instruction in philosophy and religion along with promotion of religious practice. Unlike many radical environmentalists of the fascist persuasion, he candidly observes (p. 
58) that some of these measures “could not achieved under the present conditions of democracy”. So, while repeatedly inveighing against the corruption of government-funded science, he advocates what amounts to totalitarian government—by scientists.

- Brown, Brandon R. Planck. Oxford: Oxford University Press, 2015. ISBN 978-0-19-021947-5.

-

Theoretical physics is usually a young person's game. Many of the greatest breakthroughs have been made by researchers in their twenties, just having mastered existing theories while remaining intellectually flexible and open to new ideas. Max Planck, born in 1858, was an exception to this rule. He spent most of his twenties living with his parents and despairing of finding a paid position in academia. He was thirty-six when he took on the project of understanding heat radiation, and forty-two when he explained it in terms which would launch the quantum revolution in physics. He was in his fifties when he discovered the zero-point energy of the vacuum, and remained engaged and active in science until shortly before his death in 1947 at the age of 89. As theoretical physics editor for the then most prestigious physics journal in the world, Annalen der Physik, in 1905 he approved publication of Einstein's special theory of relativity, embraced the new ideas from a young outsider with neither a Ph.D. nor an academic position, extended the theory in his own work in subsequent years, and was instrumental in persuading Einstein to come to Berlin, where the two became close friends.

Sometimes the simplest puzzles lead to the most profound of insights. At the end of the nineteenth century, the radiation emitted by heated bodies was such a conundrum. All objects emit electromagnetic radiation due to the thermal motion of their molecules. If an object is sufficiently hot, such as the filament of an incandescent lamp or the surface of the Sun, some of the radiation will fall into the visible range and be perceived as light. Cooler objects emit in the infrared or lower frequency bands and can be detected by instruments sensitive to them. The radiation emitted by a hot object has a characteristic spectrum (the distribution of energy by frequency), and has a peak which depends only upon the temperature of the body. One of the simplest cases is that of a black body, an ideal object which perfectly absorbs all incident radiation. Consider an ideal closed oven which loses no heat to the outside. When heated to a given temperature, its walls will absorb and re-emit radiation, with the spectrum depending upon its temperature. But the equipartition theorem, a cornerstone of statistical mechanics, predicted that the absorption and re-emission of radiation in the closed oven would pour ever more energy into ever higher frequencies, with the total energy diverging to infinity: the so-called ultraviolet catastrophe. Not only did this violate the law of conservation of energy, it was an affront to common sense: closed ovens do not explode like nuclear bombs. And yet the theory which predicted this behaviour, the Rayleigh-Jeans law, made perfect sense based upon the motion of atoms and molecules, correctly predicted numerous physical phenomena, and was correct for thermal radiation at low frequencies.

At the time Planck took up the problem of thermal radiation,

experimenters in Germany were engaged in measuring the radiation

emitted by hot objects with ever-increasing precision, confirming

the discrepancy between theory and reality, and falsifying several

attempts to explain the measurements. In December 1900, Planck

presented his new theory of black body radiation and what is

now called

Planck's Law

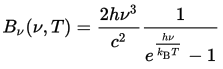

at a conference in Berlin. Written in modern notation, his

formula for the energy emitted by a body of temperature

T at frequency ν is:

This equation not only correctly predicted the results measured in the laboratories, it avoided the ultraviolet catastrophe, as it predicted that the radiation emitted falls off exponentially above a frequency set by the object's temperature. This meant that the absorption and re-emission of radiation in the closed oven could never run away to infinity, because essentially no energy could be emitted far above the limit imposed by the temperature. Fine: the theory explained the measurements. But what did it mean? More than a century later, we're still trying to figure that out. Planck modeled the walls of the oven as a series of resonators, but unlike earlier theories in which each could emit energy at any frequency, he constrained them to produce discrete chunks of energy with a value determined by the frequency emitted. This had the effect of suppressing emission at frequencies whose energy chunks exceed the thermal energy available. While this assumption yielded the correct result, Planck, deeply steeped in the nineteenth century tradition of the continuum, did not initially suggest that energy was actually emitted in discrete packets, considering this aspect of his theory “a purely formal assumption.” Planck's 1900 paper generated little reaction: it was observed to fit the data, but the theory and its implications went over the heads of most physicists. In 1905, in his capacity as editor of Annalen der Physik, he read and approved the publication of Einstein's paper on the photoelectric effect, which explained another physics puzzle by assuming that light was actually emitted in discrete bundles with an energy determined by its frequency. But Planck, whose equation manifested the same property, wasn't ready to go that far. 
As late as 1913, he wrote of Einstein, “That he might sometimes have overshot the target in his speculations, as for example in his light quantum hypothesis, should not be counted against him too much.” Only in the 1920s did Planck fully accept the implications of his work as embodied in the emerging quantum theory.
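
To see numerically how Planck's Law tames the ultraviolet catastrophe, here is a minimal Python sketch comparing it with the classical Rayleigh-Jeans law, 2ν²k_BT/c², for an oven at an illustrative 1500 K. The temperature and sample frequencies are arbitrary choices, and the constants are modern SI values rather than the ones Planck used. The ratio of the two laws works out to x/(eˣ − 1) with x = hν/k_BT: close to 1 at low frequencies, where the classical law holds, and exponentially small at high frequencies, where the classical law keeps growing without bound.

```python
import math

H = 6.62607015e-34    # Planck's constant, J s (exact SI value)
K_B = 1.380649e-23    # Boltzmann's constant, J/K (exact SI value)
C = 2.99792458e8      # speed of light, m/s

def planck(nu: float, t: float) -> float:
    """Spectral radiance B_nu(T) from Planck's law, in W sr^-1 m^-2 Hz^-1."""
    x = H * nu / (K_B * t)
    # expm1(x) = e^x - 1, accurate even when x is tiny (low frequencies)
    return 2 * H * nu**3 / C**2 / math.expm1(x)

def rayleigh_jeans(nu: float, t: float) -> float:
    """Classical Rayleigh-Jeans prediction, 2 nu^2 k_B T / c^2 (grows as nu^2)."""
    return 2 * nu**2 * K_B * t / C**2

T = 1500.0  # illustrative oven temperature in kelvin (an arbitrary choice)
for nu in (1e11, 1e13, 1e14, 1e15, 1e16):
    ratio = planck(nu, T) / rayleigh_jeans(nu, T)
    print(f"{nu:8.0e} Hz  Planck / Rayleigh-Jeans = {ratio:.3e}")
```

At the lowest frequency the two laws agree almost exactly; by 10¹⁶ Hz Planck's prediction is well over a hundred orders of magnitude below the classical one, which is why the total energy stays finite.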

The equation for Planck's Law contained two new fundamental physical constants: Planck's constant (h) and Boltzmann's constant (kB). (Boltzmann's constant was named in memory of Ludwig Boltzmann, the pioneer of statistical mechanics, who committed suicide in 1906. The constant was first introduced by Planck in his theory of thermal radiation.) Planck realised that these new constants, which related the worlds of the very large and very small, together with other physical constants such as the speed of light (c), the gravitational constant (G), and the Coulomb constant (ke), allowed defining a system of units for quantities such as length, mass, time, electric charge, and temperature which were truly fundamental: derived from the properties of the universe we inhabit, and therefore comprehensible to intelligent beings anywhere in the universe. Most systems of measurement are derived from parochial anthropocentric quantities such as the temperature of somebody's armpit or the supposed distance from the north pole to the equator. Planck's natural units have no such dependencies, and when one does physics using them, equations become simpler and more comprehensible. The magnitudes of the Planck units are so far removed from the human scale they're unlikely to find any application outside theoretical physics (imagine speed limit signs expressed in a fraction of the speed of light, or road signs giving distances in Planck lengths of 1.62×10⁻³⁵ metres), but they reflect the properties of the universe and may indicate the limits of our ability to understand it (for example, it may not be physically meaningful to speak of a distance smaller than the Planck length or an interval shorter than the Planck time [5.39×10⁻⁴⁴ seconds]).
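
The Planck length and time quoted above follow from the constants in a few lines. This minimal Python sketch assumes the modern convention, which combines the reduced Planck constant ħ = h/2π with G and c; Planck's own 1899 definitions used h itself and give values larger by a factor of √(2π). The constant values are the CODATA 2018 recommended ones.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant h/(2*pi), J s
G = 6.67430e-11         # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8        # speed of light, m/s

# Each unit is the unique combination of hbar, G, and c with the right dimensions.
planck_length = math.sqrt(HBAR * G / C**3)  # metres
planck_time = math.sqrt(HBAR * G / C**5)    # seconds; equals planck_length / c
planck_mass = math.sqrt(HBAR * C / G)       # kilograms

print(f"Planck length: {planck_length:.3e} m")
print(f"Planck time:   {planck_time:.3e} s")
print(f"Planck mass:   {planck_mass:.3e} kg")
```

The Planck time is simply the time light takes to cross one Planck length, which the ratio of the two formulas makes explicit.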

Planck's life was long and productive, and he enjoyed robust health (he continued his long hikes in the mountains into his eighties), but was marred by tragedy. His first wife, Marie, died of tuberculosis in 1909. He outlived four of his five children. His son Karl was killed in 1916 in World War I. His two daughters, Grete and Emma, both died in childbirth, in 1917 and 1919. His son and close companion Erwin, who survived capture and imprisonment by the French during World War I, was arrested and executed by the Nazis in 1945 on suspicion of involvement in the Stauffenberg plot to assassinate Hitler. (There is no evidence Erwin was a part of the conspiracy, but he was anti-Nazi and knew some of those involved in the plot.) Planck was repulsed by the Nazis, especially after a private meeting with Hitler in 1933, but continued in his post as the head of the Kaiser Wilhelm Society until 1937. He considered himself a German patriot and never considered emigrating (and doubtless his being 75 years old when Hitler came to power was a consideration). He opposed and resisted the purging of Jews from German scientific institutions and the campaign against “Jewish science”, but when ordered to dismiss non-Aryan members of the Kaiser Wilhelm Society, he complied. When Heisenberg approached him for guidance, he said, “You have come to get my advice on political questions, but I am afraid I can no longer advise you. I see no hope of stopping the catastrophe that is about to engulf all our universities, indeed our whole country. … You simply cannot stop a landslide once it has started.” Planck's house near Berlin was destroyed in an Allied bombing raid in February 1944, and with it a lifetime of his papers, photographs, and correspondence. (He and his second wife Marga had evacuated to Rogätz in 1943 to escape the raids.) 
As a result, historians have only limited primary sources from which to work, and the present book does an excellent job of recounting the life and science of a man whose work laid part of the foundations of twentieth century science.

- Callender, Craig and Nick Huggett, eds. Physics Meets Philosophy at the Planck Scale. Cambridge: Cambridge University Press, 2001. ISBN 0-521-66445-4.

- Carr, Bernard, ed. Universe or Multiverse? Cambridge: Cambridge University Press, 2007. ISBN 0-521-84841-5.

- Before embarking upon his ultimately successful quest to discover the laws of planetary motion, Johannes Kepler tried to explain the sizes of the orbits of the planets from first principles: developing a mathematical model of the orbits based upon nested Platonic solids. Since, at the time, the solar system was believed by most to be the entire universe (with the fixed stars on a sphere surrounding it), it seemed plausible that the dimensions of the solar system would be fixed by fundamental principles of science and mathematics. Even though he eventually rejected his model as inaccurate, he never completely abandoned it—it was for later generations of astronomers to conclude that there is nothing fundamental whatsoever about the structure of the solar system: it is simply a contingent product of the history of its condensation from the solar nebula, and could have been entirely different. With the discovery of planets around other stars in the late twentieth century, we now know that not only do planetary systems vary widely, many are substantially more weird than most astronomers or even science fiction writers would have guessed. Since the completion of the Standard Model of particle physics in the 1970s, a major goal of theoretical physicists has been to derive, from first principles, the values of the more than twenty-five “free parameters” of the Standard Model (such as the masses of particles, relative strengths of forces, and mixing angles). At present, these values have to be measured experimentally and put into the theory “by hand”, and there is no accepted physical explanation for why they have the values they do. 
Further, many of these values appear to be “fine-tuned” to allow the existence of life in the universe (or at least, life which resembles ourselves)—a tiny change, for example, in the mass ratio of the up and down quarks and the electron would result in a universe with no heavy elements or chemistry; it's hard to imagine any form of life which could be built out of just protons or neutrons. The emergence of a Standard Model of cosmology has only deepened the mystery, adding further apparent fine-tunings to the list. Most stunning is the cosmological constant, which appears to have a nonzero value which is 124 orders of magnitude smaller than predicted from a straightforward calculation from quantum physics. One might take these fine-tunings as evidence of a benevolent Creator (which is, indeed, discussed in chapters 25 and 26 of this book), or of our living in a simulation crafted by a clever programmer intent on optimising its complexity and degree of interestingness (chapter 27). But most physicists shy away from such deus ex machina and “we is in machina” explanations and seek purely physical reasons for the values of the parameters we measure. Now let's return for a moment to Kepler's attempt to derive the orbits of the planets from pure geometry. The orbit of the Earth appears, in fact, fine-tuned to permit the existence of life. Were it more elliptical, or substantially closer to or farther from the Sun, persistent liquid water on the surface would not exist, as seems necessary for terrestrial life. The apparent fine-tuning can be explained, however, by the high probability that the galaxy contains a multitude of planetary systems of every possible variety, and such a large ensemble is almost certain to contain a subset (perhaps small, but not void) in which an earthlike planet is in a stable orbit within the habitable zone of its star. 
Since we can only have evolved and exist in such an environment, we should not be surprised to find ourselves living on one of these rare planets, even though such environments represent an infinitesimal fraction of the volume of the galaxy and universe. As efforts to explain the particle physics and cosmological parameters have proved frustrating, and theoretical investigations into cosmic inflation and string theory have suggested that the values of the parameters may have simply been chosen at random by some process, theorists have increasingly been tempted to retrace the footsteps of Kepler and step back from trying to explain the values we observe, and instead view them, like the masses and the orbits of the planets, as the result of an historical process which could have produced very different results. The apparent fine-tuning for life is like the properties of the Earth's orbit—we can only measure the parameters of a universe which permits us to exist! If they didn't, we wouldn't be here to do the measuring. But note that like the parallel argument for the fine-tuning of the orbit of the Earth, this only makes sense if there are a multitude of actually existing universes with different random settings of the parameters, just as only a large ensemble of planetary systems can contain a few like the one in which we find ourselves. This means that what we think of as our universe (everything we can observe or potentially observe within the Hubble volume) is just one domain in a vastly larger “multiverse”, most or all of which may remain forever beyond the scope of scientific investigation. Now such a breathtaking concept provides plenty for physicists, cosmologists, philosophers, and theologians to chew upon, and macerate it they do in this thick (517 page), heavy (1.2 kg), and expensive (USD 85) volume, which is drawn from papers presented at conferences held between 2001 and 2005. 
Contributors include two Nobel laureates (Steven Weinberg and Frank Wilczek), and just about everybody else prominent in the multiverse debate, including Martin Rees, Stephen Hawking, Max Tegmark, Andrei Linde, Alexander Vilenkin, Renata Kallosh, Leonard Susskind, James Hartle, Brandon Carter, Lee Smolin, George Ellis, Nick Bostrom, John Barrow, Paul Davies, and many more. The editor's goal was that the papers be written for the intelligent layman: like articles in the pre-dumbed-down Scientific American or “front of book” material in Nature or Science. In fact, the chapters vary widely in technical detail and difficulty; if you don't follow this stuff closely, your eyes may glaze over in some of the more equation-rich chapters. This book is far from a cheering section for multiverse theories: both sides are presented and, in fact, the longest chapter is that of Lee Smolin, which deems the anthropic principle and anthropic arguments entirely nonscientific. Many of these papers are available in preliminary form for free on the arXiv preprint server; if you can obtain a list of the chapter titles and authors from the book, you can read most of the content for free. Renata Kallosh's chapter contains an excellent example of why one shouldn't blindly accept the recommendations of a spelling checker. On p. 205, she writes “…the gaugino condensate looks like a fractional instant on effect…”—that's supposed to be “instanton”!

- Carroll, Sean. From Eternity to Here. New York: Dutton, 2010. ISBN 978-0-525-95133-9.

- The nature of time has perplexed philosophers and scientists from the ancient Greeks (and probably before) to the present day. Despite two and a half millennia of reflexion upon the problem and spectacular success in understanding many other aspects of the universe we inhabit, not only has little progress been made on the question of time, but to a large extent we are still puzzling over the same problems which vexed thinkers in the time of Socrates: Why does there seem to be an inexorable arrow of time which can be perceived in physical processes (you can scramble an egg, but just try to unscramble one)? Why do we remember the past, but not the future? Does time flow by us, living in an eternal present, or do we move through time? Do we have free will, or is that an illusion and is the future actually predestined? Can we travel to the past or to the future? If we are typical observers in an eternal or very long-persisting universe, why do we find ourselves so near its beginning (the big bang)? Indeed, what we have learnt about time makes these puzzles even more enigmatic. For it appears, based both on theory and all experimental evidence to date, that the microscopic laws of physics are completely reversible in time: any physical process can (and does) go in both the forward and reverse time directions equally well. (Actually, it's a little more complicated than that: just reversing the direction of time does not yield identical results, but simultaneously reversing the direction of time [T], interchanging left and right [parity: P], and swapping particles for antiparticles [charge: C] yields identical results under the so-called “CPT” symmetry which, as far as is known, is absolute. The tiny violation of time reversal symmetry by itself in weak interactions seems, to most physicists, inadequate to explain the perceived unidirectional arrow of time, although some disagree.) 
In this book, the author argues that the way in which we perceive time here and now (whatever “now” means) is a direct consequence of the initial conditions which obtained at the big bang—the beginning of time, and the future state into which the universe is evolving—eternity. Whether or not you agree with the author's conclusions, this book is a tour de force popular exposition of thermodynamics and statistical mechanics, which provides the best intuitive grasp of these concepts of any non-technical book I have yet encountered. The science and ideas which influenced thermodynamics and its practical and philosophical consequences are presented in a historical context, showing how in many cases phenomenological models were successful in grasping the essentials of a physical process well before the actual underlying mechanisms were understood (which is heartening to those trying to model the very early universe absent a theory of quantum gravity). Carroll argues that the Second Law of Thermodynamics entirely defines the arrow of time. Closed systems (and for the purpose of the argument here we can consider the observable universe as such a system, although it is not precisely closed: particles enter and leave our horizon as the universe expands and that expansion accelerates) always evolve from a state of lower probability to one of higher probability: the “entropy” of a system is (sloppily stated) a measure of the probability of finding the system in a given macroscopically observable state, and over time the entropy always stays the same or increases; except for minor fluctuations, the entropy increases until the system reaches equilibrium, after which it simply fluctuates around the equilibrium state with essentially no change in its coarse-grained observable state. 
What we perceive as the arrow of time is simply systems evolving from less probable to more probable states, and since they (in isolation) never go the other way, we naturally observe the arrow of time to be universal. Look at it this way—there are vastly fewer configurations of the atoms which make up an egg as produced by a chicken: shell outside, yolk in the middle, and white in between, than there are for the same egg scrambled in the pan with the fragments of shell discarded in the rubbish bin. There are an almost inconceivable number of ways in which the atoms of the yolk and white can mix to make the scrambled egg, but far fewer ways they can end up neatly separated inside the shell. Consequently, if we see a movie of somebody unscrambling an egg, the white and yolk popping up from the pan to be surrounded by fragments which fuse into an unbroken shell, we know some trickster is running the film backward: it illustrates a process where the entropy dramatically decreases, and that never happens in the real world. (Or, more precisely, its probability of happening anywhere in the universe in the time since the big bang is “beyond vanishingly small”.) Now, once you understand these matters, as you will after reading the pellucid elucidation here, it all seems pretty straightforward: our universe is evolving, like all systems, from lower entropy to higher entropy, and consequently it's only natural that we perceive that evolution as the passage of time. We remember the past because the process of storing those memories increases the entropy of the universe; we cannot remember the future because we cannot predict the precise state of the coarse-grained future from that of the present, simply because there are far more possible states in the future than at the present. Seems reasonable, right? Well, up to a point, Lord Copper. 
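
The egg argument is, at bottom, counting. As a deliberately crude toy model (an illustrative assumption, not anything taken from the book), treat the egg as n “yolk” and n “white” particles occupying 2n sites, where the “separated” state is the single arrangement with every yolk particle in the yolk's half of the sites. The total number of arrangements is the binomial coefficient C(2n, n), so the chance of stumbling on the separated state at random is 1 in C(2n, n), and the Boltzmann entropy S = k_B ln W of the “any arrangement” macrostate exceeds that of the separated one by k_B ln C(2n, n). The helper name `arrangements` is hypothetical.

```python
import math

def arrangements(n_yolk: int, n_white: int) -> int:
    """Ways to place n_yolk indistinguishable yolk particles among
    n_yolk + n_white sites (the rest are white). Exactly one of these
    puts every yolk particle in the designated yolk region."""
    return math.comb(n_yolk + n_white, n_yolk)

for n in (5, 50, 500):
    ways = arrangements(n, n)
    # Entropy gained on mixing, in units of k_B: ln(W_all) - ln(W_separated), W_separated = 1
    print(f"{n}+{n} particles: {ways:.3e} arrangements, "
          f"delta S / k_B = {math.log(ways):.1f}")
```

Even with only a thousand particles the odds against the separated state are about 1 in 10²⁹⁹; a real egg contains well over 10²⁴ atoms.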
The real mystery, to which Roger Penrose and others have been calling attention for some years, is not that entropy is increasing in our universe, but rather why it is presently so low compared to what it might be expected to be in a universe in a randomly chosen configuration, and further, why it was so absurdly low in the aftermath of the big bang. Given the initial conditions after the big bang, it is perfectly reasonable to expect the universe to have evolved to something like its present state. But this says nothing at all about why the big bang should have produced such an incomprehensibly improbable set of initial conditions. If you think about entropy in the usual thermodynamic sense of gas in a box, the evolution of the universe seems distinctly odd. After the big bang, the region which represents today's observable universe appears to have been a thermalised system of particles and radiation very near equilibrium, and yet today we see nothing of the sort. Instead, we see complex structure at scales from molecules to superclusters of galaxies, with vast voids in between, and stars profligately radiating energy into space with a temperature less than three degrees above absolute zero. That sure doesn't look like entropy going down: it's more like your leaving a pot of tepid water on the counter top overnight and, the next morning, finding a village of igloos surrounding a hot spring. I mean, it could happen, but how probable is that? It's gravity that makes the difference. Unlike all of the other forces of nature, gravity always attracts. This means that when gravity is significant (which it isn't in a steam engine or pan of water), a gas at thermal equilibrium is actually in a state of very low entropy. Any small compression or rarefaction in a region will cause particles to be gravitationally attracted to volumes with greater density, which will in turn reinforce the inhomogeneity, which will amplify the gravitational attraction. 
The gas at thermal equilibrium will, then, unless it is perfectly homogeneous (which quantum and thermal fluctuations render impossible) collapse into compact structures separated by voids, with the entropy increasing all the time. Voilà galaxies, stars, and planets. As sources of energy are exhausted, gravity wins in the end, and as structures compact ever more, entropy increasing apace, eventually the universe is filled only with black holes (with vastly more entropy than the matter and energy that fell into them) and cold dark objects. But wait, there's more! The expansion of the universe is accelerating, so any structures which are not gravitationally bound will eventually disappear over the horizon and the remnants (which may ultimately decay into a gas of unbound particles, although the physics of this remains speculative) will occupy a nearly empty expanding universe (absurd as this may sound, this de Sitter space is an exact solution to Einstein's equations of General Relativity). This, the author argues, is the highest entropy state of matter and energy in the presence of gravitation, and it appears from current observational evidence that that's indeed where we're headed. So, it's plausible the entire evolution of the universe from the big bang into the distant future increases entropy all the way, and hence there's no mystery why we perceive an arrow of time pointing from the hot dense past to cold dark eternity. But doggone it, we still don't have a clue why the big bang produced such low entropy! 
The author surveys a number of proposed explanations: some invoke fine-tuning with no apparent physical explanation; some summon an enormous (or infinite) “multiverse” of all possibilities and argue that among such an ensemble, we find ourselves in one of the vanishingly small fraction of universes like our own because observers like ourselves couldn't exist in all the others (the anthropic argument); and some argue that the big bang was not actually the beginning and that some dynamical process which preceded the big bang (which might then be considered a “big bounce”) forced the initial conditions into a low entropy state. There are many excellent arguments against these proposals, which are clearly presented. The author's own favourite, which he concedes is as speculative as all the others, is that de Sitter space is unstable against a quantum fluctuation which nucleates a disconnected bubble universe in which entropy is initially low. The process of nucleation increases entropy in the multiverse, and hence there is no upper bound at all on entropy, with the multiverse eternal in past and future, and entropy increasing forever without bound in the future and decreasing without bound in the past. (If you're a regular visitor here, you know what's coming, don't you?) Paging Friar Ockham! We start out having discovered yet another piece of evidence for what appears to be a fantastically improbable fine-tuning of the initial conditions of our universe. The deeper we investigate this, the more mysterious it appears, as we discover no reason in the dynamical laws of physics for the initial conditions to have been so unlikely among the ensemble of possible initial conditions. 
We are then faced with the “trichotomy” I discussed regarding the origin of life on Earth: chance (it just happened to be that way, or it was every possible way, and we, tautologically, live in one of the universes in which we can exist), necessity (some dynamical law which we haven't yet figured out caused the initial conditions to be the way we observe them to have been), or (and here's where all the scientists turn their backs upon me, snuff the candles, and walk away) design. Yes, design. Suppose (and yes, I know, I've used this analogy before and will certainly do so again) you were a character in a video game who somehow became sentient and began to investigate the universe you inhabited. As you did, you'd discover there were distinct regularities which governed the behaviour of objects and their interactions. As you probed deeper, you might be able to access the machine code of the underlying simulation (or at least get a glimpse into its operation by running precision experiments). You would discover that compared to a random collection of bits of the same length, it was in a fantastically improbable configuration, and you could find no plausible way that a random initial configuration could evolve into what you observe today, especially since you'd found evidence that your universe was not eternally old but rather came into being at some time in the past (when, say, the game cartridge was inserted). What would you conclude? Well, if you exclude the design hypothesis, you're stuck with supposing that there may be an infinity of universes like yours in all random configurations, and you observe the one you do because you couldn't exist in all but a very few improbable configurations of that ensemble. 
Or you might argue that some process you haven't yet figured out caused the underlying substrate of your universe to assemble itself, complete with the copyright statement and the Microsoft security holes, from a generic configuration beyond your ability to observe in the past. And being clever, you'd come up with persuasive arguments as to how these most implausible circumstances might have happened, even at the expense of invoking an infinity of other universes, unobservable in principle, and an eternity of time, past and future, in which events could play out. Or, you might conclude from the quantity of initial information you observed (which is identical to low initial entropy) and the improbability of that configuration having been arrived at by random processes on any imaginable time scale, that it was put in from the outside by an intelligent designer: you might call Him or Her the Programmer, and some might even come to worship this being, outside the observable universe, which is nonetheless responsible for its creation and the wildly improbable initial conditions which permit its inhabitants to exist and puzzle out their origins. Suppose you were running a simulation of a universe, and to win the science fair you knew you'd have to show the evolution of complexity all the way from the get-go to the point where creatures within the simulation started to do precision experiments, discover curious fine-tunings and discrepancies, and begin to wonder…? Would you start your simulation at a near-equilibrium condition? Only if you were a complete idiot (nothing would ever happen), and whatever you might say about post-singularity super-kids, they aren't idiots (well, let's not talk about the music they listen to, if you can call that music). No, you'd start the simulation with extremely low entropy, with just enough inhomogeneity that gravity would get into the act and drive the emergence of hierarchical structure. 
(Actually, if you set up quantum mechanics the way we observe it, you wouldn't have to put in the inhomogeneity; it will emerge from quantum fluctuations all by itself.) And of course you'd fine tune the parameters of the standard model of particle physics so your universe wouldn't immediately turn entirely into neutrons, diprotons, or some other dead end. Then you'd sit back, turn up the volume on the MultIversePod, and watch it run. Sure 'nuff, after a while there'd be critters trying to figure it all out, scratching their balding heads, and wondering how it came to be that way. You would be most amused as they excluded your existence as a hypothesis, publishing theories ever more baroque to exclude the possibility of design. You might be tempted to…. Fortunately, this chronicle does not publish comments. If you're sending them from the future, please use the antitelephone. (The author discusses this “simulation argument” in endnote 191. He leaves it to the reader to judge its plausibility, as do I. I remain on the record as saying, “more likely than not”.) Whatever you may think about the Big Issues raised here, if you've never experienced the beauty of thermodynamics and statistical mechanics at a visceral level, this is the book to read. I'll bet many engineers who have been completely comfortable with computations in “thermogoddamics” for decades finally discover they “get it” after reading this equation-free treatment aimed at a popular audience.

- Carroll, Sean. The Particle at the End of the Universe. New York: Dutton, 2012. ISBN 978-0-525-95359-3.

-

I believe human civilisation is presently in a little-perceived race between sinking into an entropic collapse, extinguishing liberty and individual initiative, and a technological singularity which will simply transcend all of the problems we presently find so daunting and intractable. If things end badly, our descendants may look upon our age as one of extravagance, where vast resources were expended in a quest for pure knowledge without any likelihood of practical applications.

Thus, the last decade has seen the construction of what is arguably the largest and most complicated machine ever built by our species, the Large Hadron Collider (LHC), to search for and determine the properties of elementary particles: the most fundamental constituents of the universe we inhabit. This book, accessible to the intelligent layman, recounts the history of the quest for the components from which everything in the universe is made, the ever more complex and expensive machines we've constructed to explore them, and the intricate interplay between theory and experiment which this enterprise has entailed.

At centre stage in this narrative is the Higgs particle, first proposed in 1964 to account for the broken symmetry in the electroweak sector (as we'd now say), which gives mass to the W and Z bosons (accounting for the short range of the weak interaction) and to the electron. (It is often sloppily said that the Higgs mechanism explains the origin of mass. In fact, as Frank Wilczek explains in The Lightness of Being [March 2009], around 95% of all hadronic mass in the universe is pure E=mc² wiggling of quarks and gluons within the protons and neutrons of atomic nuclei.) Still, the Higgs is important: if it didn't exist the particles we're made of would all be massless, travel at the speed of light, and never aggregate into stars, planets, physicists, or most importantly, computer programmers. On the other hand, there wouldn't be any politicians.
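Wilczek's point can be checked with rough arithmetic. This is a minimal sketch, assuming approximate Particle Data Group light-quark masses; these values are inputs assumed here, not taken from the book, and quark masses are scheme-dependent and uncertain at the tens-of-percent level. It compares the rest masses of a nucleon's valence quarks (the part the Higgs mechanism supplies) with the nucleon's measured mass.

```python
# Approximate light-quark "current" masses in MeV (PDG, MS-bar scheme at 2 GeV).
# Assumed inputs for illustration: scheme-dependent and uncertain by roughly 10-20%.
M_UP = 2.16
M_DOWN = 4.67

# Measured nucleon masses in MeV.
M_PROTON = 938.272
M_NEUTRON = 939.565

def quark_mass_fraction(valence_masses: list[float], hadron_mass: float) -> float:
    """Fraction of a hadron's mass accounted for by its valence-quark rest masses."""
    return sum(valence_masses) / hadron_mass

proton = quark_mass_fraction([M_UP, M_UP, M_DOWN], M_PROTON)       # uud
neutron = quark_mass_fraction([M_UP, M_DOWN, M_DOWN], M_NEUTRON)   # udd

for name, frac in (("proton", proton), ("neutron", neutron)):
    # The remainder is the energy of quark motion and gluon fields, via E = mc².
    print(f"{name}: {frac:.1%} from quark rest masses, {1 - frac:.1%} from field energy")
```

The quark rest masses come to only about one percent of either nucleon's mass, so this crude subtraction is comfortably compatible with the figure quoted above; a careful decomposition of the nucleon mass is subtler than this.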

The LHC accelerates protons (the nuclei of hydrogen, which delightfully come from a little cylinder of hydrogen gas shown on p. 310, which contains enough to supply the LHC with protons for about a billion years) to energies so great that these particles, when they collide, have about the same energy as a flying mosquito. You might wonder why the LHC collides protons with protons rather than with antiprotons, as the Tevatron did. Although colliding protons with antiprotons allows more of the collision energy to go into creating new particles, the LHC's strategy of very high luminosity (rate of collisions) would require far more antiprotons than its support facilities could produce, hence the choice of proton-proton collisions. While the energy of individual particles accelerated by the LHC is modest from our macroscopic perspective, the total energy of the beam circulating around the accelerator is intimidating: a full beam dump would suffice to melt a ton of copper. Be sure to step aside should this happen.
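The mosquito and copper comparisons are easy to check with back-of-the-envelope arithmetic. A minimal sketch, assuming the LHC design figures of 7 TeV per proton and 2808 bunches of 1.15 × 10¹¹ protons per beam, a 2.5 mg mosquito, and textbook thermal properties of copper; none of these numbers comes from the book, and the mosquito mass in particular is a rough guess.

```python
import math

EV_TO_J = 1.602176634e-19  # joules per electron-volt (exact SI definition)

# LHC design parameters (assumed here, not taken from the book).
BEAM_ENERGY_EV = 7e12          # energy per proton, 7 TeV
BUNCHES = 2808                 # bunches per beam
PROTONS_PER_BUNCH = 1.15e11

# Rough mosquito mass (hypothetical): 2.5 mg.
MOSQUITO_KG = 2.5e-6

# Copper thermal properties (approximate textbook values).
CU_SPECIFIC_HEAT = 385.0       # J/(kg·K)
CU_MELT_C = 1085.0             # melting point, °C
CU_LATENT_HEAT = 2.05e5        # heat of fusion, J/kg
ROOM_C = 20.0

proton_j = BEAM_ENERGY_EV * EV_TO_J
collision_j = 2 * proton_j     # head-on collision: both protons' energy is available

# Speed at which the mosquito's kinetic energy (½mv²) equals one collision.
mosquito_speed = math.sqrt(2 * collision_j / MOSQUITO_KG)

beam_j = proton_j * BUNCHES * PROTONS_PER_BUNCH
both_beams_j = 2 * beam_j

# Energy to heat one metric ton of copper from room temperature and then melt it.
melt_tonne_j = 1000.0 * (CU_SPECIFIC_HEAT * (CU_MELT_C - ROOM_C) + CU_LATENT_HEAT)

print(f"one proton: {proton_j:.2e} J; one collision: {collision_j:.2e} J")
print(f"mosquito speed for the same energy: {mosquito_speed:.2f} m/s")
print(f"stored energy per beam: {beam_j / 1e6:.0f} MJ, both beams: {both_beams_j / 1e6:.0f} MJ")
print(f"energy to melt a tonne of copper: {melt_tonne_j / 1e6:.0f} MJ")
```

Under these assumptions a single collision matches a mosquito drifting along at little more than a metre per second, and the two beams together store somewhat more energy than it takes to melt a metric ton of copper, so the claim holds up at design intensity.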

Has the LHC found the Higgs? Probably: the announcement on July 4th, 2012 by the two detector teams reported evidence for a particle with properties just as expected for the Higgs, so if it turned out to be something else, it would be a big surprise (but then Nature never signed a contract with scientists not to perplex them with misdirection). Unlike many popular accounts, this book looks beneath the hood and explores just how difficult it is to tease evidence for a new particle from the vast spray of debris that issues from particle collisions. It isn't as if a little ball with an “h” on it pops out and goes “bing” in the detector: in fact, a newly produced Higgs particle decays in about 10⁻²² seconds, even faster than assets entrusted to the management of Goldman Sachs. The debris which emerges from the demise of a Higgs particle isn't all that different from that produced by many other standard model events, so the evidence for the Higgs is essentially a “bump” in the rate of production of certain decay signatures over that expected from the standard model background (sources expected to occur in the absence of the Higgs). Estimating that background, in turn, requires a tremendous amount of theoretical and experimental input, as well as massive computer calculations; once you begin to understand this, you'll appreciate that the distinction between theory and experiment in particle physics is more fluid than you might have imagined.
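To make the idea of a “bump” over the standard model background concrete, here is a toy sketch. Everything in it is invented for illustration: the falling background shape, the size and width of a signal placed at 125 GeV, and the 7 GeV scan window. It uses idealised data with no statistical fluctuations and scores each window with the simple counting-experiment significance Z = √(2[(s + b) ln(1 + s/b) − s]) of Cowan, Cranmer, Gross and Vitells; the ATLAS and CMS analyses used far more elaborate likelihood fits over many decay channels.

```python
import math

BINS = list(range(100, 161))  # 1 GeV bins of reconstructed mass, 100-160 GeV

def background(m_gev: float) -> float:
    """Hypothetical smoothly falling background: expected events per 1 GeV bin."""
    return 5000.0 * math.exp(-(m_gev - 100.0) / 30.0)

def signal(m_gev: float, mass: float = 125.0, width: float = 1.5, total: float = 300.0) -> float:
    """Hypothetical Gaussian bump: `total` events spread around `mass` with resolution `width`."""
    return total * math.exp(-0.5 * ((m_gev - mass) / width) ** 2) / (width * math.sqrt(2.0 * math.pi))

def counting_significance(s: float, b: float) -> float:
    """Median significance of s signal events on top of b expected background events."""
    inside = 2.0 * ((s + b) * math.log1p(s / b) - s)
    return math.sqrt(max(inside, 0.0))  # guard against tiny negative rounding errors

# Idealised "observed" counts: background plus signal, with no random fluctuations.
expected_bkg = [background(m) for m in BINS]
observed = [background(m) + signal(m) for m in BINS]

def window_significance(centre: int, half_width: int = 3) -> float:
    """Excess of observed over expected events in the window centre ± half_width."""
    idx = [i for i, m in enumerate(BINS) if abs(m - centre) <= half_width]
    n = sum(observed[i] for i in idx)
    b = sum(expected_bkg[i] for i in idx)
    s = n - b
    return counting_significance(s, b) if s > 0 else 0.0

# Slide the window across the mass range and report the largest local excess.
scan = {m: window_significance(m) for m in BINS}
best = max(scan, key=scan.get)
print(f"largest local excess at {best} GeV, Z = {scan[best]:.2f} sigma")
```

Even this clean, fluctuation-free bump reaches under 3σ in its best window, well short of the 5σ convention for claiming a discovery; real analyses must also contend with random fluctuations and the “look-elsewhere” effect of scanning many mass windows.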

This book is a superb example of popular science writing, and its author has distinguished himself as a master of the genre. He doesn't pull any punches: after reading this book you'll understand, at least at a conceptual level, broken symmetries, scalar fields, particles as excitations of fields, and the essence of quantum mechanics, as summed up by Aatish Bhatia on Twitter: “Don't look: waves. Look: particles.”

- Charpak, Georges and Richard L. Garwin. Feux follets et champignons nucléaires. Paris: Odile Jacob, [1997] 2000. ISBN 978-2-7381-0857-9.