2019

February 2019

- Dutton, Edward and Michael A. Woodley of Menie. At Our Wits' End. Exeter, UK: Imprint Academic, 2018. ISBN 978-1-84540-985-2.


During the Great Depression, the Empire State Building was built, from the beginning of foundation excavation to official opening, in 410 days (less than 14 months). After the destruction of the World Trade Center in New York on September 11, 2001, design and construction of its replacement, the new One World Trade Center, was completed on November 3, 2014, 4801 days (about 158 months) later.

In the 1960s, from U.S. president Kennedy's proposal of a manned lunar mission to the landing of Apollo 11 on the Moon, 2978 days (almost 100 months) elapsed. In January 2004, U.S. president Bush announced the “Vision for Space Exploration”, aimed at a human return to the lunar surface by 2020. After a comical series of studies, revisions, cancellations, de-scopings, redesigns, schedule slips, and cost overruns, its successor now plans to launch a lunar flyby mission (not even a lunar orbit like Apollo 8) in June 2022, about 221 months later. A lunar landing is planned for no sooner than 2028, almost 300 months after the “vision”, and almost nobody believes that date (the landing craft design has not yet begun, and there is no funding for it in the budget).

Wherever you look, you see junk science, universities corrupted with bogus “studies” departments, politicians peddling discredited nostrums a moment's critical thinking reveals to be folly, an economy built upon an ever-increasing tower of debt that nobody really believes is ever going to be paid off, and a dearth of major, genuine innovations (as opposed to the incremental refinement of existing technologies that has driven the computing, communications, and information technology industries) in every field: science, technology, public policy, and the arts. It often seems like the world is getting dumber.

What if it really is?

That is the thesis explored by this insightful book, which is packed with enough “hate facts” to detonate the head of any bien pensant academic or politician. I define a “hate fact” as something which is indisputably true, well documented by evidence in the literature, and has not been contradicted, but the citation of which is considered “hateful”, can unleash outrage mobs upon anyone so foolish as to utter the fact in public, and can be a career-limiting move for those employed in Social Justice Warrior-converged organisations. (An example of a hate fact, unrelated to the topic of this book, is the FBI's violent crime statistics broken down by the race of the criminal and victim. Nobody disputes the accuracy of this information or the methodology by which it is collected, but woe betide anyone so foolish as to cite the data or draw the obvious conclusions from it.)

In April 2004 I made my own foray into the question of

declining intelligence in

“Global IQ: 1950–2050”

in which I combined estimates of the mean IQ of countries with

census data and forecasts of population growth to estimate global

mean IQ for a century starting at 1950. Assuming the mean IQ

of countries remains constant (which is optimistic, since part of

the population growth in high IQ countries with low fertility

rates is due to migration from countries with lower IQ), I found

that global mean IQ, which was 91.64 for a population of 2.55

billion in 1950, declined to 89.20 for the 6.07 billion alive

in 2000, and was expected to fall to 86.32 for the 9.06 billion

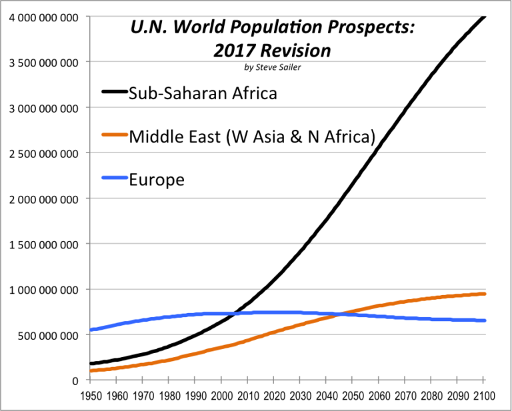

population forecast for 2050. This is mostly due to the

explosive population growth forecast for Sub-Saharan Africa,

where many of the populations with low IQ reside.
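The global figures above are population-weighted averages: each country's mean IQ counts in proportion to its population. The minimal sketch below uses two hypothetical regions (not the data from “Global IQ: 1950–2050”) to show how the global mean can fall even when every region's own mean stays fixed, simply because the weights shift.

```python
def weighted_mean_iq(regions):
    """Population-weighted mean IQ.

    regions: iterable of (population, mean_iq) pairs.
    """
    regions = list(regions)
    total_pop = sum(pop for pop, _ in regions)
    if total_pop <= 0:
        raise ValueError("total population must be positive")
    return sum(pop * iq for pop, iq in regions) / total_pop


# Hypothetical regions: national means never change, only populations do.
before = [(1.0e9, 100.0), (1.0e9, 80.0)]   # equal populations
after = [(1.1e9, 100.0), (3.0e9, 80.0)]    # lower-mean region grows faster

print(weighted_mean_iq(before))            # 90.0
print(round(weighted_mean_iq(after), 2))   # lower, although no region changed
```
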

This is a particularly dismaying prospect, because there is no evidence for sustained consensual self-government in nations with a mean IQ less than 90. But while I was examining global trends assuming national IQ remains constant, in the present book the authors explore the provocative question of whether the population of today's developed nations is becoming dumber due to the inexorable action of natural selection on whatever genes determine intelligence. The argument is relatively simple, but based upon a number of pillars, each of which is a “hate fact”, although non-controversial among those who study these matters in detail.

- There is a factor, “general intelligence” or g, which measures the ability to solve a wide variety of mental problems, and this factor, measured by IQ tests, is largely stable across an individual's life.

- Intelligence, as measured by IQ tests, is, like height, in part heritable. The heritability of IQ is estimated at around 80%, which means that about 80% of the variation in IQ among individuals in a population can be attributed to genetic differences, and 20% to other factors.

- IQ correlates positively with factors contributing to success in society. The correlation with performance in education is 0.7, with highest educational level completed 0.5, and with salary 0.3.

- In Europe, between 1400 and around 1850, the wealthier half of the population had more children who survived to adulthood than the poorer half.

- Because IQ correlates with social success, that portion of the population which was more intelligent produced more offspring.

- Just as in selective breeding of animals by selecting those with a desired trait for mating, this resulted in a population whose average IQ increased (slowly) from generation to generation over this half-millennium.
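The mechanism in the last few points is the one captured by the textbook breeder's equation of quantitative genetics, R = h²S: the change in the next generation's mean (R) is the heritability (h²) times the selection differential (S), the amount by which parents, weighted by their surviving children, exceed the population mean. This is an illustration under stated assumptions, not the authors' model: the group means and fertility figures are hypothetical, and treating the 80% estimate as the narrow-sense heritability the equation calls for is itself an assumption.

```python
def selection_differential(groups, population_mean):
    """Offspring-weighted parental mean minus the population mean.

    groups: (mean_iq, surviving_children_per_parent, share_of_population)
    """
    weight = sum(kids * share for _, kids, share in groups)
    parent_mean = sum(iq * kids * share for iq, kids, share in groups) / weight
    return parent_mean - population_mean


def response_to_selection(h2, s):
    """Breeder's equation: R = h^2 * S."""
    return h2 * s


# Hypothetical: the better-off half averages 103 and leaves 2.2 surviving
# children per parent; the poorer half averages 97 and leaves 1.8.
groups = [(103.0, 2.2, 0.5), (97.0, 1.8, 0.5)]
s = selection_differential(groups, 100.0)
r = response_to_selection(0.8, s)  # assumes h^2 = 0.8 (narrow-sense)
print(f"S = {s:.2f} points, R = {r:.2f} points per generation")
```

A gain of a fraction of a point per generation is what “increased (slowly)” looks like: small per generation, but cumulative over many generations.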

While this makes for a funny movie, if the population is really getting dumber, it will have profound implications for the future. There will not just be a falling general level of intelligence but far fewer of the genius-level intellects who drive innovation in science, the arts, and the economy. Further, societies which reach the point where this decline sets in well before others that have industrialised more recently will find themselves at a competitive disadvantage across the board. (U.S. and Europe, I'm talking about China, Korea, and [to a lesser extent] Japan.)

If you've followed the intelligence issue, about now you probably have steam coming out your ears waiting to ask, “But what about the Flynn effect?” IQ tests are usually “normed” to preserve the same mean and standard deviation (100 and 15 in the U.S. and Britain) over the years. James Flynn discovered that, in fact, on standardised tests which were not re-normed, measured IQ had rapidly increased in the 20th century in many countries around the world. The increases were sometimes breathtaking: on the standardised Raven's Progressive Matrices test (a nonverbal test considered to have little cultural bias), the scores of British schoolchildren increased by 14 IQ points (almost a full standard deviation) between 1942 and 2008. In the U.S., IQ scores seemed to be rising by around three points per decade, which would imply that people a hundred years ago were two standard deviations more stupid than those today, at the threshold of retardation. The slightest grasp of history (which, sadly, many people today lack) will show how absurd such a supposition is. What's going on, then? The authors join James Flynn in concluding that what we're seeing is an increase in the population's proficiency in taking IQ tests, not an actual increase in general intelligence (g).
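Two pieces of arithmetic in the passage above can be checked under the simple assumption that IQ is normally distributed with a standard deviation of 15: why a modest fall in the mean thins out the genius-level tail so sharply, and what a steady Flynn gain of three points per decade would imply a century back. The 145 threshold (three standard deviations above 100) is an illustrative choice, not one taken from the book.

```python
import math

SD = 15.0


def fraction_above(threshold, mean=100.0, sd=SD):
    """Share of a normal population scoring above threshold."""
    z = (threshold - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Tail effect: a 3-point drop in the mean roughly halves the share above 145.
now = fraction_above(145.0, mean=100.0)
later = fraction_above(145.0, mean=97.0)
print(f"{now:.5f} {later:.5f} ratio {later / now:.2f}")


def implied_mean_years_ago(years, gain_per_decade=3.0, today=100.0):
    """Back-project a constant, linear Flynn gain (an assumption)."""
    return today - gain_per_decade * years / 10.0


century_ago = implied_mean_years_ago(100)
print(century_ago, (100.0 - century_ago) / SD)  # 70.0 2.0

# The British Raven's gain of 14 points, in standard deviations.
print(round(14 / SD, 2))  # 0.93
```
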
Over time, children are exposed to more and more standardised tests and tasks which require the skills tested by IQ tests and, if practice doesn't make perfect, it makes better; with more exposure to media of all kinds, skills of memorisation, manipulation of symbols, and spatial perception will increase. These are correlates of g which IQ tests measure, but what we're seeing may be gains in specific skills which do not correlate with g itself. If this be the case, then eventually we should see the overall decline in general intelligence overtake the Flynn effect and result in a downturn in IQ scores. And this is precisely what appears to be happening.

Norway, Denmark, and Finland have almost universal male military service and give conscripts a standardised IQ test when they report for training. This provides a large database of men in these countries, starting in 1950 and updated yearly. What is seen is an increase in IQ, as expected from the Flynn effect, from the start of the records in 1950 through 1997, when the scores topped out and began to decline. In Norway, the decline since 1997 was 0.38 points per decade, while in Denmark it was 2.7 points per decade. Similar declines have been seen in Britain, France, the Netherlands, and Australia. (Note that this decline may be due to causes other than decreasing intelligence of the original population. Immigration from lower-IQ countries will also contribute to decreases in the mean score of the cohorts tested. But the consequences for countries with falling IQ may be the same regardless of the cause.)

There are other correlates of general intelligence which have little of the cultural bias of which some accuse IQ tests. They are largely based upon the assumption that g is something akin to the CPU clock speed of a computer: the ability of the brain to perform basic tasks.
These include simple reaction time (how quickly you can push a button, for example, when a light comes on), the ability to discriminate among similar colours, the use of uncommon words, and the ability to repeat a sequence of digits in reverse order. All of these measures (albeit often from very sparse data sets) are consistent with increasing general intelligence in Europe up to some time in the 19th century and a decline ever since.

If this is true, what does it mean for our civilisation? The authors contend that there is an inevitable cycle in the rise and fall of civilisations which has been seen many times in history. A society starts out with a low standard of living, high birth and death rates, and strong selection for intelligence. This increases the mean general intelligence of the population and, much faster, the fraction of genius-level intellects. These contribute to a growth in the standard of living in the society, better conditions for the poor, and eventually a degree of prosperity which reduces the infant and childhood death rate. Eventually, the birth rate falls, starting with the more intelligent and better-off portion of the population. The birth rate falls to or below replacement, with a higher fraction of births now from less intelligent parents. Mean IQ and the fraction of geniuses fall, the society falls into stagnation and decline, and it usually ends up being conquered or supplanted by a younger civilisation still on the rising part of the intelligence curve. They argue that this pattern can be seen in the histories of Rome, Islamic civilisation, and classical China.

And for the West: are we doomed to idiocracy? Well, there may be some possible escapes or technological fixes. We may discover the collection of genes responsible for the hereditary transmission of intelligence and develop interventions to select for them in the population. (Think this crosses the “ick factor”?
What parent would look askance at a pill which gave their child an IQ boost of 15 points? What government wouldn't make these pills available to all its citizens purely on the basis of international competitiveness?) We may send some tiny fraction of our population to Mars, space habitats, or other challenging environments where they will be re-subjected to intense selection for intelligence and breed a successor society (doubtless very different from our own) which will start again at the beginning of the eternal cycle. We may have a religious revival (they happen when you least expect them), which puts an end to the cult of pessimism, decline, and death and restores belief in large families and, with it, the selection for intelligence. (Some may look at Joseph Smith as a prototype of this, but so far the impact of his religion has been marginal outside areas where believers congregate.) Perhaps some of our increasingly sparse population of geniuses will figure out artificial general intelligence, and our mind children will slip the surly bonds of biology and its tedious eternal return to stupidity. We might embrace the decline but vow to preserve everything we've learned as a bequest to our successors, stored in multiple locations in ways the next Enlightenment, centuries hence, can build upon, just as scholars in the Renaissance rediscovered the works of the ancient Greeks and Romans. Or maybe we won't. In which case, “Winter has come and it's only going to get colder. Wrap up warm.”

Here is a James Delingpole interview of the authors and discussion of the book.

April 2019

- Nelson, Roger D. Connected: The Emergence of Global Consciousness. Princeton: ICRL Press, 2019. ISBN 978-1-936033-35-5.


In the first half of the twentieth century Pierre Teilhard de Chardin developed the idea that the process of evolution which had produced complex life and eventually human intelligence on Earth was continuing and destined to eventually reach an Omega Point in which, just as individual neurons self-organise to produce the unified consciousness and intelligence of the human brain, eventually individual human minds would coalesce (he was thinking mostly of institutions and technology, not a mystical global mind) into what he called the noosphere: a sphere of unified thought surrounding the globe just like the atmosphere. Could this be possible? Might the Internet be the baby picture of the noosphere? And if a global mind was beginning to emerge, might we be able to detect it with the tools of science? That is the subject of this book about the Global Consciousness Project, which has now been operating for more than two decades, collecting an immense data set which has been, from inception, completely transparent and accessible to anyone inclined to analyse it in any way they can imagine. Written by the founder of the project and operator of the network over its entire history, the book presents the history, technical details, experimental design, formal results, exploratory investigations from the data set, and thoughts about what it all might mean.

Over millennia, many esoteric traditions have held that “all is one”: that all humans and, in some systems of belief, all living things or all of nature are connected in some way and can interact in ways other than physical (ultimately mediated by the electromagnetic force). A common aspect of these philosophies and religions is that individual consciousness is independent of the physical being and may in some way be part of a larger, shared consciousness which we may be able to access through techniques such as meditation and prayer. In this view, consciousness may be thought of as a kind of “field” with the brain acting as a receiver in the same sense that a radio is a receiver of structured information transmitted via the electromagnetic field. Belief in reincarnation, for example, is often based upon the view that death of the brain (the receiver) does not destroy the coherent information in the consciousness field, which may later be instantiated in another living brain which may, under some circumstances, access memories and information from previous hosts.

Such beliefs have been common over much of human history and in a wide variety of very diverse cultures around the globe, but in recent centuries these beliefs have been displaced by the view of mechanistic, reductionist science, which argues that the brain is just a kind of (phenomenally complicated) biological computer and that consciousness can be thought of as an emergent phenomenon which arises when the brain computer's software becomes sufficiently complex to be able to examine its own operation. From this perspective, consciousness is confined within the brain, cannot affect the outside world or the consciousness of others except by physical interactions initiated by motor neurons, and perceives the world only through sensory neurons. There is no “consciousness field”, and individual consciousness dies when the brain does.

But while this view is more in tune with the scientific outlook which spawned the technological revolution that has transformed the world and continues to accelerate, it has, so far, made essentially zero progress in understanding consciousness. Although we have built electronic computers which can perform mathematical calculations trillions of times faster than the human brain, and are on track to equal the storage capacity of that brain some time in the next decade or so, we still don't have the slightest idea how to program a computer to be conscious: to be self-aware and act out of a sense of free will (if free will, however defined, actually exists). So, if we adopt a properly scientific and sceptical view, we must conclude that the jury is still out on the question of consciousness. If we don't understand enough about it to program it into a computer, then we can't be entirely confident that it is something we could program into a computer, or that it is just some kind of software running on our brain-computer.

It looks like humans are, dare I say, programmed to believe in consciousness as a force not confined to the brain. Many cultures have developed shamanism, religions, philosophies, and practices which presume the existence of the following kinds of what Dean Radin calls Real Magic, and which I quote from my review of his book with that title.

- Force of will: mental influence on the physical world, traditionally associated with spell-casting and other forms of “mind over matter”.

- Divination: perceiving objects or events distant in time and space, traditionally involving such practices as reading the Tarot or projecting consciousness to other places.

- Theurgy: communicating with non-material consciousness: mediums channelling spirits or communicating with the dead, summoning demons.

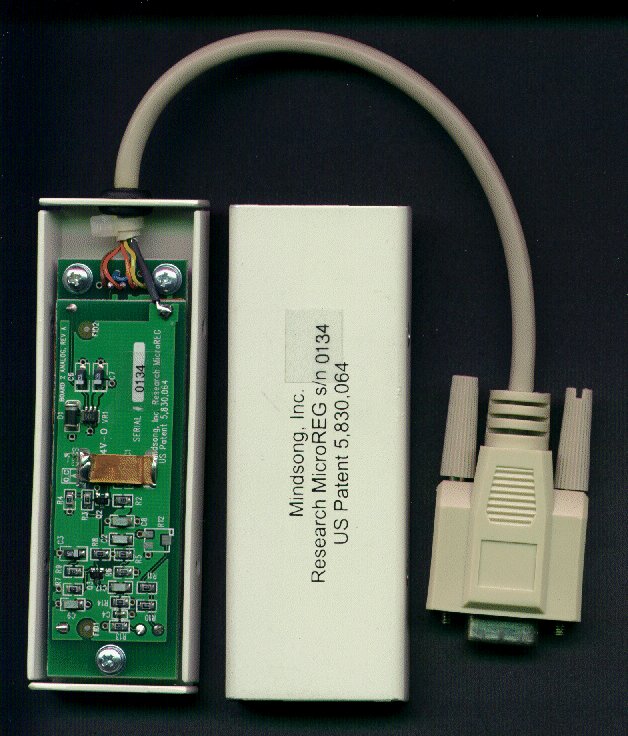

In 1998, Roger D. Nelson, the author of this book, realised that the rapid development and worldwide deployment of the Internet made it possible to expand the FieldREG concept to a global scale. Random event generators based upon quantum effects (usually shot noise from tunnelling across a back-biased Zener diode or a resistor) had been scaled down to small, inexpensive devices which could be attached to personal computers via an RS-232 serial port. With more and more people gaining access to the Internet (originally mostly via dial-up to commercial Internet Service Providers, then increasingly via persistent broadband connections such as ADSL service over telephone wires or a cable television connection), it might be possible to deploy a network of random event generators at locations all around the world, each of which would constantly collect timestamped data which would be transmitted to a central server, collected there, and made available to researchers for analysis by whatever means they chose to apply.

As Roger Nelson discussed the project with his son Greg (who would go on to be the principal software developer for the project), Greg suggested that what was proposed was essentially an electroencephalogram (EEG) for the hypothetical emerging global mind, an “ElectroGaiaGram” or EGG. Thus was born the “EGG Project” or, as it is now formally called, the Global Consciousness Project. Just as the many probes of an EEG provide a (crude) view into the operation of a single brain, perhaps the far-flung, always-on network of REGs would pick up evidence of coherence when a large number of the world's minds were focused on a single event or idea. Once the EGG project was named, terminology followed naturally: the individual hosts running the random event generators would be “eggs” and the central data archiving server the “basket”.

In April 1998, Roger Nelson released the original proposal for the project and shortly thereafter Greg Nelson began development of the egg and basket software. I became involved in the project in mid-summer 1998 and contributed code to the egg and basket software, principally to allow it to be portable to other variants of Unix systems (it was originally developed on Linux) and to machines with a different byte order than the Intel processors on which it ran, and also to reduce the resource requirements on the egg host, making it easier to run on a non-dedicated machine. I also contributed programs for the basket server to assemble daily data summaries from the raw data collected by the basket and to produce a real-time network status report. Evolved versions of these programs remain in use today, more than two decades later. On August 2nd, 1998, I began to run the second egg in the network, originally on a Sun workstation running Solaris; this was the first non-Linux, non-Intel, big-endian egg host in the network. A few days later, I brought up the fourth egg, running on a Sun server in the Hall of the Servers one floor below the second egg; this used a different kind of REG, but was otherwise identical. Both of these eggs have been in continuous operation from 1998 to the present (albeit with brief outages due to power failures, machine crashes, and other assorted disasters over the years), and have migrated from machine to machine over time. The second egg is now connected to a Raspberry Pi running Linux, while the fourth is now hosted on a Dell Intel-based server also running Linux, which was the first egg host to run on a 64-bit machine in native mode.
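The byte-order portability mentioned above comes down to agreeing on how multi-byte values are laid out when they leave a machine. The minimal sketch below uses a hypothetical record of a 32-bit Unix timestamp and a 16-bit sample (not the actual egg file or packet format, which isn't described here) and packs both in network (big-endian) byte order with Python's `struct` module, so a little-endian Intel host and a big-endian Sun host decode the same values.

```python
import struct

# Hypothetical record layout: "!" = network (big-endian) order, no padding;
# "I" = unsigned 32-bit timestamp, "H" = unsigned 16-bit sample.
RECORD = struct.Struct("!IH")


def encode(timestamp, sample):
    """Serialise one (timestamp, sample) pair identically on any host."""
    return RECORD.pack(timestamp, sample)


def decode(data):
    """Recover (timestamp, sample) regardless of the reader's native order."""
    return RECORD.unpack(data)


rec = encode(902016000, 103)
print(rec.hex())      # the same six bytes on every host
print(decode(rec))    # (902016000, 103)

# Reading the same bytes as little-endian garbles them: the portability
# bug that a fixed wire order avoids.
print(struct.unpack("<IH", rec))
```
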

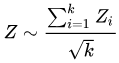

Here is precisely how the network measures deviation from the expectation for genuinely random data. The egg hosts all run a Network Time Protocol (NTP) client to provide accurate synchronisation with Internet time server hosts which are ultimately synchronised to atomic clocks or GPS. At the start of every second a total of 200 bits are read from the random event generator. Since all the existing generators provide eight bits of random data transmitted as bytes on a 9600 baud serial port, this involves waiting until the start of the second, reading 25 bytes from the serial port (first flushing any potentially buffered data), then breaking the eight bits out of each byte of data. A precision timing loop guarantees that the sampling starts at the beginning of the second-long interval to the accuracy of the computer's clock.

This process produces 200 random bits. These bits, one or zero, are summed to produce a “sample” which counts the number of one bits for that second. This sample is stored in a buffer on the egg host, along with a timestamp (in Unix time() format) which indicates when it was taken. Buffers of completed samples are archived in files on the egg host's file system. Periodically, the basket host will contact the egg host over the Internet and request any samples collected after the last packet it received from the egg host. The egg will then transmit any newer buffers it has filled to the basket. All communications are performed over the stateless UDP Internet protocol, and the design of the basket request and egg reply protocol is robust against loss of packets or packets being received out of order. (This data transfer protocol may seem odd, but recall that the network was designed more than twenty years ago when many people, especially those outside large universities and companies, had dial-up Internet access.
The architecture would allow a dial-up egg to collect data continuously and then, when it happened to be connected to the Internet, respond to a poll from the basket and transmit its accumulated data during the time it was connected. It also makes the network immune to random outages in Internet connectivity. Over two decades of operation, we have had exactly zero problems with Internet outages causing loss of data.)

When a buffer from an egg host is received by the basket, it is stored in a database directory for that egg. The buffer contains a time stamp identifying the second at which each sample within it was collected. All times are stored in Universal Time (UTC), so no correction for time zones or summer and winter time is required. This is the entire collection process of the network. The basket host, which was originally located at Princeton University and now is on a server at global-mind.org, only stores buffers in the database. Buffers, once stored, are never modified by any other program. Bad data, usually long strings of zeroes or ones produced when a hardware random event generator fails electrically, are identified by a “sanity check” program and then manually added to a “rotten egg” database which causes these sequences to be ignored by analysis programs. The random event generators are very simple and rarely fail, so this is a very unusual circumstance.

The raw database format is difficult for analysis programs to process, so every day an automated program (which I wrote) is run which reads the basket database, extracts every sample collected for the previous 24-hour period (or any desired 24-hour window in the history of the project), and creates a day summary file with a record for every second in the day and a column for the samples from each egg which reported that day. Missing data (eggs which did not report for that second) are indicated by a blank in that column.
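The collection scheme above tolerates lost, duplicated, and reordered UDP packets because the basket only ever asks for what it is still missing, and storing a buffer twice changes nothing. The sketch below illustrates that idea with hypothetical names (`Basket`, `next_request`, `receive`, `day_summary`) and a hypothetical sequence number on each buffer; it is not the project's code or wire protocol. It also builds summary rows in the layout described, one row per second and one column per egg with a blank where an egg did not report, written as comma-separated values.

```python
import csv
import io


class Basket:
    """Hypothetical basket: stores buffers per egg, keyed by sequence number."""

    def __init__(self):
        self.buffers = {}  # egg_id -> {seq: [(unix_time, sample), ...]}

    def next_request(self, egg_id):
        """First sequence number not yet held. The basket asks for it and
        everything after; anything already held is simply stored again."""
        have = self.buffers.get(egg_id, {})
        seq = 0
        while seq in have:
            seq += 1
        return seq

    def receive(self, egg_id, seq, samples):
        """Store a buffer; duplicates and out-of-order arrivals are harmless."""
        self.buffers.setdefault(egg_id, {})[seq] = list(samples)

    def day_summary(self, start, seconds):
        """CSV text: one row per second, one column per egg, blank if missing."""
        eggs = sorted(self.buffers)
        by_time = {
            egg: {t: x for buf in self.buffers[egg].values() for t, x in buf}
            for egg in eggs
        }
        out = io.StringIO()
        writer = csv.writer(out, lineterminator="\n")
        writer.writerow(["time"] + eggs)
        for t in range(start, start + seconds):
            writer.writerow([t] + [by_time[egg].get(t, "") for egg in eggs])
        return out.getvalue()


# Toy timestamps (100, 101, 102) stand in for Unix times.
basket = Basket()
basket.receive(1, 1, [(102, 97)])                # buffer 1 arrives first
print(basket.next_request(1))                    # 0: buffer 0 still missing
basket.receive(1, 0, [(100, 101), (101, 99)])
basket.receive(1, 0, [(100, 101), (101, 99)])    # duplicate, no effect
basket.receive(2, 0, [(100, 104)])
print(basket.next_request(1))                    # 2
print(basket.day_summary(100, 3), end="")
```
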
The data are encoded in CSV format, which is easy to load into a spreadsheet or read with a program. Because some eggs may not report immediately due to Internet outages or other problems, the summary data report is regenerated two days later to capture late-arriving data. You can request custom data reports for your own analysis from the Custom Data Request page. If you are interested in doing your own exploratory analysis of the Global Consciousness Project data set, you may find my EGGSHELL C++ libraries useful.

The analysis performed by the Project proceeds from these summary files as follows. First, we observe that each sample ($x_i$) from egg $i$ consists of 200 bits with an expected equal probability of being zero or one. Thus each sample has a mean expectation value ($\mu$) of 100 and a standard deviation ($\sigma$) of 7.071: for $n = 200$ bits with $p = 0.5$, $\mu = np = 100$ and $\sigma = \sqrt{np(1-p)} = \sqrt{50} \approx 7.071$, which is just the square root of half the mean value in the case of events with probability 0.5.
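The sampling step and the expected statistics above can be sketched as follows: count the one bits in the 25 bytes read each second, then compare stand-in data against the binomial expectation of a mean of 100 and a standard deviation of √50. Python's `random` module stands in for a hardware REG here, which is an assumption made purely for illustration.

```python
import math
import random

BYTES_PER_SAMPLE = 25                  # 25 bytes x 8 bits = 200 bits
BITS_PER_SAMPLE = 8 * BYTES_PER_SAMPLE


def sample_from_bytes(data):
    """Count the one bits in the 25 bytes read at the start of a second."""
    if len(data) != BYTES_PER_SAMPLE:
        raise ValueError("expected 25 bytes")
    return sum(bin(b).count("1") for b in data)


# Chance expectation for 200 fair bits (binomial with p = 0.5).
MU = BITS_PER_SAMPLE * 0.5                       # 100.0
SIGMA = math.sqrt(BITS_PER_SAMPLE * 0.5 * 0.5)   # sqrt(50), about 7.071

print(sample_from_bytes(bytes([0xFF] * 12 + [0x0F] + [0x00] * 12)))  # 100
print(MU, round(SIGMA, 3))                                           # 100.0 7.071

# Pseudorandom bytes stand in for a hardware REG in this illustration.
rng = random.Random(1)
samples = [
    sample_from_bytes(bytes(rng.getrandbits(8) for _ in range(BYTES_PER_SAMPLE)))
    for _ in range(20000)
]
mean = sum(samples) / len(samples)
sd = math.sqrt(sum((x - mean) ** 2 for x in samples) / (len(samples) - 1))
print(round(mean, 2), round(sd, 2))  # close to 100 and 7.07
```
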

Then, for each sample, we can compute its Z-score as $Z_i = (x_i - \mu)/\sigma$. From the Z-score, it is possible to directly compute the probability that the observed deviation from the expected mean value ($\mu$) was due to chance.
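Converting a single sample into a Z-score and a chance probability takes one line each with the standard normal tail function, computed here via `math.erfc`. Whether a one- or two-tailed probability is wanted depends on the hypothesis being tested; the text does not say which the project uses for individual samples, so this sketch shows both.

```python
import math

MU, SIGMA = 100.0, math.sqrt(50.0)   # chance expectation for a 200-bit sample


def z_score(sample, mu=MU, sigma=SIGMA):
    """Standardised deviation of one sample from chance expectation."""
    return (sample - mu) / sigma


def p_two_tailed(z):
    """Chance probability of a deviation at least |z| in either direction."""
    return math.erfc(abs(z) / math.sqrt(2.0))


def p_one_tailed(z):
    """Chance probability of a deviation at least z in the positive direction."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


z = z_score(110)   # a sample of 110 ones out of 200 bits
print(round(z, 3), round(p_two_tailed(z), 4), round(p_one_tailed(z), 4))
# 1.414 0.1573 0.0786
```
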

It is now possible to compute a network-wide Z-score for all eggs reporting samples in that second using Stouffer's formula:

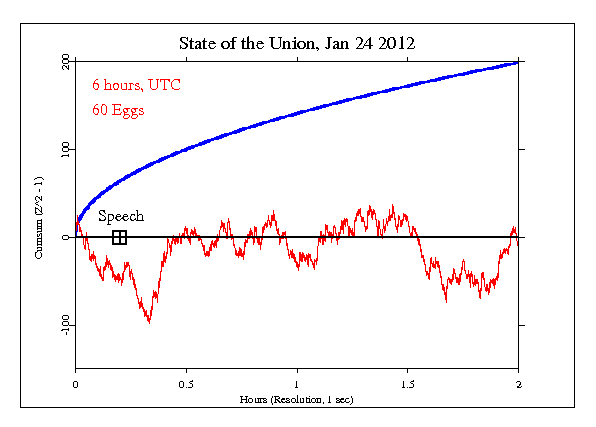

over all $k$ eggs reporting. From this, one can compute the probability that the result from all $k$ eggs reporting in that second was due to chance. Squaring this composite Z-score over all $k$ eggs gives a chi-squared distributed value we shall call $V$, $V = Z^2$, which has one degree of freedom. These values may be summed, yielding a chi-squared distributed number with degrees of freedom equal to the number of values summed. From the chi-squared sum and number of degrees of freedom, the probability of the result over an entire period may be computed. This gives the probability that the deviation observed by all the eggs (the number of which may vary from second to second) over the selected window was due to chance. In most of the analyses of Global Consciousness Project data an analysis window of one second is used, which avoids the need for the chi-squared summing of Z-scores across multiple seconds.

The most common way to visualise these data is a “cumulative deviation plot” in which the squared Z-scores, less their chance expectation of one, are summed to show the cumulative deviation from chance expectation over time. These plots are usually accompanied by a curve which shows the boundary for a chance probability of 0.05, or one in twenty, which is often used as a criterion for significance. Here is such a plot for U.S. president Obama's 2012 State of the Union address, an event of ephemeral significance which few people anticipated and even fewer remember.

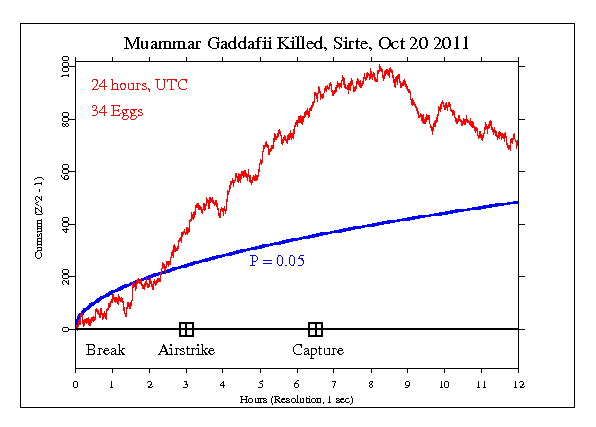
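For comparison with a plot like this one, here is a minimal sketch of the per-second network statistic and a cumulative deviation trace computed from purely random stand-in data: the Stouffer composite $Z = \sum_i Z_i / \sqrt{k}$ over the $k$ eggs reporting each second, $V = Z^2$, and a running sum of $V - 1$, which wanders around zero when nothing but chance is at work. The egg count, duration, and seed are arbitrary choices, and the chi-squared tail probability uses the Wilson–Hilferty normal approximation, a convenience for large degrees of freedom swapped in here, not necessarily what the project uses.

```python
import math
import random


def stouffer(zs):
    """Composite Z over the k eggs reporting in one second: sum(Z_i) / sqrt(k)."""
    zs = list(zs)
    return sum(zs) / math.sqrt(len(zs))


def cumulative_deviation(network_zs):
    """Running sum of V - 1, where V = Z**2 has a chance expectation of 1."""
    total, path = 0.0, []
    for z in network_zs:
        total += z * z - 1.0
        path.append(total)
    return path


def chi2_sf_wilson_hilferty(x, df):
    """Approximate P(chi-squared with df degrees of freedom >= x).

    Wilson-Hilferty cube-root normal approximation; good for large df.
    """
    v = 2.0 / (9.0 * df)
    t = ((x / df) ** (1.0 / 3.0) - (1.0 - v)) / math.sqrt(v)
    return 0.5 * math.erfc(t / math.sqrt(2.0))


# Null data: one hour of seconds, 60 eggs each second, every sample the
# number of one bits in 200 pseudorandom bits (a stand-in for real REGs).
rng = random.Random(2012)
mu, sigma = 100.0, math.sqrt(50.0)
network = []
for _ in range(3600):
    samples = [bin(rng.getrandbits(200)).count("1") for _ in range(60)]
    network.append(stouffer((x - mu) / sigma for x in samples))

path = cumulative_deviation(network)
chi2 = sum(z * z for z in network)      # chi-squared with 3600 df under chance
p = chi2_sf_wilson_hilferty(chi2, len(network))
print(f"final cumulative deviation {path[-1]:.1f}")
print(f"chi-squared {chi2:.1f} on {len(network)} df, p about {p:.3f}")
```
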

What we see here is precisely what you'd expect for purely random data without any divergence from random expectation. The cumulative deviation wanders around the expectation value of zero in a “random walk” without any obvious trend and never approaches the threshold of significance. So do all of our plots look like this (which is what you'd expect)? Well, not exactly. Now let's look at an event which was unexpected and garnered much more worldwide attention: the death of Muammar Gadaffi (or however you choose to spell it) on 2011-10-20.

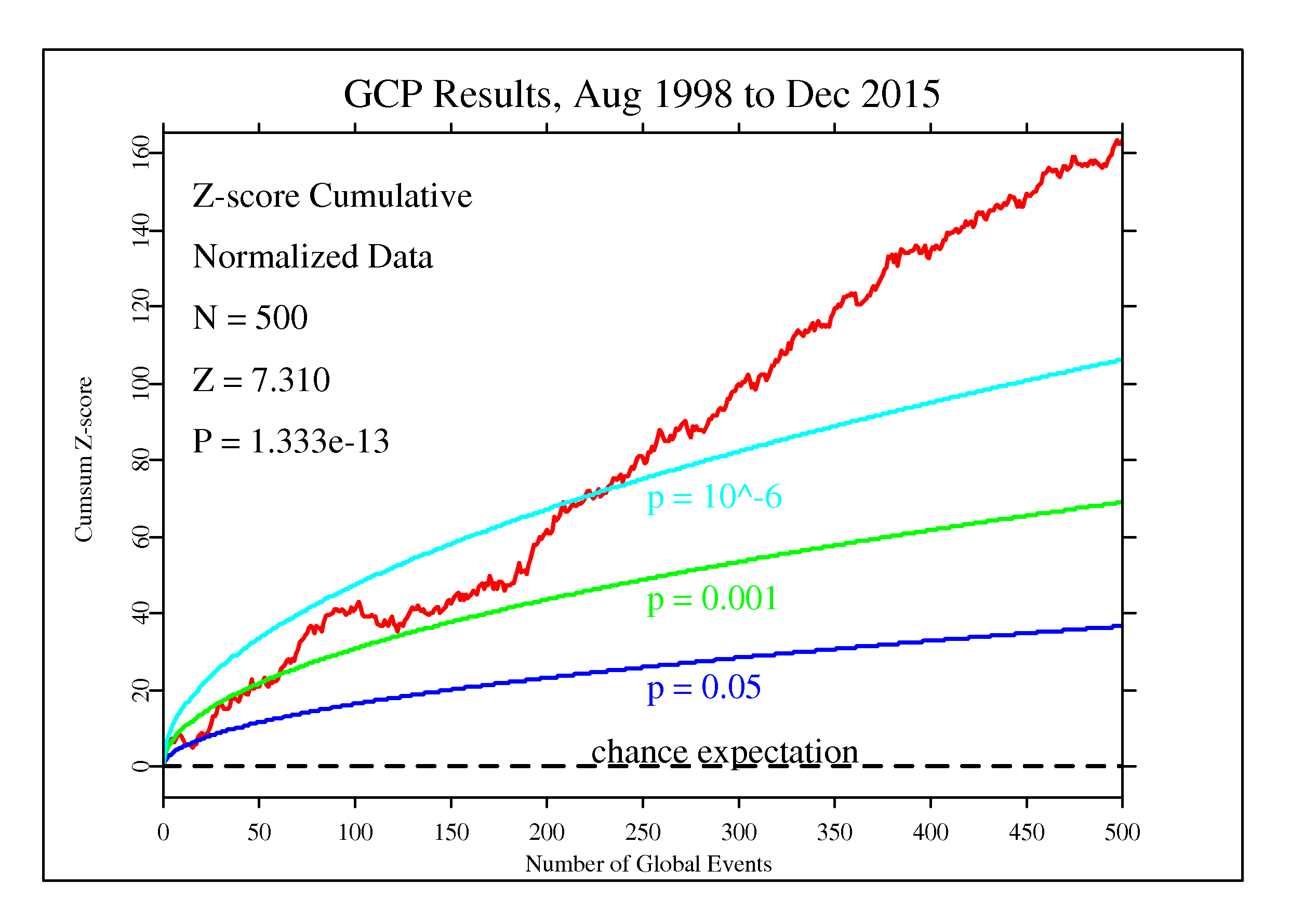

Now we see the cumulative deviation taking off, blowing right through the criterion of significance, and ending twelve hours later with a Z-score of 2.38 and a probability of the result being due to chance of one in 111. What's going on here? How could an event which engages the minds of billions of slightly-evolved apes affect the output of random event generators driven by quantum processes believed to be inherently random? Hypotheses non fingo. All right, I'll fingo just a little bit and suggest that my crackpot theory of paranormal phenomena might be in play here. But the real test is not in potentially cherry-picked events such as I've shown you here, but the accumulation of evidence over almost two decades. Each event has been the subject of a formal prediction, recorded in a Hypothesis Registry before the data were examined. (Some of these events were predicted well in advance [for example, New Year's Day celebrations or solar eclipses], while others, such as terrorist attacks or earthquakes, could be defined only after the fact.) The significance of the entire ensemble of tests can be computed from the network's results for the periods in which the 500 formal predictions in the Hypothesis Registry predicted a non-random effect. To compute this effect, we take the formal predictions and compute a cumulative Z-score across the events. Here's what you get.
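The cumulative Z-score across formal events can be sketched as a running composite. The text does not spell out the combining rule, so this assumes each event's result is expressed as a Z-score and the events are combined by Stouffer's method, Z = ΣZᵢ/√N after N events. The event values below are made up for illustration and are not project data.

```python
import math
from itertools import accumulate

def cumulative_stouffer(event_z):
    """Running composite Z after each event: (sum of Z_i so far) / sqrt(N)."""
    return [s / math.sqrt(n) for n, s in enumerate(accumulate(event_z), start=1)]

# Hypothetical per-event Z-scores (illustrative only, not project data).
events = [0.5, -0.3, 1.2, 0.8, 0.1]
for n, z in enumerate(cumulative_stouffer(events), start=1):
    print(n, round(z, 3))
```

Because the sum grows like N while the divisor grows like √N, even a small but consistent per-event bias pushes the composite steadily upward as events accumulate.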

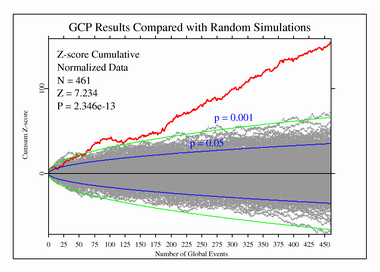

Now this is…interesting. Here, summing over 500 formal predictions, we have a Z-score of 7.31, which implies that the probability of the observed results being due to chance is less than one in a trillion. This is far beyond the five-sigma criterion usually required to claim a discovery in physics. And yet, what we have here is a tiny effect. But could such a result arise in truly random data? To check this, we compare the results from the network for the events in the Hypothesis Registry with 500 simulated runs using data from a pseudorandom normal distribution.
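A quick check of the arithmetic behind “less than one in a trillion” and “a tiny effect”: the one-tailed normal probability for Z = 7.31, and the average per-event Z that would produce that composite over 500 events if the events are combined by Stouffer's method (an assumption; the text does not name the rule). The null comparison is simplified: each of 500 simulated runs draws 500 per-event Z-scores from Python's pseudorandom normal generator, which may differ from how the project produced its simulated runs.

```python
import math
import random

def one_tailed_p(z):
    """Probability that a standard normal variable is at least z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

n_events, z_total = 500, 7.31
print(f"p = {one_tailed_p(z_total):.2e}")  # on the order of 1e-13
print(f"mean Z per event = {z_total / math.sqrt(n_events):.3f}")  # 0.327

# Null comparison: 500 simulated runs of 500 pseudorandom events each.
rng = random.Random(1998)
null_z = [sum(rng.gauss(0.0, 1.0) for _ in range(n_events)) / math.sqrt(n_events)
          for _ in range(500)]
print(f"largest simulated composite Z: {max(null_z):.2f}")
```

An average shift of about a third of a standard deviation per event is invisible in any single event, yet it accumulates into an overwhelming composite.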

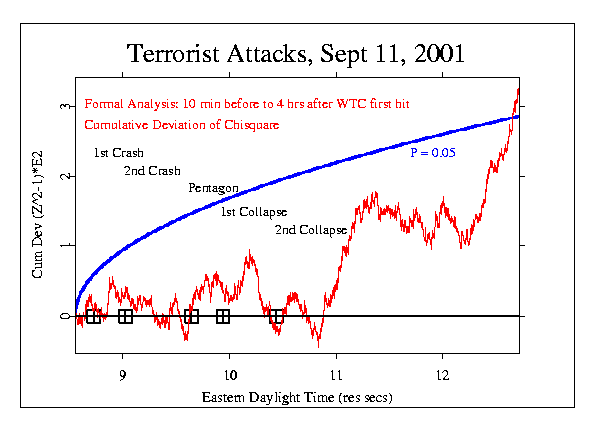

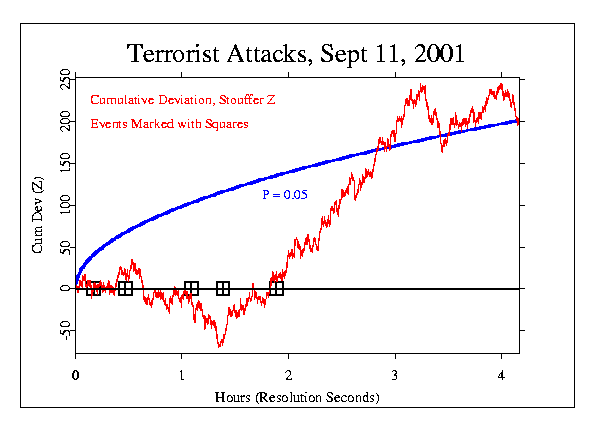

Since the network has been up and running continuously since 1998, it was in operation on September 11, 2001, when a mass-casualty terrorist attack occurred in the United States. The formally recorded prediction for this event was an elevated network variance in the period starting 10 minutes before the first plane crashed into the World Trade Center and extending for over four hours afterward (from 08:35 through 12:45 Eastern Daylight Time). There were 37 eggs reporting that day (around half the size of the fully built-out network at its largest). Here is a chart of the cumulative deviation of chi-square for that period.

The final probability was 0.028, which is equivalent to an odds ratio of 35 to one against chance. This is not a particularly significant result, but it met the pre-specified criterion of significance of probability less than 0.05. An alternative way of looking at the data is to plot the cumulative Z-score, which shows both the direction of the deviations from expectation for randomness as well as their magnitude, and can serve as a measure of correlation among the eggs (which should not exist in genuinely random data). This and subsequent analyses did not contribute to the formal database of results from which the overall significance figures were calculated, but are rather exploratory analyses of the data to see if other interesting patterns might be present.

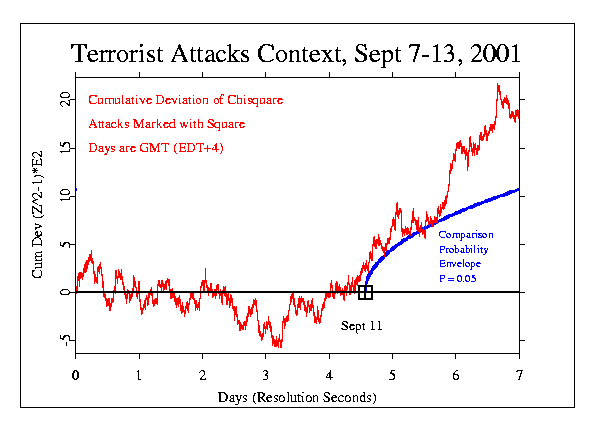

Had this form of analysis and time window been chosen a priori, it would have been calculated to have a chance probability of 0.000075, or less than one in ten thousand. Now let's look at a week-long window of time between September 7 and 13. The time of the September 11 attacks is marked by the black box. We use the cumulative deviation of chi-square from the formal analysis and start the plot of the P=0.05 envelope at that time.

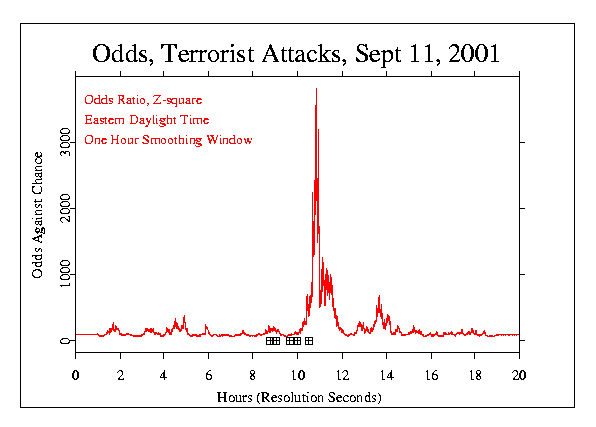

Another analysis looks at a 20-hour period centred on the attacks and smooths the Z-scores by averaging them within a one-hour sliding window, then squares the average and converts it to odds against chance.
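The one-hour smoothing can be sketched as a running window over per-second composite Z-scores. The text says only that the Z-scores are averaged, squared, and converted to odds; this sketch assumes the mean is rescaled by √n so that it is standard normal under chance before squaring, treats the square as chi-squared with one degree of freedom, and reports odds against chance as (1 − p)/p. The function names are hypothetical, and successive windows overlap heavily, so their odds are not independent.

```python
import math
from collections import deque

def odds_against(p):
    """Odds against chance for a probability p: (1 - p) / p."""
    return (1.0 - p) / p if p > 0 else math.inf

def sliding_window_odds(z_scores, window=3600):
    """For each full window of per-second composite Z-scores: average them,
    rescale the mean to a standard normal Z (mean * sqrt(window)), square it
    (chi-squared, 1 df), and convert the tail probability to odds."""
    buf, total, odds = deque(), 0.0, []
    for z in z_scores:
        buf.append(z)
        total += z
        if len(buf) > window:
            total -= buf.popleft()          # drop the oldest second
        if len(buf) == window:
            chi2 = (total / window * math.sqrt(window)) ** 2
            p = math.erfc(math.sqrt(chi2 / 2.0))  # upper tail, 1 degree of freedom
            odds.append(odds_against(p))
    return odds

print(round(odds_against(0.028), 1))  # 34.7
```

The final line applies the same conversion to the formal September 11 result quoted above: p = 0.028 gives odds of about 34.7 to one, consistent with the rounded 35 to one.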

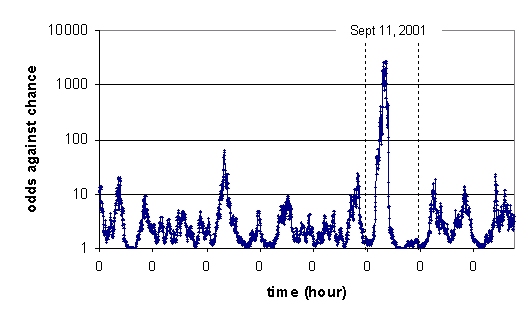

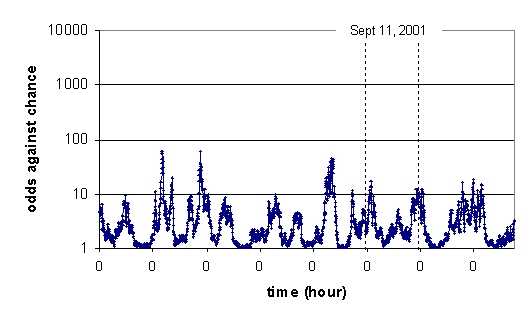

Dean Radin performed an independent analysis of the data, binning Z-scores into five-minute intervals over the period from September 6 to 13 and then calculating the odds against the result being a random fluctuation. This is plotted on a logarithmic scale of odds against chance, with each 0 on the X axis denoting midnight of each day.
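A binned analysis in the spirit of Radin's can be sketched in the same way. His exact method is not described here, so this assumes five-minute (300-second) bins of per-second composite Z-scores combined by Stouffer's method, a two-tailed probability for each bin, and log₁₀ of the odds against chance to match a logarithmic plot. A partial bin at the end of the series is dropped, and the function name is hypothetical.

```python
import math

def binned_log_odds(z_scores, bin_seconds=300):
    """Group per-second Z-scores into consecutive bins (default five minutes),
    combine each bin with Stouffer's method, and return log10 of the odds
    against chance for a deviation at least that large in either direction."""
    out = []
    for start in range(0, len(z_scores) - bin_seconds + 1, bin_seconds):
        chunk = z_scores[start:start + bin_seconds]
        z = sum(chunk) / math.sqrt(len(chunk))
        p = math.erfc(abs(z) / math.sqrt(2.0))   # two-tailed probability
        if p <= 0.0:
            out.append(math.inf)                 # beyond floating-point resolution
        elif p >= 1.0:
            out.append(-math.inf)                # no deviation at all
        else:
            out.append(math.log10((1.0 - p) / p))
    return out
```

A week of one-second data yields 2016 five-minute bins, enough for the daily structure to show up on a plot.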

The following is the result when the actual GCP data from September 2001 are replaced with pseudorandom data for the same period.

So, what are we to make of all this? That depends upon what you, and I, and everybody else make of this large body of publicly-available, transparently-collected data assembled over more than twenty years from dozens of independently-operated sites all over the world. I don't know about you, but I find it darned intriguing. Having been involved in the project since its very early days and seen all of the software used in data collection and archiving with my own eyes, I have complete confidence in the integrity of the data and the people involved with the project. The individual random event generators pass exhaustive randomness tests. When control runs are made by substituting data for the periods predicted in the formal tests with data collected at other randomly selected intervals from the actual physical network, the observed deviations from randomness go away, and the same happens when network data are replaced by computer-generated pseudorandom data. The statistics used in the formal analysis are all simple matters you'll learn in an introductory stat class and are explained in my “Introduction to Probability and Statistics”. If you're interested in exploring further, Roger Nelson's book is an excellent introduction to the rationale and history of the project, how it works, and a look at the principal results and what they might mean. There is also non-formal exploration of other possible effects, such as attenuation by distance, day and night sleep cycles, and effect sizes for different categories of events. There's also quite a bit of New Age stuff which makes my engineer's eyes glaze over, but it doesn't detract from the rigorous information elsewhere. The ultimate resource is the Global Consciousness Project's sprawling and detailed Web site. Although well-designed, the site can be somewhat intimidating due to its sheer size. 
You can find historical documents, complete access to the full database, analyses of events, and even the complete source code for the egg and basket programs. A Kindle edition is available. All graphs in this article are as posted on the Global Consciousness Project Web site.
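One way to organize a control run like those described in the review is to compare the predicted window against many windows of the same length placed at random elsewhere in the record. This sketch assumes a single series of per-second composite Z-scores and uses Stouffer's composite Z as the test statistic. It returns the fraction of random windows scoring at least as high as the predicted one, an empirical probability that should be large when the predicted window is unremarkable. The function names are hypothetical.

```python
import math
import random

def window_stat(z_scores, start, length):
    """Composite (Stouffer) Z for a window of per-second Z-scores."""
    chunk = z_scores[start:start + length]
    return sum(chunk) / math.sqrt(length)

def control_fraction(z_scores, start, length, trials=1000, seed=0):
    """Fraction of randomly placed windows of the same length whose composite Z
    is at least as large as that of the predicted window."""
    rng = random.Random(seed)
    observed = window_stat(z_scores, start, length)
    hits = 0
    for _ in range(trials):
        s = rng.randrange(0, len(z_scores) - length + 1)
        if window_stat(z_scores, s, length) >= observed:
            hits += 1
    return hits / trials

# Usage: a flat record with a deliberate bump at seconds 50 to 59.
z = [0.0] * 100
z[50:60] = [3.0] * 10
print(control_fraction(z, 50, 10))
```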

- Corcoran, Travis J. I. The Powers of the Earth. New Hampshire: Morlock Publishing, 2017. ISBN 978-1-9733-1114-0.

Corcoran, Travis J. I. Causes of Separation. New Hampshire: Morlock Publishing, 2018. ISBN 978-1-9804-3744-4.

- (Note: This novel is the first of an envisioned four-volume series titled Aristillus. It and the second book, Causes of Separation, published in May 2018, together tell a single story which reaches a decisive moment just as the first book ends. Unusually, this will be a review of both novels, taken as a whole. If you like this kind of story at all, there's no way you won't immediately plunge into the second book after setting down the first.) Around the year 2050, collectivists were firmly in power everywhere on Earth. Nations were subordinated to the United Nations, whose force of Peace Keepers (PKs) had absorbed all but elite special forces, and were known for being simultaneously brutal, corrupt, and incompetent. (Due to the equality laws, military units had to contain a quota of “Alternatively Abled Soldiers” whom other troops had to wheel into combat.) The United States still existed as a country, but after decades of rule by two factions of the Democrat party, Populist and Internationalist, it was mired in stagnation, bureaucracy, and crumbling infrastructure, and was on the verge of bankruptcy. The U.S. President, Themba Johnson, a former talk show host who combined cluelessness, a volatile temper, and vulpine cunning when it came to manipulating public opinion, is confronted with all of these problems and looking for a masterstroke to get beyond the next election.
Around 2050, when the collectivists entered the inevitable end game their policies lead to everywhere they are tried, with the Bureau of Sustainable Research (BuSuR) suppressing new technologies in every field and the Construction Jobs Preservation Act and Bureau of Industrial Planning banning anything which might increase productivity, a final grasp to loot the remaining seed corn resulted in the CEO Trials aimed at the few remaining successful companies, with expropriation of their assets and imprisonment of their leaders. CEO Mike Martin manages to escape from prison and link up with renegade physicist Ponnala (“Ponzie”) Srinivas, inventor of an anti-gravity drive he doesn't want the slavers to control. Mike buys a rustbucket oceangoing cargo ship, equips it with the drive, an airtight compartment and life support, and flees Earth with a cargo of tunnel boring machines and water to exile on the Moon, in the crater Aristillus in Mare Imbrium on the lunar near side where, fortuitously, the impact of a metal-rich asteroid millions of years ago enriched the sub-surface with metals rare in the Moon's crust. Let me say a few words about the anti-gravity drive, which is very unusual and original, and whose properties play a significant role in the story. The drive works by coupling to the gravitational field of a massive body and then pushing against it, expending energy as it rises and gains gravitational potential energy. Momentum is conserved, as an equal and opposite force is exerted on the massive body against which it is pushing. The force vector is always along the line connecting the centre of mass of the massive body and the drive unit, directed away from the centre of mass. The force is proportional to the strength of the gravitational field in which the drive is operating, and hence stronger when pushing against a body like Earth as opposed to a less massive one like the Moon. 
The drive's force diminishes with distance from the massive body as its gravitational field falls off with the inverse square law, and hence the drive generates essentially no force when in empty space far from a gravitating body. When used to brake a descent toward a massive body, the drive converts gravitational potential energy into electricity like the regenerative braking system of an electric vehicle: energy which can be stored for use when later leaving the body. Because the drive can only push outward radially, when used to, say, launch from the Earth to the Moon, it is much like Jules Verne's giant cannon—the launch must occur at the latitude and longitude on Earth where the Moon will be directly overhead at the time the ship arrives at the Moon. In practice, the converted ships also carried auxiliary chemical rockets and reaction control thrusters for trajectory corrections and precision maneuvering which could not be accomplished with the anti-gravity drive. By 2064, the lunar settlement, called Aristillus by its inhabitants, was thriving, with more than a hundred thousand residents, and growing at almost twenty percent a year. (Well, nobody knew for sure, because from the start the outlook shared by the settlers was aligned with Mike Martin's anarcho-capitalist worldview. There was no government, no taxes, no ID cards, no business licenses, no regulations, no zoning [except covenants imposed by property owners on those who sub-leased property from them], no central bank, no paper money [an entrepreneur had found a vein of gold left by the ancient impactor and gone into business providing hard currency], no elections, no politicians, no forms to fill out, no police, and no army.) Some of these “features” of life on grey, regimented Earth were provided by private firms, while many of the others were found to be unnecessary altogether. The community prospered as it grew. 
As in many frontier settlements, labour was in chronic short supply, and even with the workforce augmented by robot rovers and machines (free of the yoke of BuSuR), there was work for anybody who wanted it and job offers awaiting new arrivals. A fleet of privately operated ships maintained a clandestine trade with Earth, bringing goods which couldn't yet be produced on the Moon, atmosphere, water from the oceans (in converted tanker ships), and new immigrants who had sold their Earthly goods and quit the slave planet. Waves of immigrants from blood-soaked Nigeria and chaotic China established their own communities and neighbourhoods in the ever-growing network of tunnels beneath Aristillus. The Moon has not just become a refuge for humans. When BuSuR put its boot on the neck of technology, it ordered the shutdown of a project to genetically “uplift” dogs to human intelligence and beyond, creating “Dogs” (the capital letter denoting the uplift), and ordered all existing Dogs to be euthanised. Many were, but John (we never learn his last name), a former U.S. Special Forces operator, manages to rescue a colony of Dogs from one of the labs before the killers arrive and escape with them to Aristillus, where they have set up the Den and engage in their own priorities, including role-playing games, software development, and trading on the betting markets. Also rescued by John was Gamma, the first Artificial General Intelligence to be created, whose intelligence is above the human level but not (yet, anyway) at the transcendent level of a runaway singularity. Gamma has established itself in its own facility in Sinus Lunicus on the other side of Mare Imbrium, and has little contact with the human or Dog settlers. Inevitably, liberty produces prosperity, and prosperity eventually causes slavers to regard the free with envious eyes and slowly and surely draw their plans against them.
This is the story of the first interplanetary conflict, and a rousing tale of liberty versus tyranny, frontier innovation against collectivised incompetence, and principles (there is even the intervention of a Vatican diplomat) confronting brutal expedience. There are delicious side-stories about the creation of fake news, scheming politicians, would-be politicians in a libertarian paradise, open source technology, treachery, redemption, and heroism. How do three distinct species (human, Dog, and AI) work together without a top-down structure or subordinating one to another? Can the lunar colony protect itself without becoming what its settlers left Earth to escape? Woven into the story is a look at how a libertarian society works (and sometimes doesn't work) in practice. Aristillus is in no sense a utopia: it has plenty of rough edges and things to criticise. But people there are free, and they prefer it to the prison planet they escaped. This is a wonderful, sprawling, action-packed story with interesting characters, complicated conflicts, and realistic treatment of what a small colony faces when confronted by a hostile planet of nine billion slaves. Think of this as Heinlein's The Moon is a Harsh Mistress done better. There are generous tips of the hat to Heinlein and other science fiction in the book, but this is a very different story with an entirely different outcome, and truer to the principles of individualism and liberty. I devoured these books and give them my highest recommendation. The Powers of the Earth won the 2018 Prometheus Award for best libertarian science fiction novel.

- Coppley, Jackson. The Code Hunters. Chevy Chase, MD: Contour Press, 2019. ISBN 978-1-09-107011-0.

- A team of expert cavers exploring a challenging cave in New Mexico in search of a possible connection to Carlsbad Caverns tumble into a chamber deep underground containing something which just shouldn't be there: a huge slab of metal, like titanium, twenty-four feet square and eight inches thick, set into the rock of the cave, bearing markings which resemble the pits and lands on an optical storage disc. No evidence for human presence in the cave prior to the discoverers is found, and dating confirms that the slab is at least ten thousand years old. There is no way an object that large could be brought through the cramped and twisting passages of the cave to the chamber where it was found. Wealthy adventurer Nicholas Foxe, with degrees in archaeology and cryptography, gets wind of the discovery and pulls strings to get access to the cave, putting together a research program to try to understand the origin of the slab and decode its enigmatic inscription. But as news of the discovery reaches others, they begin to pursue their own priorities. A New Mexico senator sends his on-the-make assistant to find out what is going on and see how it might be exploited to his advantage. An ex-Army special forces operator makes stealthy plans. An MIT string theorist with a wide range of interests begins exploring unorthodox ideas about how the inscriptions might be encoded. A televangelist facing hard times sees the Tablet as the way back to the top of the heap. A wealthy Texan sees the potential in the slab for wealth beyond his abundant dreams of avarice. As the adventure unfolds, we encounter a panoply of fascinating characters: a World Health Organization scientist, an Italian violin maker with an eccentric theory of language and his autistic daughter, and a “just the facts” police inspector. 
As clues are teased from the enigma, we visit exotic locations and experience harrowing adventure, finally grasping the significance of a discovery that bears on the very origin of modern humans. About now, you might be thinking “This sounds like a Dan Brown novel”, and in a sense you'd be right. But this is the kind of story Dan Brown would craft if he were a much better author than he is: whereas Dan Brown books have become stereotypes of cardboard characters and fill-in-the-blanks plots with pseudo-scientific bafflegab stirred into the mix (see my review of Origin [May 2018]), this is a gripping tale filled with complex, quirky characters, unexpected plot twists, beautifully sketched locales, and a growing sense of wonder as the significance of the discovery is grasped. If anybody in Hollywood had any sense (yes, I know…), they would make this into a movie instead of doing another tedious Dan Brown sequel. This is subtitled “A Nicholas Foxe Adventure”: I sincerely hope there will be more to come. The author kindly let me read a pre-publication manuscript of this novel. The Kindle edition is free to Kindle Unlimited subscribers.

May 2019

- Smolin, Lee. Einstein's Unfinished Revolution. New York: Penguin Press, 2019. ISBN 978-1-59420-619-1.

- In the closing years of the nineteenth century, one of those nagging little discrepancies vexing physicists was the behaviour of the photoelectric effect. Originally discovered in 1887, the phenomenon causes certain metals, when illuminated by light, to absorb the light and emit electrons. The perplexing point was that there was a maximum wavelength (colour of light) for electron emission: for longer wavelengths, no electrons would be emitted at all, regardless of the intensity of the beam of light. For example, a certain metal might emit electrons when illuminated by green, blue, violet, and ultraviolet light, with the intensity of electron emission proportional to the light intensity, but red or yellow light, regardless of how intense, would not result in a single electron being emitted.

This didn't make any sense. According to Maxwell's wave theory of light, which was almost universally accepted and had passed stringent experimental tests, the energy of light depended upon the amplitude of the wave (its intensity), not the wavelength (or, reciprocally, its frequency). And yet the photoelectric effect didn't behave that way: it appeared that whatever was causing the electrons to be emitted depended on the wavelength of the light, and what's more, there was a sharp cut-off in frequency below which no electrons would be emitted at all.

In 1905, in one of his “miracle year” papers, “On a Heuristic Viewpoint Concerning the Production and Transformation of Light”, Albert Einstein suggested a solution to the puzzle. He argued that light did not propagate as a wave at all, but rather in discrete particles, or “quanta”, later named “photons”, whose energy was proportional to the frequency of the light (and hence inversely proportional to its wavelength). This neatly explained the behaviour of the photoelectric effect. Light with a wavelength longer than the cut-off point was transmitted by photons whose energy was too low to knock electrons out of the metal they illuminated, while photons above the energy threshold could liberate electrons. The intensity of the light was a measure of the number of photons in the beam, unrelated to the energy of the individual photons.
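Einstein's relation can be made concrete with E = hf = hc/λ, using hc ≈ 1239.84 eV·nm. The sketch below assumes an illustrative work function (the minimum energy needed to free an electron from the metal) of 2.3 eV; real metals differ, and the wavelength chosen for each colour is approximate. Intensity appears nowhere: a brighter beam only means more photons of the same energy.

```python
H_C_EV_NM = 1239.84  # Planck's constant times the speed of light, in eV·nm

def photon_energy_ev(wavelength_nm):
    """E = h*f = h*c / wavelength: energy rises with frequency, falls with wavelength."""
    return H_C_EV_NM / wavelength_nm

def emits_electrons(wavelength_nm, work_function_ev):
    """An electron is freed only if a single photon carries at least the work function."""
    return photon_energy_ev(wavelength_nm) >= work_function_ev

WORK_FUNCTION_EV = 2.3                     # illustrative value, an assumption
cutoff_nm = H_C_EV_NM / WORK_FUNCTION_EV   # longest wavelength that still emits

for name, nm in [("red", 650), ("yellow", 580), ("green", 520), ("blue", 470), ("violet", 410)]:
    energy = photon_energy_ev(nm)
    print(f"{name:6s} {nm} nm  {energy:.2f} eV  emits: {emits_electrons(nm, WORK_FUNCTION_EV)}")
print(f"cut-off wavelength ≈ {cutoff_nm:.0f} nm")
```

With this assumed work function, red and yellow fall short while green, blue, and violet exceed the threshold, matching the pattern described for the hypothetical metal.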

This paper became one of the cornerstones of the revolutionary theory of quantum mechanics, the complete working out of which occupied much of the twentieth century. Quantum mechanics underlies the standard model of particle physics, which is arguably the most thoroughly tested theory in the history of physics, with no experiment showing results which contradict its predictions since it was formulated in the 1970s. Quantum mechanics is necessary to explain the operation of the electronic and optoelectronic devices upon which our modern computing and communication infrastructure is built, and describes every aspect of physical chemistry.

But quantum mechanics is weird. Consider: if light consists of little particles, like bullets, then why, when you shine a beam of light on a barrier with two slits, do you get an interference pattern with bright and dark bands precisely as you get with, say, water waves? And if you send a single photon at a time and try to measure which slit it went through, you find it always went through one or the other, but then the interference pattern goes away.

It seems like whether the photon behaves as a wave or a particle depends upon how you look at it. If you have an hour, here is grand master explainer Richard Feynman (who won his own Nobel Prize in 1965 for reconciling the quantum mechanical theory of light and the electron with Einstein's special relativity) exploring how profoundly weird the double slit experiment is.
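The difference between the two cases can be expressed in a few lines: with no which-path information, the complex amplitudes from the two slits add before squaring, producing bright and dark bands; when the path is known, the probabilities add instead, and the bands disappear. The slit separation, wavelength, and screen distance below are hypothetical values, and the small-angle approximation is assumed.

```python
import cmath
import math

def two_slit_intensity(x, which_path_known, wavelength=500e-9, slit_sep=50e-6, screen_dist=1.0):
    """Relative detection probability at screen position x (metres) for two
    equal slits. Without which-path information the complex amplitudes add,
    giving interference; with it, the probabilities add and the fringes vanish."""
    # Phase difference from the path-length difference d * x / L (small angles)
    phase = 2 * math.pi * slit_sep * x / (wavelength * screen_dist)
    a1 = 1 / math.sqrt(2)
    a2 = cmath.exp(1j * phase) / math.sqrt(2)
    if which_path_known:
        return abs(a1) ** 2 + abs(a2) ** 2   # probabilities add: flat, no bands
    return abs(a1 + a2) ** 2                 # amplitudes add: 1 + cos(phase)

for mm in (0.0, 2.5, 5.0, 7.5, 10.0):
    x = mm / 1000
    print(f"{mm:5.1f} mm  no which-path: {two_slit_intensity(x, False):.3f}  "
          f"which-path known: {two_slit_intensity(x, True):.3f}")
```

With these assumed values the fringes repeat every λL/d = 10 mm, with a dark band at 5 mm that vanishes once the path is known.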

Fundamentally, quantum mechanics seems to violate the principle of realism, which the author defines as follows.

The belief that there is an objective physical world whose properties are independent of what human beings know or which experiments we choose to do. Realists also believe that there is no obstacle in principle to our obtaining complete knowledge of this world.

This has been part of the scientific worldview since antiquity, and yet quantum mechanics, confirmed by innumerable experiments, appears to indicate we must abandon it. Quantum mechanics says that what you observe depends on what you choose to measure; that there is an absolute limit upon the precision with which you can measure pairs of properties (for example position and momentum) set by the uncertainty principle; that it isn't possible to predict the outcome of experiments but only the probability among a variety of outcomes; and that particles which are widely separated in space and time but which have interacted in the past are entangled and display correlations which no classical mechanistic theory can explain (Einstein called the latter “spooky action at a distance”). Once again, all of these effects have been confirmed by precision experiments and are not fairy castles erected by theorists. From the formulation of the modern quantum theory in the 1920s, often called the Copenhagen interpretation after the location of the institute where one of its architects, Niels Bohr, worked, a number of eminent physicists including Einstein and Louis de Broglie were deeply disturbed by its apparent jettisoning of the principle of realism in favour of what they considered a quasi-mystical view in which the act of “measurement” (whatever that means) caused a physical change (wave function collapse) in the state of a system. This seemed to imply that the photon, or electron, or anything else, did not have a physical position until it interacted with something else: until then it was just an immaterial wave function which filled all of space and (when squared) gave the probability of finding it at that location. In 1927, de Broglie proposed a pilot wave theory as a realist alternative to the Copenhagen interpretation. In the pilot wave theory there is a real particle, which has a definite position and momentum at all times.
It is guided in its motion by a pilot wave which fills all of space and is defined by the medium through which it propagates. We cannot predict the exact outcome of measuring the particle because we cannot have infinitely precise knowledge of its initial position and momentum, but in principle these quantities exist and are real. There is no “measurement problem” because we always detect the particle, not the pilot wave which guides it. In its original formulation, the pilot wave theory exactly reproduced the predictions of the Copenhagen formulation, and hence was not a competing theory but rather an alternative interpretation of the equations of quantum mechanics. Many physicists who preferred to “shut up and calculate” considered interpretations a pointless exercise in phil-oss-o-phy, but de Broglie and Einstein placed great value on retaining the principle of realism as a cornerstone of theoretical physics. Lee Smolin sketches an alternative reality in which “all the bright, ambitious students flocked to Paris in the 1930s to follow de Broglie, and wrote textbooks on pilot wave theory, while Bohr became a footnote, disparaged for the obscurity of his unnecessary philosophy”. But that wasn't what happened: among those few physicists who pondered what the equations meant about how the world really works, the Copenhagen view remained dominant. In the 1950s, independently, David Bohm invented a pilot wave theory which he developed into a complete theory of nonrelativistic quantum mechanics. To this day, a small community of “Bohmians” continue to explore the implications of his theory, working on extending it to be compatible with special relativity. From a philosophical standpoint the de Broglie-Bohm theory is unsatisfying in that it involves a pilot wave which guides a particle, but upon which the particle does not act. This is an “unmoved mover”, which all of our experience of physics argues does not exist. 
For example, Newton's third law of motion holds that every action has an equal and opposite reaction, and in Einstein's general relativity, spacetime tells mass-energy how to move while mass-energy tells spacetime how to curve. It seems odd that the pilot wave could be immune from influence of the particle it guides. A few physicists, such as Jack Sarfatti, have proposed “post-quantum” extensions to Bohm's theory in which there is back-reaction from the particle on the pilot wave, and argue that this phenomenon might be accessible to experimental tests which would distinguish post-quantum phenomena from the predictions of orthodox quantum mechanics. A few non-physicist crackpots have suggested these phenomena might even explain flying saucers. Moving on from pilot wave theory, the author explores other attempts to create a realist interpretation of quantum mechanics: objective collapse of the wave function, as in the Penrose interpretation; the many worlds interpretation (which Smolin calls “magical realism”); and decoherence of the wavefunction due to interaction with the environment. He rejects all of them as unsatisfying, because they fail to address glaring lacunæ in quantum theory which are apparent from its very equations. The twentieth century gave us two pillars of theoretical physics: quantum mechanics and general relativity—Einstein's geometric theory of gravitation. Both have been tested to great precision, but they are fundamentally incompatible with one another. Quantum mechanics describes the very small: elementary particles, atoms, and molecules. General relativity describes the very large: stars, planets, galaxies, black holes, and the universe as a whole. In the middle, where we live our lives, neither much affects the things we observe, which is why their predictions seem counter-intuitive to us. But when you try to put the two theories together, to create a theory of quantum gravity, the pieces don't fit. 
Quantum mechanics assumes there is a universal clock which ticks at the same rate everywhere in the universe. But general relativity tells us this isn't so: a simple experiment shows that a clock runs slower when it's in a gravitational field. Quantum mechanics says that it isn't possible to determine the position of a particle without its interacting with another particle, but general relativity requires the knowledge of precise positions of particles to determine how spacetime curves and governs the trajectories of other particles. There are a multitude of more gnarly and technical problems in what Stephen Hawking called “consummating the fiery marriage between quantum mechanics and general relativity”. In particular, the equations of quantum mechanics are linear, which means you can add together two valid solutions and get another valid solution, while general relativity is nonlinear, where trying to disentangle the relationships of parts of the systems quickly goes pear-shaped and many of the mathematical tools physicists use to understand systems (in particular, perturbation theory) blow up in their faces. Ultimately, Smolin argues, giving up realism means abandoning what science is all about: figuring out what is really going on. The incompatibility of quantum mechanics and general relativity provides clues that there may be a deeper theory to which both are approximations that work in certain domains (just as Newtonian mechanics is an approximation of special relativity which works when velocities are much less than the speed of light). Many people have tried and failed to “quantise general relativity”. Smolin suggests the problem is that quantum theory itself is incomplete: there is a deeper theory, a realistic one, to which our existing theory is only an approximation which works in the present universe where spacetime is nearly flat. He suggests that candidate theories must contain a number of fundamental principles. 
They must be background independent, like general relativity, and discard such concepts as fixed space and a universal clock, making both dynamic and defined based upon the components of a system. Everything must be relational: there is no absolute space or time; everything is defined in relation to something else. Everything must have a cause, and there must be a chain of causation for every event which traces back to its causes; these causes flow only in one direction. There is reciprocity: any object which acts upon another object is acted upon by that object. Finally, there is the “identity of indiscernibles”: two objects which have exactly the same properties are the same object (this is a little tricky, but the idea is that if you cannot in some way distinguish two objects [for example, by their having different causes in their history], then they are the same object). This argues that the things we perceive, at the human scale and even in our particle physics experiments, as space and time are actually emergent properties of something deeper which was manifest in the early universe and in extreme conditions such as gravitational collapse to black holes, but hidden in the bland conditions which permit us to exist. Further, what we believe to be “laws” and “constants” may simply be precedents established by the universe as it tries to figure out how to handle novel circumstances. Just as complex systems like markets and evolution in ecosystems have rules that change based upon events within them, maybe the universe is “making it up as it goes along”, and in the early universe, far from today's near-equilibrium, wild and crazy things happened which may explain some of the puzzling properties of the universe we observe today. This needn't forever remain in the realm of speculation. It is easy, for example, to synthesise a protein which has never existed before in the universe (it's an example of a combinatorial explosion).
You might try, for example, to crystallise this novel protein and see how difficult it is, then try again later and see if the universe has learned how to do it. To be extra careful, do it first on the International Space Station and then in a lab on the Earth. I suggested this almost twenty years ago as a test of Rupert Sheldrake's theory of morphic resonance, but (although Smolin would doubtless shun me for associating his theory with that one) it might produce interesting results. The book concludes with a very personal look at the challenges facing a working scientist who has concluded that the paradigm accepted by the overwhelming majority of his or her peers is incomplete and cannot be remedied by incremental changes based upon the existing foundation. He notes:

There is no more reasonable bet than that our current knowledge is incomplete. In every era of the past our knowledge was incomplete; why should our period be any different? Certainly the puzzles we face are at least as formidable as any in the past. But almost nobody bets this way. This puzzles me.

Well, it doesn't puzzle me. Ever since I learned classical economics, I've learned always to look at the incentives in a system. When you regard academia today, there is huge risk and little reward in getting out a new notebook, looking at the first blank page, and striking out in an entirely new direction. Maybe if you were a twenty-something patent examiner in a small city in Switzerland in 1905 with no academic career or reputation at risk, you might go back to first principles and overturn space, time, and the wave theory of light all in one year, but today's institutional structure makes it almost impossible for a young researcher (and revolutionary ideas usually come from the young) to strike out in a new direction. It is a blessing that we have deep thinkers such as Lee Smolin setting aside the easy path to retirement to ask these deep questions today. Here is a lecture by the author at the Perimeter Institute about the topics discussed in the book. He concentrates mostly on the problems with quantum theory and not the speculative solutions discussed in the latter part of the book.

- Kotkin, Stephen. Stalin, Vol. 2: Waiting for Hitler, 1929–1941. New York: Penguin Press, 2017. ISBN 978-1-59420-380-0.

-

This is the second volume in the author's monumental projected

three-volume biography of Joseph Stalin. The first volume,

Stalin: Paradoxes of Power, 1878–1928

(December 2018) covers the period from Stalin's birth through

the consolidation of his sole power atop the Soviet state after

the death of Lenin. The third volume, which will cover the

period from the Nazi invasion of the Soviet Union in 1941 through

the death of Stalin in 1953, has yet to be published.

As this volume begins in 1928, Stalin is securely in the

supreme position of the Communist Party of the Soviet

Union, and having over the years staffed the senior ranks

of the party and the Soviet state (which the party operated

like the puppet it was) with loyalists who owed their positions

to him, has no serious rivals who might challenge him. (It is

often claimed that Stalin was paranoid and feared a coup, but

would a despot fearing for his position regularly take

summer holidays, months in length, in Sochi, far from the capital?)

By 1928, the Soviet Union had largely recovered from the damage

inflicted by the Great War, Bolshevik revolution, and subsequent

civil war. Industrial and agricultural production were back to

around their 1914 levels, and most measures of well-being had

similarly recovered. To be sure, compared to the developed

industrial economies of countries such as Germany, France, or

Britain, Russia remained a backward economy largely based upon

primitive agriculture, but at least it had undone the damage

inflicted by years of turbulence and conflict.

But in the eyes of Stalin and his close associates, who were ardent

Marxists, there was a dangerous and potentially deadly internal

contradiction in the Soviet system as it then stood. In 1921, in

response to the chaos and famine following the 1917 revolution and

years-long civil war, Lenin had proclaimed the

New

Economic Policy (NEP), which tempered the pure

collectivism of original Bolshevik doctrine by introducing a

mixed economy, where large enterprises would continue to be

owned and managed by the state, but small-scale businesses

could be privately owned and run for profit. More importantly,

in agriculture, the previous top-down system of coercive

requisitioning of grain and other products by the state

was replaced by a market system where farmers

could sell their products freely, subject to a tax, payable in

product, proportional to their production (and thus creating an

incentive to increase production).

The NEP was a great success, and shortages of agricultural

products were largely eliminated. There was grousing about

the growing prosperity of the so-called

NEPmen, but the

results of freeing the economy from the shackles of state

control were evident to all. But according to Marxist doctrine, it was

a dagger pointed at the heart of the socialist state.

By 1928, the Soviet economy could be described, in Marxist

terms, as socialism in the industrial cities and capitalism in

the agrarian countryside. But, according to Marx, the

form of politics was determined by the organisation of the

means of production; paraphrasing Breitbart, politics is

downstream of economics. This meant that preserving capitalism

in a large sector of the country, one employing a large

majority of its population and necessary to feed the cities,

was an existential risk. In such a situation it would be only

natural for the capitalist peasants to eventually prevail over

the less numerous urbanised workers and destroy socialism.

Stalin was a Marxist. He was not an opportunist who used

Marxism-Leninism to further his own ambitions. He really

believed this stuff. And so, in 1928, he proclaimed an

end to the NEP and began the forced collectivisation of Soviet

agriculture. Private ownership of land would be abolished, and

the 120 million peasants essentially enslaved as “workers”

on collective or state farms, with planting, delivery quotas, and

management all controlled by the party.

After an initial lucky year, the inevitable catastrophe ensued. Between 1931

and 1933 famine and epidemics resulting from it killed between

five and seven million people. The country lost around half of

its cattle and two thirds of its sheep. In 1929, the average

family in Kazakhstan owned 22.6 cattle; in 1933, 3.7. This was

a calamity on the same order as the Jewish Holocaust in Germany,

and just as man-made: during this period there was a global glut

of food, but Stalin refused to admit the magnitude of the

disaster for fear of inciting enemies to attack and because doing so

would concede the failure of his collectivisation project.

In addition to the famine, the process of collectivisation

resulted in between four and five million people being arrested,

executed, deported to other regions, or jailed.

Many in the starving countryside said, “If only Stalin

knew, he would do something.” But the evidence is

overwhelming: Stalin knew, and did nothing. Marxist theory

said that agriculture must be collectivised, and by pure force

of will he pushed through the project, whatever the cost. Many

in the senior Soviet leadership questioned this single-minded

pursuit of a theoretical goal at horrendous human cost, but they

did not act to stop it. Stalin, however, remembered their opposition

and would settle scores with them later.

By 1936, it appeared that the worst of the period of

collectivisation was over. The peasants, preferring to live

in slavery rather than starve to death, had acquiesced to their fate

and resumed production, and the weather co-operated in

producing good harvests. And then, in 1937, a new horror

was unleashed upon the Soviet people, also completely man-made

and driven by the will of Stalin, the

Great Terror.

Starting slowly in the aftermath of the assassination of

Sergey Kirov

in 1934, by 1937 the absurd devouring of those most

loyal to the Soviet regime, all over Stalin's signature, reached

a crescendo. In 1937 and 1938, 1,557,259 people would be

arrested and 681,692 executed, the overwhelming majority for

political offences, this in a country with a working-age population

of 100 million. Counting deaths from other causes attributable to

the secret police, the overall death toll was probably

around 830,000. This was so bizarre, and so unprecedented in

human history, that it is difficult to find any comparable situation, even in

Nazi Germany. As the author remarks,

To be sure, the greater number of victims were ordinary Soviet people, but what regime liquidates colossal numbers of loyal officials? Could Hitler—had he been so inclined—have compelled the imprisonment or execution of huge swaths of Nazi factory and farm bosses, as well as almost all of the Nazi provincial Gauleiters and their staffs, several times over? Could he have executed the personnel of the Nazi central ministries, thousands of his Wehrmacht officers—including almost his entire high command—as well as the Reich's diplomatic corps and its espionage agents, its celebrated cultural figures, and the leadership of Nazi parties throughout the world (had such parties existed)? Could Hitler also have decimated the Gestapo even while it was carrying out a mass bloodletting? And could the German people have been told, and would the German people have found plausible, that almost everyone who had come to power with the Nazi revolution turned out to be a foreign agent and saboteur?