2017

January 2017

- Brown, Brandon R. Planck. Oxford: Oxford University Press, 2015. ISBN 978-0-19-021947-5.

- Theoretical physics is usually a young person's game. Many of the greatest breakthroughs have been made by researchers in their twenties, who have just mastered existing theories while remaining intellectually flexible and open to new ideas. Max Planck, born in 1858, was an exception to this rule. He spent most of his twenties living with his parents and despairing of finding a paid position in academia. He was thirty-six when he took on the project of understanding heat radiation, and forty-two when he explained it in terms which would launch the quantum revolution in physics. He was in his fifties when he discovered zero-point energy, and he remained engaged and active in science until shortly before his death in 1947 at the age of 89. As theoretical physics editor of Annalen der Physik, then the most prestigious physics journal in the world, he approved publication of Einstein's special theory of relativity in 1905, embraced the new ideas of a young outsider with neither a Ph.D. nor an academic position, extended the theory in his own work in subsequent years, and was instrumental in persuading Einstein to come to Berlin, where the two became close friends.

Sometimes the simplest puzzles lead to the most profound of insights. At the end of the nineteenth century, the radiation emitted by heated bodies was such a conundrum. All objects emit electromagnetic radiation due to the thermal motion of their molecules. If an object is sufficiently hot, such as the filament of an incandescent lamp or the surface of the Sun, some of the radiation will fall into the visible range and be perceived as light. Cooler objects emit in the infrared or lower frequency bands and can be detected by instruments sensitive to them. The radiation emitted by a hot object has a characteristic spectrum (the distribution of energy by frequency), with a peak whose frequency depends only upon the temperature of the body. One of the simplest cases is that of a black body, an ideal object which perfectly absorbs all incident radiation. Consider an ideal closed oven which loses no heat to the outside. When heated to a given temperature, its walls will absorb and re-emit radiation, with the spectrum depending upon its temperature. But the equipartition theorem, a cornerstone of statistical mechanics, predicted that the radiation in the closed oven would carry ever more energy at ever higher frequencies, so that the total energy diverged to infinity: the so-called ultraviolet catastrophe. Not only did this violate the law of conservation of energy, it was an affront to common sense: closed ovens do not explode like nuclear bombs. And yet the theory which predicted this behaviour, the Rayleigh-Jeans law, made perfect sense based upon the motion of atoms and molecules, correctly predicted numerous physical phenomena, and agreed with measurements of thermal radiation at low frequencies (long wavelengths).

At the time Planck took up the problem of thermal radiation,

experimenters in Germany were engaged in measuring the radiation

emitted by hot objects with ever-increasing precision, confirming

the discrepancy between theory and reality, and falsifying several

attempts to explain the measurements. In December 1900, Planck

presented his new theory of black body radiation and what is

now called

Planck's Law

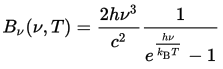

at a conference in Berlin. Written in modern notation, his

formula for the energy emitted by a body of temperature

T at frequency ν is:

This equation not only correctly predicted the results measured in the laboratories, it also avoided the ultraviolet catastrophe: instead of growing without limit, the predicted emission falls off exponentially at frequencies where hν greatly exceeds kBT, so a body at any given temperature radiates only a finite total energy. This meant that the absorption and re-emission of radiation in the closed oven could never run away to infinity. Fine: the theory explained the measurements. But what did it mean? More than a century later, we're still trying to figure that out.

Planck modeled the walls of the oven as a series of resonators, but unlike earlier theories in which each could emit energy in any amount, he constrained them to emit discrete chunks of energy whose size was proportional to the frequency emitted. Since a chunk at high frequency is large compared with the thermal energy available, emission at high frequencies is strongly suppressed. While this assumption yielded the correct result, Planck, deeply steeped in the nineteenth century tradition of the continuum, did not initially suggest that energy was actually emitted in discrete packets, considering this aspect of his theory “a purely formal assumption.”

Planck's 1900 paper generated little reaction: it was observed to fit the data, but the theory and its implications went over the heads of most physicists. In 1905, in his capacity as editor of Annalen der Physik, he read and approved the publication of Einstein's paper on the photoelectric effect, which explained another physics puzzle by assuming that light was actually emitted in discrete bundles with an energy determined by its frequency. But Planck, whose equation manifested the same property, wasn't ready to go that far.
As late as 1913, he wrote of Einstein, “That he might sometimes have overshot the target in his speculations, as for example in his light quantum hypothesis, should not be counted against him too much.” Only in the 1920s did Planck fully accept the implications of his work as embodied in the emerging quantum theory.
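
To make the contrast concrete, here is a minimal sketch in Python (my choice of language, and of an illustrative 1500 K oven; neither comes from the book) which evaluates the modern form of Planck's law alongside the Rayleigh-Jeans law. At low frequencies the two agree, but the classical curve grows as ν² without limit, so its integral over frequency diverges, while Planck's curve falls off exponentially and integrates to the finite Stefan-Boltzmann value σT⁴/π. The function names are hypothetical, and the trapezoid integration is a crude numerical check, not a precision tool.

```python
import math

# Exact SI values (2019 redefinition) of the constants in Planck's law.
H = 6.62607015e-34       # Planck constant, J·s
C = 299_792_458.0        # speed of light, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W·m⁻²·K⁻⁴


def planck(nu: float, t: float) -> float:
    """Spectral radiance B_nu(nu, T) from Planck's law, in W·sr⁻¹·m⁻²·Hz⁻¹."""
    x = H * nu / (K_B * t)
    # expm1 keeps precision when h*nu is much smaller than k_B*T.
    return 2.0 * H * nu**3 / C**2 / math.expm1(x)


def rayleigh_jeans(nu: float, t: float) -> float:
    """Classical spectral radiance from equipartition: grows as nu**2 without limit."""
    return 2.0 * nu**2 * K_B * t / C**2


def rayleigh_jeans_total(nu_max: float, t: float) -> float:
    """Closed-form integral of rayleigh_jeans from 0 to nu_max; grows as nu_max**3."""
    return 2.0 * K_B * t * nu_max**3 / (3.0 * C**2)


def integrate(f, a: float, b: float, n: int = 200_000) -> float:
    """Composite trapezoid rule for f over [a, b] with n intervals."""
    step = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * step)
    return total * step


if __name__ == "__main__":
    t = 1500.0  # illustrative oven temperature, K
    for nu in (1e12, 1e13, 1e14, 1e15):
        print(f"{nu:.0e} Hz  Planck {planck(nu, t):.3e}  Rayleigh-Jeans {rayleigh_jeans(nu, t):.3e}")
    # 1.6e15 Hz is about 51 k_B*T/h at 1500 K; Planck emission beyond it is negligible.
    planck_total = integrate(lambda nu: planck(nu, t), 1e6, 1.6e15)
    print(f"Planck total radiance {planck_total:.4e} (sigma*T^4/pi = {SIGMA * t**4 / math.pi:.4e})")
    print(f"Rayleigh-Jeans up to 1.6e15 Hz {rayleigh_jeans_total(1.6e15, t):.4e}, rising as nu_max**3")
```

Raising the upper frequency limit leaves the Planck total essentially unchanged, while the Rayleigh-Jeans total keeps climbing: that is the ultraviolet catastrophe in a few lines.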

The equation for Planck's Law contained two new fundamental physical constants: Planck's constant (h) and Boltzmann's constant (kB). (Boltzmann's constant was named in memory of Ludwig Boltzmann, the pioneer of statistical mechanics, who committed suicide in 1906. The constant was first introduced by Planck in his theory of thermal radiation.) Planck realised that these new constants, which related the worlds of the very large and very small, together with other physical constants such as the speed of light (c), the gravitational constant (G), and the Coulomb constant (ke), made it possible to define a system of units for quantities such as length, mass, time, electric charge, and temperature which were truly fundamental: derived from the properties of the universe we inhabit, and therefore comprehensible to intelligent beings anywhere in the universe. Most systems of measurement are derived from parochial anthropocentric quantities such as the temperature of somebody's armpit or the supposed distance from the north pole to the equator. Planck's natural units have no such dependencies, and when one does physics using them, equations become simpler and more comprehensible. The magnitudes of the Planck units are so far removed from the human scale that they're unlikely to find any application outside theoretical physics (imagine speed limit signs expressed as a fraction of the speed of light, or road signs giving distances in Planck lengths of 1.62×10⁻³⁵ metres), but they reflect the properties of the universe and may indicate the limits of our ability to understand it (for example, it may not be physically meaningful to speak of a distance smaller than the Planck length or an interval shorter than the Planck time [5.39×10⁻⁴⁴ seconds]).
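
The arithmetic behind those figures is short enough to sketch. This Python fragment (my own illustration, not from the book) uses the exact 2019 SI values of h, c, and kB, the CODATA 2018 values of G and the Coulomb constant, and the modern convention based on the reduced Planck constant ħ = h/2π. Planck's own 1899 definitions used h instead, which makes the length, time, and mass units larger by a factor of √(2π). The dictionary keys are hypothetical names chosen for readability.

```python
import math

# Exact 2019-SI values, plus measured CODATA 2018 values of G and k_e.
H = 6.62607015e-34        # Planck constant, J·s
HBAR = H / (2 * math.pi)  # reduced Planck constant, J·s
C = 299_792_458.0         # speed of light, m/s
G = 6.67430e-11           # Newtonian gravitational constant, m³·kg⁻¹·s⁻²
K_B = 1.380649e-23        # Boltzmann constant, J/K
K_E = 8.9875517923e9      # Coulomb constant 1/(4*pi*epsilon_0), N·m²·C⁻²


def planck_units() -> dict[str, float]:
    """Planck units in the modern hbar-based convention (SI values)."""
    length = math.sqrt(HBAR * G / C**3)   # metres
    time = length / C                     # seconds, equal to sqrt(hbar*G/c**5)
    mass = math.sqrt(HBAR * C / G)        # kilograms
    charge = math.sqrt(HBAR * C / K_E)    # coulombs
    temperature = mass * C**2 / K_B       # kelvins
    return {
        "length_m": length,
        "time_s": time,
        "mass_kg": mass,
        "charge_C": charge,
        "temperature_K": temperature,
    }


if __name__ == "__main__":
    for name, value in planck_units().items():
        print(f"{name:14s} {value:.4e}")
```

Rounded to three figures, the length and time come out as the 1.62×10⁻³⁵ metres and 5.39×10⁻⁴⁴ seconds quoted above.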

Planck's life was long and productive, and he enjoyed robust health (he continued his long hikes in the mountains into his eighties), but it was marred by tragedy. His first wife, Marie, died of tuberculosis in 1909. He outlived four of his five children. His son Karl was killed in 1916 in World War I. His two daughters, Grete and Emma, both died in childbirth, in 1917 and 1919. His son and close companion Erwin, who survived capture and imprisonment by the French during World War I, was arrested and executed by the Nazis in 1945 on suspicion of involvement in the Stauffenberg plot to assassinate Hitler. (There is no evidence Erwin was a part of the conspiracy, but he was anti-Nazi and knew some of those involved in the plot.) Planck was repulsed by the Nazis, especially after a private meeting with Hitler in 1933, but continued in his post as the head of the Kaiser Wilhelm Society until 1937. He considered himself a German patriot and never contemplated emigrating (and doubtless his being nearly 75 years old when Hitler came to power was a consideration). He opposed and resisted the purging of Jews from German scientific institutions and the campaign against “Jewish science”, but when ordered to dismiss non-Aryan members of the Kaiser Wilhelm Society, he complied. When Heisenberg approached him for guidance, he said, “You have come to get my advice on political questions, but I am afraid I can no longer advise you. I see no hope of stopping the catastrophe that is about to engulf all our universities, indeed our whole country. … You simply cannot stop a landslide once it has started.” Planck's house near Berlin was destroyed in an Allied bombing raid in February 1944, and with it a lifetime of his papers, photographs, and correspondence. (He and his second wife Marga had evacuated to Rogätz in 1943 to escape the raids.) 
As a result, historians have only limited primary sources from which to work, and the present book does an excellent job of recounting the life and science of a man whose work laid part of the foundations of twentieth century science.

- Wolfe, Tom. The Kingdom of Speech. New York: Little, Brown, 2016. ISBN 978-0-316-40462-4.

- In this short (192 page) book, Tom Wolfe returns to his roots in the “new journalism”, of which he was a pioneer in the 1960s. Here the topic is the theory of evolution; the challenge posed to it by human speech (because no obvious precursor to speech occurs in other animals); attempts, from Darwin to Noam Chomsky, to explain this apparent discrepancy and preserve the status of evolution as a “theory of everything”; and the evidence collected by linguist and anthropologist Daniel Everett among the Pirahã people of the Amazon basin in Brazil, which appears to falsify Chomsky's lifetime of work on the origin of human language and the universality of its structure. A second theme is the contrast between theorists and intellectuals such as Darwin and Chomsky and “flycatchers” such as Alfred Russel Wallace, Darwin's rival for priority in publishing the theory of evolution, and Daniel Everett, who work in the field—often in remote, unpleasant, and dangerous conditions—to collect the data upon which the grand thinkers erect their castles of hypothesis. Doubtless fearful of the reaction if he suggested the theory of evolution applied to the origin of humans, in his 1859 book On the Origin of Species, Darwin only tiptoed close to the question two pages from the end, writing, “In the distant future, I see open fields for far more important researches. Psychology will be securely based on a new foundation, that of the necessary acquirement of each mental power and capacity of gradation. Light will be thrown on the origin of man and his history.” He needn't have been so cautious: he fooled nobody. The very first review, five days before publication, asked, “If a monkey has become a man—…?”, and the tempest was soon at full force. 
Darwin's critics, among them Max Müller, German-born professor of languages at Oxford, and Darwin's rival Alfred Wallace, seized upon human characteristics which had no obvious precursors in the animals from which man was supposed to have descended: a hairless body, the capacity for abstract thought, and, Müller's emphasis, speech. As Müller said, “Language is our Rubicon, and no brute will dare cross it.” How could Darwin's theory, which claimed to describe evolution from existing characteristics in ancestor species, explain completely novel properties which animals lacked? Darwin responded with his 1871 The Descent of Man, and Selection in Relation to Sex, which explicitly argued that there were precursors to these supposedly novel human characteristics among animals, and that, for example, human speech was foreshadowed by the mating songs of birds. Sexual selection was suggested as the mechanism by which humans lost their hair, and the roots of a number of human emotions and even religious devotion could be found in the behaviour of dogs. Many found these arguments, presented without any concrete evidence, unpersuasive. The question of the origin of language had become so controversial and toxic that a year later, the Philological Society of London announced it would no longer accept papers on the subject. With the rediscovery of Gregor Mendel's work on genetics and subsequent research in the field, a mechanism which could explain Darwin's evolution was in hand, and the theory became widely accepted, with the few discrepancies set aside (as had the Philological Society) as things we weren't yet ready to figure out. In the years after World War II, the social sciences became afflicted by a case of “physics envy”. 
The contribution to the war effort by their colleagues in the hard sciences in areas such as radar, atomic energy, and aeronautics had been handsomely rewarded by prestige and funding, while the more squishy sciences remained in a prewar languor along with the departments of Latin, Medieval History, and Drama. Clearly, what was needed was for these fields to adopt the theoretical approach grounded in mathematics which had served chemists, physicists, and engineers so well, and which appeared to be working for the new breed of economists. It was into this environment that in the late 1950s a young linguist named Noam Chomsky burst onto the scene. Over its century and a half of history, much of the work of linguistics had been cataloguing and studying the thousands of languages spoken by people around the world, much as entomologists and botanists (or, in the pejorative term of Darwin's age, flycatchers) travelled to distant lands to discover the diversity of nature and try to make sense of how it was all interrelated. In his 1957 book, Syntactic Structures, Chomsky, then just twenty-eight years old and working in the building at MIT where radar had been developed during the war, said all of this tedious and messy field work was unnecessary. Humans had evolved (note, “evolved”) a “language organ”, an actual physical structure within the brain—the “language acquisition device”—which children used to learn and speak the language they heard from their parents. All human languages shared a “universal grammar”, on top of which all the details of specific languages so carefully catalogued in the field were just fluff, like the specific shape and colour of butterflies' wings. Chomsky invented the “Martian linguist”, who was to become a fixture of his lectures and who, he claimed, would on arriving on Earth quickly discover the unity underlying all human languages. No longer need the linguist leave his air conditioned office. 
As Wolfe writes in chapter 4, “Now, all the new, Higher Things in a linguist's life were to be found indoors, at a desk…looking at learned journals filled with cramped type instead of at a bunch of hambone faces in a cloud of gnats.” Given the alternatives, most linguists opted for the office, and for the prestige that a theory-based approach to their field conferred, and by the 1960s, Chomsky's views had taken over linguistics, with only a few dissenters, at whom Chomsky hurled thunderbolts from his perch on academic Olympus. He transmuted into a general-purpose intellectual, pronouncing on politics, economics, philosophy, history, and whatever occupied his fancy, all with the confidence and certainty he brought to linguistics. Those who dissented he denounced as “frauds”, “liars”, or “charlatans”, including B. F. Skinner, Alan Dershowitz, Jacques Lacan, Elie Wiesel, Christopher Hitchens, and Jacques Derrida. (Well, maybe I agree when it comes to Derrida and Lacan.) In 2002, with two colleagues, he published a new theory claiming that recursion—embedding one thought within another—was a universal property of human language and a component of the universal grammar hard-wired into the brain. Since 1977, Daniel Everett had been living with and studying the Pirahã in Brazil, originally as a missionary and later as an academic linguist trained and working in the Chomsky tradition. He was the first person to successfully learn the Pirahã language, and documented it in publications. In 2005 he published a paper in which he concluded that the language, one of the simplest ever described, contained no recursion whatsoever. It also lacked past and future tenses, terms for relations beyond parents and siblings, gender, numbers, and many other features found in other languages. But the absence of recursion falsified Chomsky's theory, which pronounced it a fundamental part of all human languages. 
Here was a field worker, a flycatcher, braving not only gnats but anacondas, caimans, and just about every tropical disease in the catalogue, knocking the foundation from beneath the great man's fairy castle of theory. Naturally, Chomsky and his acolytes responded with their customary vituperation (this time, the adjective of choice for Everett was “charlatan”). Just as they were preparing the academic paper which would drive a stake through this nonsense, Everett published Don't Sleep, There Are Snakes, a combined account of his thirty years with the Pirahã and an analysis of their language. The book became a popular hit and won numerous awards. In 2012, Everett followed up with Language: The Cultural Tool, which rejects Chomsky's view of language as an innate and universal human property in favour of the view that it is one among a multitude of artifacts created by human societies as a tool, and necessarily reflects the characteristics of those societies. Chomsky now refuses to discuss Everett's work. In the conclusion, Wolfe comes down on the side of Everett, and argues that the solution to the mystery of how speech evolved is that it didn't evolve at all. Speech is simply a tool which humans used their big brains to invent to help them accomplish their goals, just as they invented bows and arrows, canoes, and microprocessors. It doesn't make any more sense to ask how evolution produced speech than it does to suggest it produced any of those other artifacts not made by animals. He further suggests that the invention of speech proceeded from initial use of sounds as mnemonics for objects and concepts, then progressed to more complex grammatical structure, but I found little evidence in his argument to back the supposition, nor is this a necessary part of viewing speech as an invented artifact. 
Chomsky's grand theory, like most theories made up without grounding in empirical evidence, is failing both by being falsified on its fundamentals by the work of Everett and others, and also by the failure, despite half a century of progress in neurophysiology, to identify the “language organ” upon which it is based. It's somewhat amusing to see soft science academics rush to Chomsky's defence, when he's arguing that language is biologically determined as opposed to being, as Everett contends, a social construct whose details depend upon the cultural context which created it. A hunter-gatherer society such as the Pirahã, living in an environment where food is abundant and little changes over time scales from days to generations, doesn't need a language as complicated as those living in an agricultural society with division of labour, and it shouldn't be a surprise to find their language is more rudimentary. Chomsky assumed that all human languages were universal (able to express any concept), in the sense David Deutsch defined universality in The Beginning of Infinity, but why should every people have a universal language when some cultures get along just fine without universal number systems or alphabets? Doesn't it make a lot more sense to conclude that people settle on a language, like any other tool, which gets the job done? Wolfe then argues that the capacity of speech is the defining characteristic of human beings, and enables all of the other human capabilities and accomplishments which animals lack. I'd consider this not proved. Why isn't the definitive human characteristic the ability to make tools, and language simply one among a multitude of tools humans have invented? This book strikes me as one or two interesting blog posts struggling to escape from a snarknado of Wolfe's 1960s style verbal fireworks, including Bango!, riiippp, OOOF!, and “a regular crotch crusher!”. 
At age 86, he's still got it, but I wonder whether he, or his editor, questioned whether this style of journalism is as effective when discussing evolutionary biology and linguistics as in mocking sixties radicals, hippies, or pretentious artists and architects. There is some odd typography, as well. Grave accents are used in words like “learnèd”, presumably to indicate it's to be pronounced as two syllables, but then occasionally we get an acute accent instead—what's that supposed to mean? Chapter endnotes are given as superscript letters while source citations are superscript numbers, neither of which is easy to select on a touch-screen Kindle edition. There is no index.

February 2017

- Verne, Jules. Hector Servadac. Seattle: CreateSpace, [1877] 2014. ISBN 978-1-5058-3124-5.

- Over the years, I have been reading my way through the classic science fiction novels of Jules Verne, and I have prepared public domain texts of three of them which are available on my site and Project Gutenberg. Verne not only essentially invented the modern literary genre of science fiction, he was an extraordinarily prolific author, with sixty-two novels in his Voyages extraordinaires, fifty-four of them published between 1863 and his death in 1905. What prompted me to pick up the present work was an interview I read in December 2016, in which Freeman Dyson recalled that it was reading this book at around the age of eight which, more than anything, set him on a course to become a mathematician and physicist. He notes that he originally didn't know it was fiction, and was disappointed to discover the events recounted hadn't actually happened. Well, that's about as good a recommendation as you can get, so I decided to put Hector Servadac on the list. On the night of December 31–January 1, Hector Servadac, a captain in the French garrison at Mostaganem in Algeria, found it difficult to sleep, since in the morning he was to fight a duel with Wassili Timascheff, his rival for the affections of a young woman. During the night, the captain and his faithful orderly Laurent Ben-Zouf perceived an enormous shock, regained consciousness amid the ruins of their hut, and found themselves in a profoundly changed world. Thus begins a scientific detective story very different from many of Verne's other novels. We have the resourceful and intrepid Captain Servadac and his humorous side-kick Ben-Zouf, to be sure, but instead of undertaking a perilous voyage of exploration, they are taken on a voyage by forces unknown, and must discover what has happened and explain the odd phenomena they are experiencing. 
And those phenomena are curious, indeed: the Sun rises in the west and sets in the east, and the day is now only twelve hours long; their weight, and that of all objects, has been dramatically reduced, and they can now easily bound high into the air; the air itself seems to have become as thin as on high mountain peaks; the Moon has vanished from the sky; the pole has shifted and there is a new north star; and their latitude now seems to be near the equator. Exploring their environs only adds mysteries to the ever-growing list. They now seem to inhabit an island of which they are the only residents: the rest of Algeria has vanished. Eventually they make contact with Count Timascheff, whose yacht was standing offshore, and, setting aside their dispute (the duel deferred in light of greater things is a theme you'll find elsewhere in the works of Verne), they seek to explore the curiously altered world they now inhabit. In time, they discover its inhabitants seem to number only thirty-six: themselves; the Russian crew of Timascheff's yacht; some Spanish workers; a young Italian girl and Spanish boy; Isac Hakhabut, a German Jewish itinerant trader whose ship full of merchandise survived the cataclysm; the remainder of the British garrison at Gibraltar, which has been cut off and reduced to a small island; and Palmyrin Rosette, an eccentric and irritable astronomer who was once Servadac's teacher (the two are mutual nemeses). They set out on a voyage of exploration and begin to grasp what has happened and what they must do to survive. In 1865, Verne took us De la terre à la lune. Twelve years later, he treats us to a tour of the solar system, from the orbit of Venus to that of Jupiter, with abundant details of what was known about our planetary neighbourhood in his era. 
As usual, his research is nearly impeccable, although the orbital mechanics are fantasy and must be attributed to literary license: a body with an orbit which crosses those of Venus and Jupiter cannot have an orbital period of two years: it will be around five years, but that wouldn't work with the story. Verne has his usual fun with the national characteristics of those we encounter. Modern readers may find the descriptions of the miserly Jew Hakhabut and the happy but indolent Spaniards offensive—so be it—such is nineteenth century literature. This is a grand adventure: funny, enlightening, and engaging the reader in puzzling out mysteries of physics, astronomy, geology, chemistry, and, if you're like this reader, checking the author's math (which, orbital mechanics aside, is more or less right, although he doesn't make the job easy by using a multitude of different units). It's completely improbable, of course—you don't go to Jules Verne for that: he's the fellow who shot people to the Moon with a nine hundred foot cannon—but just as readers of modern science fiction are willing to accept faster than light drives to make the story work, a little suspension of disbelief here will yield a lot of entertainment. Jules Verne is the second most translated of modern authors (Agatha Christie is the first) and the most translated of those writing in French. Regrettably, Verne, and his reputation, have suffered from poor translation. He is a virtuoso of the French language, using his large vocabulary to layer meanings and subtexts beneath the surface, and many translators fail to preserve these subtleties. There have been several English translations of this novel under different titles (which I shall decline to state, as they are spoilers for the first half of the book), none of which are deemed worthy of the original. I read the Kindle edition from Arvensa, which is absolutely superb. 
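
Verne's orbit can be checked with Kepler's third law: for a body circling the Sun, the period in years is the semi-major axis in astronomical units raised to the 3/2 power, and the semi-major axis is the mean of the perihelion and aphelion distances. The sketch below is in Python; the function names are hypothetical, and treating the orbits of Venus and Jupiter as circles is a simplifying assumption. It finds the period of an orbit with perihelion at Venus's distance and aphelion at Jupiter's, and the farthest a two-year orbit could reach even with a perihelion grazing the Sun.

```python
# Semi-major axes of the planetary orbits in astronomical units (AU);
# treating both orbits as circles is a simplifying assumption.
VENUS_AU = 0.723
JUPITER_AU = 5.203


def period_years(semi_major_axis_au: float) -> float:
    """Kepler's third law for a body orbiting the Sun: P**2 = a**3 (P in years, a in AU)."""
    return semi_major_axis_au ** 1.5


def period_from_apsides(perihelion_au: float, aphelion_au: float) -> float:
    """Period of an orbit with the given perihelion and aphelion distances."""
    return period_years((perihelion_au + aphelion_au) / 2.0)


def max_aphelion_au(period_yr: float) -> float:
    """Farthest aphelion possible for a given period: the limit as perihelion -> 0,
    where the aphelion equals twice the semi-major axis."""
    return 2.0 * period_yr ** (2.0 / 3.0)


if __name__ == "__main__":
    print(f"Venus-to-Jupiter orbit: {period_from_apsides(VENUS_AU, JUPITER_AU):.2f} years")
    print(f"Any orbit reaching Jupiter: at least {period_from_apsides(0.0, JUPITER_AU):.2f} years")
    print(f"A two-year orbit reaches at most {max_aphelion_au(2.0):.2f} AU")
```

A Venus-to-Jupiter orbit comes out at a little over five years, and a two-year orbit cannot get much past 3 AU, well short of Jupiter's 5.2 AU, however eccentric it is.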
You don't usually expect much when you buy a Kindle version of a public domain work for US$ 0.99, but in this case you'll receive a thoroughly professional edition free of typographical errors which includes all of the illustrations from the original 1877 Hetzel edition. In addition there is a comprehensive biography of Jules Verne and an account of his life and work published at the height of his career. Further, the Kindle French dictionary, a free download, is invaluable when coping with Verne's enormous vocabulary. Verne is very fond of obscure terms, and whether discussing nautical terminology, geology, astronomy, or any other specialty, peppers his prose with jargon which used to send me off to flip through the Little Bob. Now it's just a matter of highlighting the word (in the iPad Kindle app), and up pops the definition from the amazingly comprehensive dictionary. (This is a French-French dictionary; if you need English translations, you'll need to install a separate dictionary which provides them.) These Arvensa Kindle editions are absolutely the best way to enjoy Jules Verne and other classic French authors, and I will definitely seek out others to read in the future. You can obtain the complete works of Jules Verne, 160 titles, with 5400 illustrations, for US$ 2.51 at this writing.

- Jenne, Mike. Pale Blue. New York: Yucca Publishing, 2016. ISBN 978-1-63158-084-0.

- This is the final novel in the trilogy which began with Blue Gemini (April 2016) and continued in Blue Darker than Black (August 2016).

After the harrowing rescue mission which concluded the second book, Drew Carson and Scott Ourecky, astronauts of the U.S. Air Force's covert Blue Gemini project, a manned satellite interceptor based upon NASA's Project Gemini spacecraft, hope for a long stand-down before what is slated to be the final mission in the project, whose future is uncertain because of funding issues, inter-service rivalry, damage to its Pacific island launch site from a recent tropical storm, and the upcoming 1972 presidential election.

Meanwhile, in the Soviet Union, progress continues on the Krepost project: a manned space station equipped for surveillance and armed with a nuclear warhead which can be de-orbited and dropped on any target along the station's ground track. General Rustam Abdirov, a survivor of the Nedelin disaster in 1960, is pushing the project to completion through his deputy, Gregor Yohzin, and believes it may hold the key to breaking what Abdirov sees as the stalemate of the Cold War. Yohzin is increasingly worried about Abdirov's stability and the risks posed by the project, and has been covertly passing information to U.S. intelligence.

As information from Yohzin's espionage reaches Blue Gemini headquarters, Carson and Ourecky are summoned back and plans are drawn up to intercept the orbital station before a crew can be launched to it, after which destroying it would not only be hazardous, but could provoke a superpower confrontation. On the Soviet side, nothing is proceeding as planned, and the interception mission must twist and turn based upon limited and shifting information.

About halfway through the book, and after some big surprises, the Krepost crisis is resolved. The reader might be inclined, then, to wonder “what next?” What follows is a war story, set in the final days of the Vietnam conflict, and for quite a while it seems incongruous and unrelated to all that has gone before. I have remarked in reviews of the earlier books of the trilogy that the author is keeping a large number of characters and sub-plots in the air, and wondered whether and how he was going to bring it all together. Well, in the last five chapters he does it, magnificently, and ties everything up with a bow on the top, ending what has been a rewarding thriller in a moving, human conclusion.

There are a few goofs. Launch windows to inclined Earth orbits occur every day; in case of a launch delay, there is no need for a long wait before the next launch attempt (chapter 4). When a character is attempting to solve a difficult problem, “the variables refused to remain constant”: that's why they're called variables (chapter 10)! Beaujolais is red, not white, wine (chapter 16). A character claims to have seen a hundred stars in the Pleiades from space with the unaided eye. This is impossible: while the cluster contains around 1000 stars, only 14 are bright enough to be seen with the best human vision under the darkest skies. Observing from space is slightly better than from the Earth's surface, but in this case the observer would have been looking through a spacecraft window, which would attenuate light more than the Earth's atmosphere (chapter 25). MIT's Draper Laboratory did not design the Gemini on-board computer; it was developed by the IBM Federal Systems Division (chapter 26).

The trilogy is a big, sprawling techno-thriller with interesting and complicated characters and includes space flight, derring-do in remote and dangerous places, military and political intrigue in both the U.S. and Soviet Union, espionage, and a look at how the stresses of military life and participation in black programs make the lives of those involved in them difficult. Although the space program which is the centrepiece of the story is fictional, the attention to detail is exacting: had it existed, this is probably how it would have been done. I have one big quibble with a central part of the premise, which I will discuss behind the curtain.

This trilogy is one long story which spans three books. The second and third novels begin with brief summaries of prior events, but these are intended mostly for readers who have forgotten where the previous volume left off. If you don't read the three books in order, you'll miss a great deal of the character and plot development which makes the entire story so rewarding. More than 1600 pages may seem a large investment in a fictional account of a Cold War space program that never happened, but the technical authenticity; realistic portrayal of military aerospace projects and the interaction of pilots, managers, engineers, and politicians; and complicated and memorable characters made it more than worthwhile to this reader.

Spoiler warning: Plot and/or ending details follow.

The rationale which got the Blue Gemini program funded was largely defence against a feared Soviet “orbital bombardment system”: one or more satellites which, placed in orbits which regularly overfly the U.S. and allies, could be commanded to deorbit and deliver nuclear warheads to any location below. It is the development of such a weapon, its deployment, and a mission to respond to the threat which form the core of the plot of this novel. But an orbital bombardment system isn't a very useful weapon, and doesn't make much sense, especially in the context of the late 1960s to early '70s in which this story is set. The Krepost of the novel was armed with a single high-yield weapon, and operated in a low Earth orbit at an inclination of 51°. The weapon was equipped with only a retrorocket and heat shield, and would have little cross-range (ability to hit targets lateral to its orbital path). This would mean that in order to hit a specific target, the orbital station would have to wait up to a day for the Earth to rotate so the target was aligned with the station's orbital plane. And this would allow bombardment of only a single target with one warhead. Keeping the station ready for use would require a constant series of crew ferry and freighter launches, all to maintain just one bomb on alert. By comparison, by 1972, the Soviet Union had on the order of a thousand warheads mounted on ICBMs, which required no space launch logistics to maintain, and could reach targets anywhere within half an hour of the launch order being given. Finally, a space station in low Earth orbit is pretty much a sitting duck for countermeasures. It is easy to track from the ground, and has limited maneuvering capability. Even guns in space do not much mitigate the threat from a variety of anti-satellite weapons, including Blue Gemini.

While the drawbacks of orbital deployment of nuclear weapons caused the U.S. and Soviet Union to eschew them in favour of more economical and secure platforms such as silo-based missiles and ballistic missile submarines, their appearance here does not make this “what if?” thriller any less effective or thrilling. This was the peak of the Cold War, and both adversaries explored many ideas which, in retrospect, appear to have made little sense. A hypothetical Soviet nuclear-armed orbital battle station is no less crazy than Project Pluto in the U.S.

Spoilers end here.

March 2017

- Awret, Uziel, ed. The Singularity. Exeter, UK: Imprint Academic, 2016. ISBN 978-1-84540-907-4.

-

For more than half a century, the prospect of a technological singularity has been part of the intellectual landscape of those envisioning the future. In 1965, in a paper titled “Speculations Concerning the First Ultraintelligent Machine”, statistician I. J. Good wrote,

Let an ultra-intelligent machine be defined as a machine that can far surpass all of the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion”, and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.

(The idea of a runaway increase in intelligence had been discussed earlier, notably by Robert A. Heinlein in a 1952 essay titled “Where To?”) Discussion of an intelligence explosion and/or technological singularity was largely confined to science fiction and the more speculatively inclined among those trying to foresee the future, mainly because the prerequisite—building machines which were more intelligent than humans—seemed such a distant prospect, especially as the initially optimistic claims of workers in the field of artificial intelligence gave way to disappointment. Over all those decades, however, the exponential growth in computing power available at constant cost continued. The funny thing about continued exponential growth is that it doesn't matter what fixed level you're aiming for: the exponential will eventually exceed it, and probably a lot sooner than most people expect. By the 1990s, it was clear just how far the growth in computing power and storage had come, and that there were no technological barriers on the horizon likely to impede continued growth for decades to come. People started to draw straight lines on semi-log paper and discovered that, depending upon how you evaluate the computing capacity of the human brain (a complicated and controversial question), the computing power of a machine with a cost comparable to a present-day personal computer would cross the human brain threshold sometime in the twenty-first century. There seemed to be a limited number of alternative outcomes.

- Progress in computing comes to a halt before reaching parity with human brain power, due to technological limits, economics (inability to afford the new technologies required, or lack of applications to fund the intermediate steps), or intervention by authority (for example, regulation motivated by a desire to avoid the risks and displacement due to super-human intelligence).

- Computing continues to advance, but we find that the human brain is either far more complicated than we believed it to be, or that something is going on in there which cannot be modelled or simulated by a deterministic computational process. The goal of human-level artificial intelligence recedes into the distant future.

- Blooie! Human-level machine intelligence is achieved, successive generations of machine intelligences run away to approach the physical limits of computation, and before long machine intelligence exceeds that of humans to the degree humans surpass the intelligence of mice (or maybe insects).

I take it for granted that there are potential good and bad aspects to an intelligence explosion. For example, ending disease and poverty would be good. Destroying all sentient life would be bad. The subjugation of humans by machines would be at least subjectively bad.

…well, at least in the eyes of the humans. If there is a singularity in our future, how might we act to maximise the good consequences and avoid the bad outcomes? Can we design our intellectual successors (and bear in mind that we will design only the first generation: each subsequent generation will be designed by the machines which preceded it) to share human values and morality? Can we ensure they are “friendly” to humans and not malevolent (or, perhaps, indifferent, just as humans do not take into account the consequences for ant colonies and bacteria living in the soil upon which buildings are constructed)? And just what are “human values and morality” and “friendly behaviour” anyway, given that we have been slaughtering one another for millennia in disputes over such issues? Can we impose safeguards to prevent the artificial intelligence from “escaping” into the world? What is the likelihood we could prevent such a super-being from persuading us to let it loose, given that it thinks thousands or millions of times faster than we, has access to all of human written knowledge, and has the ability to model and simulate the effects of its arguments? Is turning off an AI murder, or terminating the simulation of an AI society genocide? Is it moral to confine an AI to what amounts to a sensory deprivation chamber, or to solitary confinement, or to deceive it about the nature of the world outside its computing environment? What will become of humans in a post-singularity world? Given that our species is the only survivor of genus Homo, history is not encouraging, and the gap between human intelligence and that of post-singularity AIs is likely to be orders of magnitude greater than that between modern humans and the great apes. Will these super-intelligent AIs have consciousness and self-awareness, or will they be philosophical zombies: able to mimic the behaviour of a conscious being but devoid of any internal sentience? 
What does that even mean, and how can you be sure other humans you encounter aren't zombies? Are you really all that sure about yourself? Are the qualia of machines not constrained? Perhaps the human destiny is to merge with our mind children, either by enhancing human cognition, senses, and memory through implants in our brain, or by uploading our biological brains into a different computing substrate entirely, whether by emulation at a low level (for example, simulating neuron by neuron at the level of synapses and neurotransmitters), or at a higher, functional level based upon an understanding of the operation of the brain gleaned by analysis by AIs. If you upload your brain into a computer, is the upload conscious? Is it you? Consider the following thought experiment: replace each biological neuron of your brain, one by one, with a machine replacement which interacts with its neighbours precisely as the original meat neuron did. Do you cease to be you when one neuron is replaced? When a hundred are replaced? A billion? Half of your brain? The whole thing? Does your consciousness slowly fade into zombie existence as the biological fraction of your brain declines toward zero? If so, what is magic about biology, anyway? Isn't arguing that there's something about the biological substrate which uniquely endows it with consciousness as improbable as the discredited theory of vitalism, which contended that living things had properties which could not be explained by physics and chemistry? Now let's consider another kind of uploading. Instead of incremental replacement of the brain, suppose an anæsthetised human's brain is destructively scanned, perhaps by molecular-scale robots, and its structure transferred to a computer, which will then emulate it precisely as the incrementally replaced brain in the previous example. 
When the process is done, the original brain is a puddle of goo and the human is dead, but the computer emulation now has all of the memories, life experience, and ability to interact as its progenitor. But is it the same person? Did the consciousness and perception of identity somehow transfer from the brain to the computer? Or will the computer emulation mourn its now-departed biological precursor, as it contemplates its own immortality? What if the scanning process isn't destructive? When it's done, BioDave wakes up and makes the acquaintance of DigiDave, who shares his entire life up to the point of uploading. Certainly the two must be considered distinct individuals, as are identical twins whose histories diverged in the womb, right? Does DigiDave have rights in the property of BioDave? “Dave's not here”? Wait—we're both here! Now what? Or, what about somebody today who, in the sure and certain hope of the Resurrection to eternal life, opts to have their brain cryonically preserved moments after clinical death is pronounced? After the singularity, the decedent's brain is scanned (in this case it's irrelevant whether or not the scan is destructive), and uploaded to a computer, which starts to run an emulation of it. Will the person's identity and consciousness be preserved, or will it be a new person with the same memories and life experiences? Will it matter? Deep questions, these.

The book presents Chalmers' paper as a “target essay”, and then invites contributors in twenty-six chapters to discuss the issues raised. A concluding essay by Chalmers replies to the essays and defends his arguments against objections to them by their authors. The essays, and their authors, are all over the map. One author strikes this reader as a confidence man and another a crackpot—and these are two of the more interesting contributions to the volume. 
Nine chapters are by academic philosophers, and are mostly what you might expect: word games masquerading as profound thought, with an admixture of ad hominem argument, including one chapter which descends into Freudian pseudo-scientific analysis of Chalmers' motives and says that he “never leaps to conclusions; he oozes to conclusions”. Perhaps these are questions philosophers are ill-suited to ponder. Unlike questions of the nature of knowledge, how to live a good life, the origins of morality, and all of the other diffuse gruel about which philosophers have been arguing since societies became sufficiently wealthy to indulge in them, without any notable resolution in more than two millennia, the issues posed by a singularity have answers. Either the singularity will occur or it won't. If it does, it will either result in the extinction of the human species (or its reduction to irrelevance), or it won't. AIs, if and when they come into existence, will either be conscious, self-aware, and endowed with free will, or they won't. They will either share the values and morality of their progenitors or they won't. It will either be possible for humans to upload their brains to a digital substrate, or it won't. These uploads will either be conscious, or they'll be zombies. If they're conscious, they'll either continue the identity and life experience of the pre-upload humans, or they won't. These are objective questions which can be settled by experiment. You get the sense that philosophers dislike experiments—they threaten the job security of those who make a living disputing questions their intellectual ancestors have been puzzling over at least since Athens. Some authors dispute the probability of a singularity and argue that the complexity of the human brain has been vastly underestimated. Others contend there is a distinction between computational power and the ability to design, and consequently exponential growth in computing may not produce the ability to design super-intelligence. 
Still another chapter dismisses the evolutionary argument through evidence that the scope and time scale of terrestrial evolution are computationally intractable into the distant future even if computing power continues to grow at the rate of the last century. There is even a case made that the feasibility of a singularity makes the probability that we're living, not in a top-level physical universe, but in a simulation run by post-singularity super-intelligences, overwhelming, and that they may be motivated to turn off our simulation before we reach our own singularity, which may threaten them. This is all very much a mixed bag. There are a multitude of Big Questions, but very few Big Answers among the 438 pages of philosopher word salad. I find my reaction similar to that of David Hume, who wrote in 1748:

If we take in our hand any volume of divinity or school metaphysics, for instance, let us ask, Does it contain any abstract reasoning concerning quantity or number? No. Does it contain any experimental reasoning concerning matter of fact and existence? No. Commit it then to the flames, for it can contain nothing but sophistry and illusion.

I don't burn books (it's некультурный [uncultured] and expensive when you read them on an iPad), but you'll probably learn as much pondering the questions posed here on your own and in discussions with friends as from the scholarly contributions in these essays. The copy editing is mediocre, with some eminent authors stumbling over the humble apostrophe. The Kindle edition cites cross-references by page number, which are useless since the electronic edition does not include page numbers. There is no index.

- Hannan, Daniel. What Next. London: Head of Zeus, 2016. ISBN 978-1-78669-193-4.

- On June 23rd, 2016, the people of the United Kingdom, against the advice of most politicians, big business, organised labour, corporate media, academia, and their self-styled “betters”, narrowly voted to re-assert their sovereignty and reclaim the independence of their proud nation, slowly being dissolved in an “ever closer union” with the anti-democratic, protectionist, corrupt, bankrupt, and increasingly authoritarian European Union (EU). The day of the referendum, bookmakers gave odds which implied less than a 20% chance of a Leave vote, and yet the morning after, the common sense and perception of right and wrong of the British people, which had caused them to prevail in the face of wars, economic and social crises, and a changing international environment, re-asserted itself, and caused them to say, “No more, thank you. We prefer our thousand-year tradition of self-rule to being dictated to by unelected foreign oligarchic technocrats.” The author, Conservative Member of the European Parliament for South East England since 1999, has been one of the most vociferous and eloquent partisans of Britain's reclaiming its independence and campaigners for a Leave vote in the referendum; the vote was a personal triumph for him. In the introduction, he writes, “After forty-three years, we have pushed the door ajar. A rectangle of light dazzles us and, as our eyes adjust, we see a summer meadow. Swallows swoop against the blue sky. We hear the gurgling of a little brook. Now to stride into the sunlight.” What next, indeed? Before presenting his vision of an independent, prosperous, and more free Britain, he recounts Britain's history in the European Union, the sordid state of the institutions of that would-be socialist superstate, and the details of the Leave campaign, including a candid and sometimes acerbic view not just of his opponents but also of nominal allies. 
Hannan argues that Leave ultimately won because those advocating it were able to present a positive future for an independent Britain. He says that every time the Leave message veered toward negatives of the existing relationship with the EU, in particular immigration, polling in favour of Leave declined, and when the positive benefits of independence were stressed—for example free trade with Commonwealth nations and the rest of the world, local control of Britain's fisheries and agriculture, living under laws made in Britain by a parliament elected by the British people—Leave's polling improved. Fundamentally, you can only get so far asking people to vote against something, especially when the establishment is marching in lockstep to create fear of the unknown among the electorate. Presenting a positive vision was, Hannan believes, essential to prevailing. Central to understanding a post-EU Britain is the distinction between a free-trade area and a customs union. The EU has done its best to confuse people about this issue, presenting its single market as a kind of free trade utopia. Nothing could be farther from the truth. A free trade area is just what the name implies: a group of states which have eliminated tariffs and other barriers such as quotas, and allow goods and services to cross borders unimpeded. A customs union such as the EU establishes standards for goods sold within its internal market which, through regulation, members are required to enforce (hence, the absurdity of unelected bureaucrats in Brussels telling the French how to make cheese). Further, while goods conforming to the regulations can be sold within the union, there are major trade barriers with parties outside, often imposed to protect industries with political pull inside the union. For example, wine produced in California or Chile is subject to a 32% tariff imposed by the EU to protect its own winemakers. 
British apparel manufacturers cannot import textiles from India, a country with which Britain has long historical and close commercial ties, without paying EU tariffs intended to protect uncompetitive manufacturers on the Continent. Pointy-headed and economically ignorant “green” policies compound the problem: a medium-sized company in the EU pays 20% more for energy than a competitor in China and twice as much as one in the United States. In international trade disputes, Britain in the EU is represented by one twenty-eighth of a European Commissioner, while an independent Britain will have its own seat, like New Zealand, Switzerland, and the US. Hannan believes that after leaving the EU, the UK should join the European Free Trade Association (EFTA), and demonstrates how EFTA states such as Norway and Switzerland are more prosperous than EU members and have better trade with countries outside it. (He argues against joining the European Economic Area [EEA], from which Switzerland has wisely opted out. The EEA provides too much leverage to the Brussels imperium to meddle in the policies of member states.) More important for Britain's future than its relationship to the EU is its ability, once outside, to conclude bilateral trade agreements with important trading partners such as the US (even, perhaps, joining NAFTA), Anglosphere countries such as Australia, South Africa, and New Zealand, and India, China, Russia, Brazil and other nations: all of which it cannot do while a member of the EU. What of Britain's domestic policy? Free of diktats from Brussels, it will be whatever Britons wish, expressed through their representatives at Westminster. Hannan quotes the psychologist Kurt Lewin, who in the 1940s described change as a three-stage process. First, old assumptions about the way things are and the way they have to be become “unfrozen”. This ushers in a period of rapid transformation, where institutions become fluid and can adapt to changed circumstances and perceptions. 
Then the new situation congeals into a status quo which endures until the next moment of unfreezing. For four decades, Britain has been frozen into an inertia where parliamentarians and governments respond to popular demands all too often by saying, “We'd like to do that, but the EU doesn't permit it.” Leaving the EU will remove this comfortable excuse, and possibly catalyse a great unfreezing of Britain's institutions. Where will this ultimately go? Wherever the people wish it to. Hannan has some suggestions for potential happy outcomes in this bright new day. Britain has devolved substantial governance to Scotland, and yet Scottish MPs still vote in Westminster for policies which affect England but to which their constituents are not subject. Perhaps federalisation might progress to the point where the House of Commons becomes the English Parliament, with either a reformed House of Lords or a new body empowered to vote only on matters affecting the entire Union such as national defence and foreign policy. Free of the EU, the UK can adopt competitive corporate taxation and governance policies, and attract companies from around the world to build not just headquarters but also research and development and manufacturing facilities. The national VAT could be abolished entirely and replaced with a local sales tax, paid at point of retail, set by counties or metropolitan areas in competition with one another (current payments to these authorities by the Treasury are almost exactly equal to revenue from the VAT); with competition, authorities will be forced to economise lest their residents vote with their feet. With their own source of revenue, decision making for a host of policies, from housing to welfare, could be pushed down from Whitehall to City Hall. Immigration can be re-focused upon the need of the country for skills and labour, not thrown open to anybody who arrives. 
The British vote for independence has been decried by the elitists, oligarchs, and would-be commissars as a “populist revolt”. (Do you think those words too strong? Did you know that all of those EU politicians and bureaucrats are exempt from taxation in their own countries, and pay a flat tax of around 21%, far less than the despised citizens they rule?) What is happening, first in Britain, and before long elsewhere as the corrupt foundations of the EU crumble, is that the working classes are standing up to the smirking classes and saying, “Enough.” Britain's success, which (unless the people are betrayed and their wishes subverted) is assured, since freedom and democracy always work better than slavery and bureaucratic dictatorship, will serve to demonstrate to citizens of other railroad-era continental-scale empires that smaller, agile, responsive, and free governance is essential for success in the information age.

- Pratchett, Terry and Stephen Baxter. The Long War. New York: HarperCollins, 2013. ISBN 978-0-06-206869-9.

- This is the second novel in the authors' series which began with The Long Earth (November 2012). That book, which I enjoyed immensely, created a vast new arena for storytelling: a large, perhaps infinite, number of parallel Earths, all synchronised in time, among which people can “step” with the aid of a simple electronic gizmo (incorporating a potato) whose inventor posted the plans on the Internet on what has since been called Step Day. Some small fraction of the population has always been “natural steppers”, able to move among universes without mechanical assistance, but other than that tiny minority, all of the worlds of the Long Earth beyond our own (called the Datum) are devoid of humans. There are natural stepping humanoids, dubbed “elves” and “trolls”, but none with human-level intelligence. As this book opens, a generation has passed since Step Day, and the human presence has begun to spread across the vast expanses of the Long Earth. Most worlds are pristine wilderness, with all the dangers to pioneers venturing into places where large predators have never been controlled. Joshua Valienté, whose epic voyage of exploration with Lobsang (who from moment to moment may be a motorcycle repairman, computer network, Tibetan monk, or airship) discovered the wonders of these innumerable worlds in the first book, has settled down to raise a family on a world in the Far West. Humans being humans, this gift of what amounts to an infinitely larger scope for their history has not been without its drawbacks and conflicts. With the opening of an endless frontier, the restless and creative have decamped from the Datum to seek adventure and fortune free of the crowds and control of their increasingly regimented home world. 
This has resulted in a drop in innovation and an economic hit to the Datum, and has led Datum politicians (particularly in the United States, the grabbiest of all jurisdictions) to seek to expand their control (and particularly the ability to loot) to all residents of the so-called “Aegis”—the geographical footprint of its territory across the multitude of worlds. The trolls, who mostly get along with humans and work for them, learn through their “long call”, which carries news across the worlds, of scandalous mistreatment of their kind by humans in some places, and now appear to have vanished from many human settlements to parts unknown. A group of worlds in the American Aegis in the distant West have adopted the Valhalla Declaration, asserting their independence from the greedy and intrusive government of the Datum and, in response, the Datum is sending a fleet of stepping airships (or “twains”, named for the Mark Twain of the first novel) to assert its authority over these recalcitrant emigrants. Joshua and Sally Linsay, pioneer explorers, return to the Datum to make their case for the rights of trolls. China mounts an ambitious expedition to the unseen worlds of its footprint in the Far East. And so it goes, for more than four hundred pages. This really isn't a novel at all, but rather four or five novellas interleaved with one another, where the individual stories barely interact before most of the characters meet at a barbecue in the next-to-last chapter. When I put down The Long Earth, I concluded that the authors had created a stage in which all kinds of fiction could play out and looked forward to seeing what they'd do with it. What a disappointment! There are a few interesting concepts, such as evolutionary consequences of travel between parallel Earths and technologies which oppressive regimes use to keep their subjects from just stepping away to freedom, but they are few and far between. There is no war! 
If you're going to title your book The Long War, many readers are going to expect one, and it doesn't happen. I can recall only two laugh-out-loud lines in the entire book, which is hardly what you expect when picking up a book with Terry Pratchett's name on the cover. I shall not be reading the remaining books in the series which, if Amazon reviews are to be believed, go downhill from here.

April 2017

- Houellebecq, Michel. Soumission. Paris: J'ai Lu, [2015] 2016. ISBN 978-2-290-11361-5.

-

If you examine the Pew Research Center's table of Muslim Population by Country, giving the percent Muslim population for countries and territories, one striking thing is apparent. Here are the results, binned into quintiles.

The distribution in this table is strongly bimodal—instead of the Gaussian (normal, or “bell curve”) distribution one encounters so often in the natural and social sciences, the countries cluster at the extremes: 36 are 80% or more Muslim, 132 are 20% or less Muslim, and only a total of 20 fall in the middle between 20% and 80%. What is going on? I believe this is evidence for an Islamic population fraction greater than some threshold above 20% being an attractor in the sense of dynamical systems theory. With the Islamic doctrine of its superiority to other religions and destiny to bring other lands into its orbit, plus scripturally-sanctioned discrimination against non-believers, once a Muslim community reaches a certain critical mass, and if it retains its identity and coherence, resisting assimilation into the host culture, it will tend to grow not just organically but by making conversion (whether sincere or motivated by self-interest) an attractive alternative for those who encounter Muslims in their everyday life. If this analysis is correct, what is the critical threshold? Well, that's the big question, particularly for countries in Europe which have admitted substantial Muslim populations that are growing faster than the indigenous population due to a higher birthrate and ongoing immigration, and where there is substantial evidence that subsequent generations are retaining their identity as a distinct culture apart from that of the country where they were born. What happens as the threshold is crossed, and what does it mean for the original residents and institutions of these countries? That is the question explored in this satirical novel set in the year 2022, in the period surrounding the French presidential election of that year. 
In the 2017 election, the Front national narrowly won the first round of the election, but was defeated in the second round by an alliance between the socialists and traditional right, resulting in the election of a socialist president in a country with a centre-right majority. Five years after an election which satisfied few people, the electoral landscape has shifted substantially. A new party, the Fraternité musulmane (Muslim Brotherhood), led by the telegenic, pro-European, and moderate Mohammed Ben Abbes, French-born son of a Tunisian immigrant, has grown to rival the socialist party for second place behind the Front national, which remains safely ahead in projections for the first round. When the votes are counted, the unthinkable has happened: all of the traditional government parties are eliminated, and the second round will be a run-off between FN leader Marine Le Pen and Ben Abbes. These events are experienced and recounted by “François” (no last name is given), a fortyish professor of literature at the Sorbonne, a leading expert on the 19th-century French writer Joris-Karl Huysmans, who was considered a founder of the decadent movement, but later in life reverted to Catholicism and became a Benedictine oblate. François is living what may be described as a modern version of the decadent life. Single, living alone in a small apartment where he subsists mostly on microwaved dinners, he has become convinced his intellectual life peaked with the publication of his thesis on Huysmans and that his future holds nothing other than going through the motions of teaching his classes at the university. His amorous life is largely confined to a serial set of affairs with his students, most of which end with the academic year when they “meet someone” and, in the gaps, liaisons with “escorts” in which he indulges in the kind of perversion the decadents celebrated in their writings. 
About the only thing which interests him is politics and the election, not as a participant but as an observer, watching television by himself. After the first round, there is the stunning news that, in order to prevent a Front national victory, the Muslim Brotherhood, socialist, and traditional right parties have formed an alliance supporting Ben Abbes for president, with an agreed division of ministries among the parties. Myriam, François' current girlfriend, leaves with her Jewish family to settle in Israel, joining many of her faith who anticipate what is coming, having seen it so many times before in the history of their people. François follows in the footsteps of Huysmans, visiting the Benedictine monastery in Martel, a village said to have been founded by Charles Martel, who defeated the Muslim invasion of Europe in A.D. 732 at the Battle of Tours. He finds neither solace nor inspiration there and returns to Paris where, with the alliance triumphant in the second round of the election and Ben Abbes president, changes are immediately apparent. Ethnic strife has fallen to a low level: the Muslim community sees itself ascendant and has no need for political agitation. The unemployment rate has fallen to historical lows: forcing women out of the workforce will do that, especially when they are no longer counted in the statistics. Polygamy has been legalised, as part of the elimination of gender equality under the law. More and more women on the street dress modestly and wear the veil. The Sorbonne has been “privatised”, becoming the Islamic University of Paris, and all non-Muslim faculty, including François, have been dismissed. 
With generous funding from the petro-monarchies of the Gulf, François and other now-redundant academics receive lifetime pensions sufficient that they never need work again, but it grates upon them to see intellectual inferiors, after a cynical and insincere conversion to Islam, replace them at salaries often three times higher than they received. Unemployed, François grasps at an opportunity to edit a new edition of Huysmans for Pléiade, and encounters Robert Rediger, an ambitious academic who has been appointed rector of the Islamic University and has the ear of Ben Abbes. They later meet at Rediger's house, where, over a fine wine, he gives François a copy of his introductory book on Islam, explains the benefits of polygamy and arranged marriage to a man of his social standing, and describes the opportunities open to Islamic converts in the new university. Eventually, François, like France, ends in submission. As G. K. Chesterton never actually said, “When a man stops believing in God he doesn't then believe in nothing; he believes anything.” (The false quotation appears to be a synthesis of similar sentiments expressed by Chesterton in a number of different works.) Whatever the attribution, there is truth in it. François is an embodiment of post-Christian Europe, where the nucleus around which Western civilisation has been built since the fall of the Roman Empire has evaporated, leaving a void which deprives people of the purpose, optimism, and self-confidence of their forebears. Such a vacuum is more likely to be filled with something, anything, than to endure for long, especially when an aggressive, virile, ambitious, and prolific competitor has established itself in the lands of the decadent. An English translation is available. This book is not recommended for young readers due to a number of sex scenes I found gratuitous and, even to this non-young reader, somewhat icky. 
This is a social satire, not a forecast of the future, but I found it more plausible than many scenarios envisioned for a Muslim conquest of Europe. I'll leave you to discover for yourself how the clever Ben Abbes envisions co-opting Eurocrats in his project of grand unification.

| Quintile | % Muslim | Countries |
|---|---|---|
| 1 | 100–80 | 36 |
| 2 | 80–60 | 5 |
| 3 | 60–40 | 8 |
| 4 | 40–20 | 7 |
| 5 | 20–0 | 132 |

May 2017

- Jacobsen, Annie. Phenomena. New York: Little, Brown, 2017. ISBN 978-0-316-34936-9.