
Thursday, December 28, 2006

Computer Survivalism?

The knowledgeable and wise Peter Gutmann (Department of Computer Science, University of Auckland, New Zealand) has posted a paper entitled “A Cost Analysis of Windows Vista Content Protection” which explores in detail the assorted “content protection” technologies mandated for computer hardware and device driver software by Windows Vista. If you have not been following this topic, you may be aghast at what is coming down the pike in the next generation of PCs designed to deliver “premium media content”. The details are so horrific and depressing that I will not attempt to summarise them here; go read the article and keep in mind that most of the information therein is derived from the Microsoft documents cited at the end. Apart from the consequences of this insanity for the reliability, performance, software and driver portability, and maintainability of the forthcoming products which will implement it, consider for a moment: if Microsoft are willing to go to such lengths to protect “content” owned by third parties (perhaps with the intent of “own[ing] the distribution channel” as Gutmann suggests in the “Final Thoughts” section), imagine what they will do with the complementary “trusted computing” technologies to defend their de facto monopoly against “unlicensed operating systems” and other competition. One of the most common reactions to The Digital Imprimatur was that the hardware-enforced end to end signatures I was proposing were paranoid, technically infeasible, and unacceptable to customers. But that proposal is nothing compared to what Vista appears to require. What are those of us who use computers to compute and create instead of passively consuming “premium media content” and games to do when the next generation of hardware essentially denies us the prerogatives of ownership of a machine we have purchased, and perhaps mandates the use of software which limits our access to the machine's full functionality?
Maybe it's time to take a page from the survivalists' book and stockpile enough generic “white box” computers and spare parts of the last free generation to carry us through the Dark Times ahead. The more generic the components, the easier it will be to obtain spares on the used market should your own supply of spares of a given category be exhausted. I would usually dismiss a suggestion like this as flaming paranoia, but it is difficult to read Gutmann's paper without concluding “they” are out to get us, or at least undertaking to transform personal computers as we have come to know them into locked-down “content delivery platforms” filled with secrets inaccessible to their nominal owners.

Tuesday, December 26, 2006

Reading List: On Intelligence

- Hawkins, Jeff with Sandra Blakeslee. On Intelligence. New York: Times Books, 2004. ISBN 0-8050-7456-2.

- Ever since the early days of research into the sub-topic of computer science which styles itself “artificial intelligence”, such work has been criticised by philosophers, biologists, and neuroscientists who argue that while symbolic manipulation, database retrieval, and logical computation may be able to mimic, to some limited extent, the behaviour of an intelligent being, in no case does the computer understand the problem it is solving in the sense a human does. John R. Searle's “Chinese Room” thought experiment is one of the best known and extensively debated of these criticisms, but there are many others just as cogent and difficult to refute. These days, criticising artificial intelligence verges on hunting cows with a bazooka—unlike the early days in the 1950s when everybody expected the world chess championship to be held by a computer within five or ten years and mathematicians were fretting over what they'd do with their lives once computers learnt to discover and prove theorems thousands of times faster than they, decades of hype, fads, disappointment, and broken promises have instilled some sense of reality into the expectations most technical people have for “AI”, if not into those working in the field and those they bamboozle with the sixth (or is it the sixteenth) generation of AI bafflegab. AI researchers sometimes defend their field by saying “If it works, it isn't AI”, by which they mean that as soon as a difficult problem once considered within the domain of artificial intelligence—optical character recognition, playing chess at the grandmaster level, recognising faces in a crowd—is solved, it's no longer considered AI but simply another computer application, leaving AI with the remaining unsolved problems. 
There is certainly some truth in this, but a closer look gives the lie to the claim that these problems, solved with enormous effort on the part of numerous researchers, and with the application, in most cases, of computing power undreamed of in the early days of AI, actually represent “intelligence”, or at least what one regards as intelligent behaviour on the part of a living brain. First of all, in no case did a computer “learn” how to solve these problems in the way a human or other organism does; in every case experts analysed the specific problem domain in great detail, developed special-purpose solutions tailored to the problem, and then implemented them on computing hardware which in no way resembles the human brain. Further, each of these “successes” of AI is useless outside its narrow scope of application: a chess-playing computer cannot read handwriting, a speech recognition program cannot identify faces, and a natural language query program cannot solve mathematical “word problems” which pose no difficulty to fourth graders. And while many of these programs are said to be “trained” by presenting them with collections of stimuli and desired responses, no amount of such training will permit, say, an optical character recognition program to learn to write limericks. Such programs can certainly be useful, but nothing other than the fact that they solve problems which were once considered difficult in an age when computers were much slower and had limited memory resources justifies calling them “intelligent”, and outside the marketing department, few people would remotely consider them so. The subject of this ambitious book is not “artificial intelligence” but intelligence: the real thing, as manifested in the higher cognitive processes of the mammalian brain, embodied, by all the evidence, in the neocortex. One of the most fascinating things about the neocortex is how much a creature can do without one, for only mammals have them.
Reptiles, birds, amphibians, fish, and even insects (which barely have a brain at all) exhibit complex behaviour, perception of and interaction with their environment, and adaptation to an extent which puts to shame the much-vaunted products of “artificial intelligence”, and yet they all do so without a neocortex at all. In this book, the author hypothesises that the neocortex evolved in mammals as an add-on to the old brain (essentially, what computer architects would call a “bag hanging on the side of the old machine”) which implements a multi-level hierarchical associative memory for patterns and a complementary decoder from patterns to detailed low-level behaviour which, wired through the old brain to the sensory inputs and motor controls, dynamically learns spatial and temporal patterns and uses them to make predictions which are fed back to the lower levels of the hierarchy, which in turn signal whether further inputs confirm or deny them. The ability of the high-level cortex to correctly predict inputs is what we call “understanding” and it is something which no computer program is presently capable of doing in the general case. Much of the recent and present-day work in neuroscience has been devoted to imaging where the brain processes various kinds of information. While fascinating and useful, these investigations may overlook one of the most striking things about the neocortex: that almost every part of it, whether devoted to vision, hearing, touch, speech, or motion, appears to have more or less the same structure. This observation, by Vernon B. Mountcastle in 1978, suggests there may be a common cortical algorithm by which all of these seemingly disparate forms of processing are done. Consider: by the time sensory inputs reach the brain, they are all in the form of spikes transmitted by neurons, and all outputs are sent in the same form, regardless of their ultimate effect.
Further, evidence of plasticity in the cortex is abundant: in cases of damage, the brain seems to be able to re-wire itself to transfer a function to a different region of the cortex. In a long (70 page) chapter, the author presents a sketchy model of what such a common cortical algorithm might be, and how it may be implemented within the known physiological structure of the cortex. The author is a founder of Palm Computing and Handspring (which was subsequently acquired by Palm). He subsequently founded the Redwood Neuroscience Institute, which has now become part of the Helen Wills Neuroscience Institute at the University of California, Berkeley, and in March of 2005 founded Numenta, Inc. with the goal of developing computer memory systems based on the model of the neocortex presented in this book. Some academic scientists may sniff at the pretensions of a (very successful) entrepreneur diving into their speciality and trying to figure out how the brain works at a high level. But, hey, nobody else seems to be doing it—the computer scientists are hacking away at their monster programs and parallel machines, the brain community seems stuck on functional imaging (like trying to reverse-engineer a microprocessor in the nineteenth century by looking at its gross chemical and electrical properties), and the neuron experts are off dissecting squid: none of these seem likely to lead to an understanding (there's that word again!) of what's actually going on inside their own tenured, taxpayer-funded skulls. There is undoubtedly much that is wrong in the author's speculations, but then he admits that from the outset and, admirably, presents an appendix containing eleven testable predictions, each of which can falsify all or part of his theory. 
I've long suspected that intelligence has more to do with memory than computation, so I'll confess to being predisposed toward the arguments presented here, but I'd be surprised if any reader didn't find themselves thinking about their own thought processes in a different way after reading this book. You won't find the answers to the mysteries of the brain here, but at least you'll discover many of the questions worth pondering, and perhaps an idea or two worth exploring with the vast computing power at the disposal of individuals today and the boundless resources of data in all forms available on the Internet.

Sunday, December 24, 2006

Tom Swift in Captivity Now Online

The thirteenth episode in the Tom Swift adventures, Tom Swift in Captivity, is now posted in the Tom Swift and His Pocket Library collection. As usual, HTML, PDF, PDA eReader, and plain ASCII text editions suitable for reading off- or online are available. In this adventure, Tom, Ned Newton, Eradicate Sampson, and Wakefield (“bless my miscellany”) Damon are off to the Amazon in search of big game of a singular nature and end up making the acquaintance of a new member of the Swift household who will figure in subsequent adventures. As usual, I have corrected typographical and formatting errors I spotted in this edition of the text, but have deferred close proofreading until I get around to reading the book on my PDA. Consequently, corrections from eagle-eyed readers are more than welcome. Please note the comments in the main Pocket Library page before reporting archaic spelling (for example, “gasolene”) as an error.

Friday, December 22, 2006

Reading List: The Captive Mind

- Milosz, Czeslaw. The Captive Mind. New York: Vintage, [1951, 1953, 1981] 1990. ISBN 0-679-72856-2.

- This book is an illuminating exploration of life in a totalitarian society, written by a poet and acute observer of humanity who lived under two of the tyrannies of the twentieth century and briefly served one of them. The author was born in Lithuania in 1911 and studied at the university in Vilnius, a city he describes (p. 135) as “ruled in turn by the Russians, Germans, Lithuanians, Poles, again the Lithuanians, again the Germans, and again the Russians”—and now again the Lithuanians. An ethnic Pole, he settled in Warsaw after graduation, and witnessed the partition of Poland between Nazi Germany and the Soviet Union at the outbreak of World War II, conquest and occupation by Germany, “liberation” by the Red Army, and the imposition of Stalinist rule under the tutelage of Moscow. After working with the underground press during the war, the author initially supported the “people's government”, even serving as a cultural attaché at the Polish embassies in Washington and Paris. As Stalinist terror descended upon Poland and the rigid dialectical “Method” was imposed upon intellectual life, he saw tyranny ascendant once again and chose exile in the West, initially in Paris and finally the U.S., where he became a professor at the University of California at Berkeley in 1961—imagine, an anti-communist at Berkeley! In this book, he explores the various ways in which the human soul comes to terms with a regime which denies its very existence. Four long chapters explore the careers of four Polish writers he denotes as “Alpha” through “Delta” and the choices they made when faced with a system which offered them substantial material rewards in return for conformity with a rigid system which put them at the service of the State, working toward ends prescribed by the “Center” (Moscow). 
He likens acceptance of this bargain to swallowing a mythical happiness pill, which, by eliminating the irritations of creativity, scepticism, and morality, guarantees those who take it a tranquil place in a well-ordered society. In a powerful chapter titled “Ketman”—a Persian word denoting fervent protestations of faith by nonbelievers, not only in the interest of self-preservation, but of feeling superior to those they so easily deceive—Milosz describes how an entire population can become actors who feign belief in an ideology and pretend to believe the earnest affirmations of orthodoxy on the part of others while sharing scorn for the few true believers. The author received the 1980 Nobel Prize in Literature.

Monday, December 18, 2006

Linux: Remote backup bandwidth (dump/tar) to LTO3 tape over SSH depends critically upon block size

In getting ready to leave Fourmilab unattended while I'm out of town during the holiday season, I habitually make a site-wide backup tape which I take with me, “just in case”—hey, the first computer I ever used was at Case! This was the first time I've made such backups since installing the new backup configuration, so I expected there would be things to learn in the process, and indeed there were. While routine backups at Fourmilab are done with Bacula, for these cataclysm hedge offsite backups I use traditional utilities such as dump and tar because, in a post-apocalyptic scenario, it will be easier to do a “bare metal” restore from them. (The folks who consider their RAID arrays adequate backup have, in my opinion, little imagination and even less experience with Really Bad Days; not only do you want complete offsite backups on media with a 30 year lifetime, you want copies of them stored on at least three continents in case of the occasional bad asteroid day.) Anyway, this was the first time I went to make such a backup to the LTO Ultrium 3 drive on the new in-house server. I used the same parameters I'd used before to back up over the network to the SDLT drive on the Sun server it replaced, and I was dismayed to discover that the transfer rate was a fraction of what I was used to, notwithstanding the fact that the LTO3 drive is much faster than the SDLT drive on the former server. The only significant difference, apart from the tape drive, is that remote tape access to the old server went over rcmd, while access to the new server is via ssh. Before, I had used a block size in the dump of 64 Kb, as this was said to be universally portable. With this specification, I saw transfer rates on the order of 1300 kilobytes per second, at which rate it would take sixteen hours to back up my development machine. 
Given that my departure time was only a few hours more than that from the time I started the backup and that I had another backup to append to the tape, this was bad. According to a cursory Web search, it appears that the most common recommendation for an optimum block size for LTO3 tape is 256 Kb. Now, if you read the manual page for dump, there is a bit of fear that things may go horribly awry if you set the block size greater than 64 Kb, but I decided to give it a try and see what happened. What happened was glorious! The entire 40 Gb dump completed at an average rate of 4817 Kb/sec; this isn't as fast as Bacula, but considering that everything is going through an encrypted SSH pipeline it's entirely reasonable. The actual command I used to back up a typical file system is like this:

/sbin/dump -0u -b 256 -f server.ratburger.org:/dev/nst0 \
    /dev/hda7

Should the need arise to restore from such a backup, it is essential that you specify the “-b 256” option on the command line so that the block size of the dump will be honoured. I verified that I could restore files backed up with these parameters before using them to save other file systems.
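To put the two transfer rates quoted above in perspective, here is a minimal arithmetic sketch. It assumes that “40 Gb” means 40 × 10⁹ bytes and that the rates are in units of 1000 bytes per second; neither assumption is stated in the text, and the function and constant names are purely illustrative.

```python
def transfer_hours(size_kb: float, rate_kb_per_s: float) -> float:
    """Hours needed to move size_kb kilobytes at rate_kb_per_s kilobytes per second."""
    return size_kb / rate_kb_per_s / 3600.0


# Assumption: the 40 Gb dump is 40e9 bytes, i.e. 40,000,000 kilobytes of 1000 bytes.
DUMP_KB = 40 * 1_000_000

SLOW_RATE = 1300  # Kb/sec observed with the 64 Kb block size
FAST_RATE = 4817  # Kb/sec observed with the 256 Kb block size

if __name__ == "__main__":
    print(f"64 Kb blocks:  {transfer_hours(DUMP_KB, SLOW_RATE):.1f} hours")
    print(f"256 Kb blocks: {transfer_hours(DUMP_KB, FAST_RATE):.1f} hours")
    print(f"speed-up:      {FAST_RATE / SLOW_RATE:.1f}x")
```

Under those assumptions the same 40 Gb file system that took a little over two hours with the larger block size would have needed about eight and a half hours at the old rate.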

Commands like this can be used to back up Unix file systems, but legacy file systems such as VFAT and NTFS partitions on dual-boot machines may have to be backed up using tar. To back up an NTFS file system on my development machine which I mount with Linux-NTFS, I use the following commands:

cd /c
tar -c --rsh-command /usr/bin/ssh \
    -f server.ratburger.org:/dev/nst0 -b 512 .
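The two commands above pass different numbers to “-b”, which may look inconsistent. As I read the manual pages, dump's “-b” is the number of kilobytes per record while GNU tar's “-b” is a blocking factor counted in 512-byte blocks, so both settings should describe the same record size on tape. The following sketch simply does that arithmetic; the helper names are hypothetical.

```python
DUMP_UNIT_BYTES = 1024  # dump -b: kilobytes per dump record (per dump(8), as I understand it)
TAR_UNIT_BYTES = 512    # tar -b: blocking factor in 512-byte blocks (per the GNU tar manual)


def dump_record_bytes(b_option: int) -> int:
    """Record size in bytes for a given dump -b value."""
    return b_option * DUMP_UNIT_BYTES


def tar_record_bytes(b_option: int) -> int:
    """Record size in bytes for a given tar -b blocking factor."""
    return b_option * TAR_UNIT_BYTES


if __name__ == "__main__":
    print("dump -b 256:", dump_record_bytes(256), "bytes")
    print("tar  -b 512:", tar_record_bytes(512), "bytes")
    print("dump -b 64: ", dump_record_bytes(64), "bytes")
```

If that reading of the manuals is right, “dump -b 256” and “tar -b 512” both write 256 Kb records, four times the size of the 64 Kb records used previously.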

Thursday, December 14, 2006

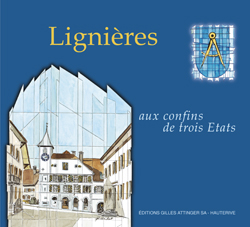

Ship It: Lignières History Book Published

To my mind there are few moments as satisfying as when, at the conclusion of a protracted, difficult, and exasperating development project, you utter the magic words “ship it” and then step back to await the reaction of the customers. After four years of organising a foundation, fundraising, identifying source documents, recruiting more than thirty authors, photographing everything from a forged Papal Bull (“Papal bulls**t?”) dating from A.D. 1179 to the site where a German bomber crashed in 1940 after violating Swiss airspace and being shot down by a Swiss Army Messerschmitt 109, today the first history published since 1801 of Lignières, Fourmilab's home village, shipped to those who pre-ordered it. Lignières, un village aux confins de trois Etats (ISBN 2-88256-166-X) grew, as many software development projects do, from a modest 160-odd pages to a total of 220, with 168 illustrations, 74 in colour. A DVD Video/ROM hybrid disc is attached to the back cover: if placed in a DVD Video player, a variety of films, both historical and produced expressly for this publication (including my own Les Quatre Saisons) can be viewed. If the disc is loaded in a computer DVD-ROM drive, a large collection of original source documents for the book can be consulted: this is a history book which lets you “look under the hood” and examine the sources, many from obscure archives, which the historians used in writing the book.

enseigne of the Hôtel de Commune, which is visible to the left to the projection screen. You can click the close-up image at the left for an enlargement which shows the sign in all its re-gilded glory. Next spring it will take its place in the centre of the village where you can see it, almost a century before (look to the left of the clock tower at the level of the first storey windows), in our Lignières: Then and Now pages—next year the “now” photo will once again include this Lignières landmark!

If you type the ISBN number for this book into an Amazon search box, even at Amazon.fr, it will come up blank: Swiss high-end publishers are not indexed by Amazon, even though seemingly every vanity press and self-published screed in the U.S. is included in their database—go figure. If you're in Switzerland, it's easy to order this book; just visit the page and click “[Commander]”. If you're not, I'd suggest you fill out the order form and provide them contact information to arrange payment if you don't have access to the frighteningly efficient Swiss electronic payment system—perhaps a postal money order will work.

Wednesday, December 13, 2006

Reading List: Fab

- Gershenfeld, Neil. Fab. New York: Basic Books, 2005. ISBN 0-465-02745-8.

- Once, every decade or so, you encounter a book which empowers you in ways you never imagined before you opened it, and ultimately changes your life. This is one of those books. I am who I am (not to sound too much like Popeye) largely because in the fall of 1967 I happened to read Daniel McCracken's FORTRAN book and realised that there was nothing complicated at all about programming computers—it was a vocational skill that anybody could learn, much like operating a machine tool. (Of course, as you get deeper into the craft, you discover there is a great body of theory to master, but there's much you can accomplish if you're willing to work hard and learn on the job before you tackle the more abstract aspects of the art.) But this was not only something that I could do but, more importantly, I could learn by doing—and that's how I decided to spend the rest of my professional life and I've never regretted having done so. I've never met a genuinely creative person who wished to spend a nanosecond in a classroom downloading received wisdom at dial-up modem bandwidth. In fact, I suspect the absence of such people in the general population is due to the pernicious effects of the Bismarck worker-bee indoctrination to which the youth of most “developed” societies are subjected today.

We all know that, some day, society will pass through the nanotechnological singularity, after which we'll be eternally free, eternally young, immortal, and incalculably rich: hey—works for me! But few people realise that if the age of globalised mass production is analogous to that of mainframe computers and if the desktop nano-fabricator is equivalent to today's personal supercomputer, we're already in the equivalent of the minicomputer age of personal fabrication. Remember minicomputers? Not too large, not too small, and hence difficult to classify: too expensive for most people to buy, but within the budget of groups far smaller than the governments and large businesses who could afford mainframes.

The minicomputer age of personal fabrication is as messy as the architecture of minicomputers of four decades before: there are lots of different approaches, standards, interfaces, all mutually incompatible: isn't innovation wonderful? Well, in this sense no! But it's here, now. For a sum in the tens of thousands of U.S. dollars, it is now possible to equip a “Fab Lab” which can make “almost anything”. Such a lab can fit into a modestly sized room, and, provided with electrical power and an Internet connection, can empower whoever crosses its threshold to create whatever their imagination can conceive. In just a few minutes, their dream can become tangible hardware in the real world.

The personal computer revolution empowered almost anybody (at least in the developed world) to create whatever information processing technology their minds could imagine, on their own, or in collaboration with others. The Internet expanded the scope of this collaboration and connectivity around the globe: people who have never met one another are now working together to create software which will be used by people who have never met the authors to everybody's mutual benefit. Well, software is cool, but imagine if this extended to stuff. That's what Fab is about. SourceForge currently hosts more than 135,500 software development projects—imagine what will happen when StuffForge.net (the name is still available, as I type this sentence!) hosts millions of OpenStuff things you can download to your local Fab Lab, make, and incorporate into inventions of your own imagination. This is the grand roll-back of the industrial revolution, the negation of globalisation: individuals, all around the world, creating for themselves products tailored to their own personal needs and those of their communities, drawing upon the freely shared wisdom and experience of their peers around the globe. What a beautiful world it will be!

Cynics will say, “Sure, it can work at MIT—you have one of the most talented student bodies on the planet, supported by a faculty which excels in almost every discipline, and an industrial plant with bleeding edge fabrication technologies of all kinds.” Well, yes, it works there. But the most inspirational thing about this book is that it seems to work everywhere: not just at MIT but also in South Boston, rural India, Norway far north of the Arctic Circle, Ghana, and Costa Rica—build it and they will make. At times the author seems unduly amazed that folks without formal education and the advantages of a student at MIT can imagine, design, fabricate, and apply a solution to a problem in their own lives. But we're human beings—tool-making primates who've prospered by figuring things out and finding ways to make our lives easier by building tools. Is it so surprising that putting the most modern tools into the hands of people who daily confront the most fundamental problems of existence (access to clean water, food, energy, and information) will yield innovations which surprise even professors at MIT?

This book is so great, and so inspiring, that I will give the author a pass on his clueless attack on AutoCAD's (never attributed) DXF file format on pp. 46–47, noting simply that the answer to why it's called “DXF” is that Lotus had already used “DIF” for their spreadsheet interchange files and we didn't want to create confusion with their file format, and that the reason there's more than one code for an X co-ordinate is that many geometrical objects require more than one X co-ordinate to define them (well, duh).

The author also totally gets what I've been talking about since Unicard and even before that as “Gizmos”, that every single device in the world, and every button on every device will eventually have its own (IPv6) Internet address and be able to interact with every other such object in every way that makes sense. I envisioned MIDI networks as the cheapest way to implement this bottom-feeder light-switch to light-bulb network; the author, a decade later, opts for a PCM “Internet 0”—works for me. The medium doesn't matter; it's that the message makes it end to end so cheaply that you can ignore the cost of the interconnection that ultimately matters.

The author closes the book with the invitation:

Finally, demand for fab labs as a research project, as a collection of capabilities, as a network of facilities, and even as a technological empowerment movement is growing beyond what can be handled by the initial collection of people and institutional partners that were involved in launching them. I/we welcome your thoughts on, and participation in, shaping their future operational, organizational, and technological form.

Well, I am but a humble programmer, but here's how I'd go about it. First of all, I'd create a “Fabrication Trailer” which could visit every community in the United States, Canada, and Mexico; I'd send it out on the road in every MIT vacation season to preach the evangel of “make” to every community it visited. In, say, one in eighty of such communities, one would find a person who had dreamed of this happening in his or her lifetime and who was empowered by seeing it happen; provide them a template with which, by writing a cheque, they can replicate the fab, and watch it spread. And as it spreads, and creates wealth, it will spawn other Fab Labs. Then, after it's perfected in a couple of hundred North American copies, design a Fab Lab that fits into an ocean cargo container and can be shipped anywhere. If there isn't electricity and Internet connectivity, also deliver the diesel generator or solar panels and satellite dish. Drop these into places where they're most needed, along with a wonk who can bootstrap the locals into doing things with these tools which astound even those who created them. Humans are clever, tool-making primates; give us the tools to realise what we imagine and then stand back and watch what happens! The legacy media bombard us with conflict, murder, and mayhem. But the future is about creation and construction. What does An Army of Davids do when they turn their creativity and ingenuity toward creating solutions to problems perceived and addressed by individuals? Why, they'll call it a renaissance! And that's exactly what it will be. For more information, visit the Web site of The Center for Bits and Atoms at MIT, which the author directs. Fab Central provides links to Fab Labs around the world, the machines they use, and the open source software tools you can download and start using today.

Tuesday, December 12, 2006

Applying Check Point FireWall-1 Hotfixes on a Nokia IP265 Network Appliance

(Note: The information in this item is so specialised it is probable that not a single regular reader of this chronicle will find it of interest. Why post it then? Because every time I publish such an item I receive feedback from people who found it with a search engine who write to thank me for pointing them to the solution of the obscure problem in question. That's why I try to give such items long titles with keywords to direct those searching for such information to them.)

Last year at about this time Fourmilab's firewall was upgraded to dual redundant diskless Nokia IP265 network appliances which run Check Point FireWall-1 software. The two Nokia machines are configured as an active/backup high availability cluster using the Virtual Router Redundancy Protocol (VRRP) so that if the active firewall fails, the backup, which constantly monitors its status and mirrors connection information, can take over without even dropping active TCP connections. All of this worked pretty much as expected, but unfortunately I soon discovered a horrific bungle in the VRRP fail-over implementation. When the active firewall went down, the backup took over, then relinquished control back to the primary unit once it came back up: all well and good. But if you rebooted the backup, the active firewall would cease to forward traffic until the backup returned to service! This meant your entire “high availability” cluster and access to all of the machines behind it was vulnerable to the failure of the backup firewall—what a mess! After researching this problem for some time, I discovered that Check Point had issued a “hot fix” to correct a problem in which the reboot of a VRRP backup machine would send a bogus gratuitous ARP packet which “could block cluster connectivity”; that certainly sounded like the problem I was having.
Check Point periodically releases what they call “Hotfix Accumulators” which are like Sun's omnibus “rollup patches” for Solaris: a large collection of independent patches said to be mutually compatible which, together, constitute a minor release of the software to which they pertain. I downloaded the current such package, which was released in June of 2006 (although its documentation was most recently revised in mid-September 2006) and proceeded to try to install it first on the backup firewall, allowing the primary to remain in production so as not to disrupt access to the site. Because the Nokia IP265 is a flash-based diskless machine, its file system structure is rather curious. It runs an operating system called IPSO, which is based on FreeBSD, and the output of the “df -k” command is as follows:

    xl5[admin]# df -k
    Filesystem  1K-blocks    Used   Avail Capacity  Mounted on
    /dev/wd0f      127151   40525   76454    35%    /
    v9fs           120224   41752   78472    35%    /image/IPSO-3.8.1-BUILD028-12.02.2004-222502-1518/rfs
    /dev/wd0a       31775      33   29200     0%    /config
    /dev/wd0h      317903  154047  138424    53%    /preserve
    procfs              4       4       0   100%    /proc
    v9fs            88712   10240   78472    12%    /var
    mfs:83           7607       0    6998     0%    /var/tmp2/upgrade
    v9fs           174448   95976   78472    55%    /opt

The file systems on the various partitions of “/dev/wd0” are stored in the non-volatile flash memory. At boot time, they are decompressed and copied into the RAM file systems from which the system runs; changes to the “v9fs” file systems are lost at the next reboot. The Hotfix Accumulator I wished to install was 22.8 Mb GZIP compressed. I decided to copy it to the volatile “/var” file system, with about 80 Mb of free space, for installation. I copied it, decompressed and extracted the archive, and still had what I thought was plenty of free space on /var. How wrong I was.
The process of installing one of these updates uses a huge amount of intermediate storage, and the installation script does not bother to check whether there's enough space to complete the task before commencing it. Worse, when it does exhaust the free space on the file system, it just keeps blundering on, truncating files and destroying information, and then, as a final boot in the system administrator's face, reports that everything has completed successfully. When you reboot the firewall after this process, you begin to appreciate the extent of the damage. Essentially nothing works; your installation consists of about an equal mix of old, new, and truncated files, and since the backup files were lost in the disc full incident, you cannot even reverse the process to restore the status quo ante. After surveying the wreckage, I decided the most expeditious course would be to restore the entire contents of the /preserve/opt/packages/installed directory from the most recent backup. (If you haven't previously installed any patches, you can restore it from the “IPSO Wrapper” for the software version you're using.) After restoring the contents of this directory, I was able to reboot the backup firewall and have it resume its backup rôle running the old version.

For the next attempt, I decided to place the update files on the /preserve file system, which is the largest on the machine. Copying them there filled this file system to the 50% level from a starting point of 37%, but that still left far more free space than on /var. The first attempt to install the patch failed, claiming that it was already installed. The first failed attempt had corrupted the registry (shudder) and so I had to edit /preserve/var/opt/CPshared-R55p/registry/HKLM_registry.data and remove the two instances of:

: (HotFixes
:HOTFIX_HFA_R55P_08 (1)
)

left there by the failed installation before the update would apply successfully. During the installation I kept an eye on free space, and at the high-water mark /preserve reached the white-knuckle level of 87% of capacity. But after the installation was complete, it dropped back to just a few percent above where it started.
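The hand edit described above could also be scripted. What follows is only a minimal sketch in Python, meant to be run on an administrator's workstation against a copy of the registry file, never on the live firewall. It assumes the stale stanza looks exactly like the three lines quoted above; the layout of the rest of HKLM_registry.data is not documented here, so the function name `strip_failed_hotfix` and the sample text are purely illustrative.

```python
import re

# Matches a HotFixes stanza that contains ONLY the entry left behind by the
# failed installation. A stanza listing other hotfixes is deliberately not
# matched, so legitimate entries are never removed. (Assumed layout: the
# three lines quoted in the article, optionally separated by blank lines.)
STALE_STANZA = re.compile(
    r"^[ \t]*: \(HotFixes[ \t]*\n"
    r"(?:[ \t]*\n)*"
    r"[ \t]*:HOTFIX_HFA_R55P_08 \(1\)[ \t]*\n"
    r"(?:[ \t]*\n)*"
    r"[ \t]*\)[ \t]*\n?",
    re.MULTILINE,
)


def strip_failed_hotfix(text):
    """Return (cleaned_text, number_of_stanzas_removed)."""
    return STALE_STANZA.subn("", text)


# Hypothetical fragment, invented for illustration only.
SAMPLE = (
    "(\n"
    ": (HotFixes\n"
    ":HOTFIX_HFA_R55P_08 (1)\n"
    ")\n"
    ": (Other\n"
    ")\n"
    ": (HotFixes\n"
    ":HOTFIX_HFA_R55P_08 (1)\n"
    ")\n"
    ")\n"
)

if __name__ == "__main__":
    cleaned, removed = strip_failed_hotfix(SAMPLE)
    print(removed, "stanza(s) removed")
    print(cleaned)
```

Returning the count lets you confirm that exactly two instances were removed, as in the account above, before copying the edited file back.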

After the installation was complete, I rebooted the backup firewall, halted the primary, and allowed the new version of the software on the backup to enter production. After a day with no problems, I repeated the process to install the update on the primary, restoring the site to a fully redundant configuration. After all of this I was almost afraid to try the obvious test of rebooting the backup to see if the problem which launched me on this adventure had, in fact, been fixed; I'm not sure I could have maintained my composure if all of this had been for nought. But, after all, system administrators are known more for their ill-tempered meat cleavers than even-tempered composure, so I went ahead and rebooted the backup and, lo and behold, the problem had indeed been fixed (cue choirs of angels singing hallelujahs).

The lesson to take away from this is that when you're installing Check Point Hotfix Accumulators on a Nokia IP265, always place the update directory on the /preserve file system, not one of the others with less capacity. And, of course, be sure you have a complete, current backup of the entire machine (not just the configuration files backed up by Nokia Voyager, which do not include the critical package files modified by the Hotfix installation) before attempting the installation.
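The pre-flight check the installation script omits can be approximated by hand. Here is a minimal sketch in Python, assuming you paste captured “df -k” output into a script on another machine; the function names (`parse_df`, `roomiest`, `has_headroom`) are made up for illustration, and the space requirement is something you must estimate yourself. The account above suggests peak usage on the order of 150 MB for a 22.8 MB archive, but that is one observation, not a specification.

```python
# Output of `df -k` as shown in this item; any other capture would do.
DF_OUTPUT = """\
Filesystem 1K-blocks Used Avail Capacity Mounted on
/dev/wd0f 127151 40525 76454 35% /
v9fs 120224 41752 78472 35% /image/IPSO-3.8.1-BUILD028-12.02.2004-222502-1518/rfs
/dev/wd0a 31775 33 29200 0% /config
/dev/wd0h 317903 154047 138424 53% /preserve
procfs 4 4 0 100% /proc
v9fs 88712 10240 78472 12% /var
mfs:83 7607 0 6998 0% /var/tmp2/upgrade
v9fs 174448 95976 78472 55% /opt
"""


def parse_df(text):
    """Parse `df -k` output into {mount_point: (total_kb, used_kb, avail_kb)}."""
    table = {}
    for line in text.strip().splitlines():
        fields = line.split()
        # Skip the header, shell prompts, and anything else that does not
        # have the six expected columns with a numeric size.
        if len(fields) != 6 or not fields[1].isdigit():
            continue
        _, total, used, avail, _, mount = fields
        table[mount] = (int(total), int(used), int(avail))
    return table


def roomiest(table):
    """Mount point with the most available space."""
    return max(table, key=lambda mount: table[mount][2])


def has_headroom(table, mount, required_kb):
    """True if `mount` has at least `required_kb` kilobytes available."""
    return table[mount][2] >= required_kb


if __name__ == "__main__":
    table = parse_df(DF_OUTPUT)
    print("Most free space:", roomiest(table))
    # 150000 KB is an assumed requirement taken from the experience above.
    print("/var adequate?", has_headroom(table, "/var", 150000))
```

With the figures shown, the sketch picks /preserve as the roomiest file system and rejects /var, which agrees with the lesson above.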

Sunday, December 10, 2006

Reading List: Mercury

- Bova, Ben. Mercury. New York: Tor, 2005. ISBN 0-7653-4314-2.

- I hadn't read anything by Ben Bova in years, certainly not since 1990. I always used to think of him as a journeyman science fiction writer, cranking out enjoyable stories mostly toward the hard end of the science fiction spectrum, but not a grand master of the calibre of, say, Heinlein, Clarke, and Niven. His stint as editor of Analog was admirable, proving himself a worthy successor to John W. Campbell, who developed the authors of the golden age of science fiction. Bova is also a prolific essayist on science, writing, and other topics, and his January 1965 Analog article “It's Done with Mirrors” with William F. Dawson may have been one of the earliest proposals of a multiply-connected small universe cosmological model.

I don't read a lot of fiction these days, and tend to lose track of authors, so when I came across this book in an airport departure lounge and noticed it was published in 2005, my first reaction was, “Gosh, is he still writing?” (Bova was born in 1932, and his first novel was published in 1959.) The U.K. paperback edition was featured in a “buy one, get one free” bin, so how could I resist?

I ought to strengthen my resistance. This novel is so execrably bad that several times in the process of reading it I was tempted to rip it to bits and burn them to ensure nobody else would have to suffer the experience. There is nothing whatsoever redeeming about this book. The plot is a conventional love triangle/revenge tale. The only thing that makes it science fiction at all is that it's set in the future and involves bases on Mercury, space elevators, and asteroid mining, but these are just backdrops for a story which could take place anywhere. Notwithstanding the title, which places it within the author's “Grand Tour” series, only about half of the story takes place on Mercury, whose particulars play only a small part.

Did I mention the writing? No, I guess I was trying to forget it. Each character, even throw-away figures who appear only in a single chapter, is introduced by a little sketch which reads like something produced by filling out a form. For example,

Jacqueline Wexler was such an administrator. Gracious and charming in public, accommodating and willing to compromise at meetings, she nevertheless had the steel-hard will and sharp intellect to drive the ICU's ramshackle collection of egos toward goals that she herself selected. Widely known as ‘Attila the Honey,’ Wexler was all sweetness and smiles on the outside, and ruthless determination within.

After spending a third of page 70 on this paragraph, which makes my teeth ache just to re-read, the formidable Ms. Wexler walks off stage before the end of p. 71, never to re-appear. But fear not (or fear), there are many, many more such paragraphs in subsequent pages.

An Earth-based space elevator, a science fiction staple, is central to the plot, and here Bova bungles the elementary science of such a structure in a laugh-out-loud chapter in which the three principal characters ride the elevator to a platform located at the low Earth orbit altitude of 500 kilometres. Upon arrival there, they find themselves weightless, while in reality the force of gravity would be only modestly less than on the surface of the Earth (about 86 percent of it)! Objects in orbit are weightless because their horizontal velocity cancels Earth's gravity, but a station at 500 kilometres is travelling only at the speed of the Earth's rotation, which is about 1/15 of orbital velocity. The only place on a space elevator where weightlessness would be experienced is the portion where orbital velocity equals Earth's rotation rate, and that is at the anchor point at geosynchronous altitude. This is not a small detail; it is central to the physics, engineering, and economics of space elevators, and it figured prominently in Arthur C. Clarke's 1979 novel The Fountains of Paradise which is alluded to here on p. 140.

Nor does Bova restrain himself from what is becoming a science fiction cliché of the first magnitude: “nano-magic”. This is my term for using the “nano” prefix the way bad fantasy authors use “magic”. For example, Lord Hacksalot draws his sword and cuts down a mighty oak tree with a single blow, smashing the wall of the evil prince's castle. The editor says, “Look, you can't cut down an oak tree with a single swing of a sword.” Author: “But it's a magic sword.” On p. 258 the principal character is traversing a tether between two parts of a ship in the asteroid belt which, for some reason, the author believes is filled with deadly radiation. “With nothing protecting him except the flimsy…suit, Bracknell felt like a turkey wrapped in a plastic bag inside a microwave oven. He knew that high-energy radiation was sleeting down on him from the pale, distant Sun and still-more-distant stars. He hoped that suit's radiation protection was as good as the manufacturer claimed.” Imaginary editor (who clearly never read this manuscript): “But the only thing which can shield you from heavy primary cosmic rays is mass, and lots of it. No ‘flimsy suit’ however it's made, can protect you against iron nuclei incoming near the speed of light.” Author: “But it's a nano suit!”

Not only is the science wrong, the fiction is equally lame. Characters simply don't behave as people do in the real world, nor are events and their consequences plausible. We are expected to believe that the causes of and blame for a technological catastrophe which killed millions would be left to be decided by a criminal trial of a single individual in Ecuador without any independent investigation. Or that a conspiracy to cause said disaster involving a Japanese mega-corporation, two mass religious movements, rogue nanotechnologists, and numerous others could be organised, executed, and subsequently kept secret for a decade. The dénouement hinges on a coincidence so fantastically improbable that the plausibility of the plot would be improved were the direct intervention of God Almighty posited instead.

Whatever became of Ben Bova, whose science was scientific and whose fiction was fun to read? It would be uncharitable to attribute this waste of ink and paper to age, as many science fictioneers with far more years on the clock have penned genuine classics. But look at this!
Researching the author's biography, I discovered that in 1996, at the age of 64, he received a doctorate in education from California Coast University, a “distance learning” institution. Now, remember back when you were in engineering school struggling with thermogoddamics and fluid mechanics how you regarded the student body of the Ed school? Well, I always assumed it was a selection effect—those who can do, and those who can't…anyway, it never occurred to me that somewhere in that dark, lowering building they had a nano brain mushifier which turned the earnest students who wished to dedicate their careers to educating the next generation into the cognitively challenged classes they graduated. I used to look forward to reading anything by Ben Bova; I shall, however, forgo further works by the present Doctor of Education.
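For readers who want to check the space elevator arithmetic in this review, here is a rough sketch in Python. It assumes a tower standing on the equator and co-rotating with the Earth, and uses standard published values for the Earth's gravitational parameter, equatorial radius, and sidereal day; the function names are mine, and none of this comes from the novel.

```python
import math

GM = 3.986004418e14        # Earth's gravitational parameter, m^3/s^2
R_EQ = 6.378137e6          # Earth's equatorial radius, m
SIDEREAL_DAY = 86164.0905  # one rotation of the Earth, s
OMEGA = 2 * math.pi / SIDEREAL_DAY  # rotation rate, rad/s


def apparent_gravity(altitude_m):
    """Net downward acceleration felt on an equatorial tower at this altitude.

    Gravitational pull minus the centrifugal term from co-rotating
    with the Earth.
    """
    r = R_EQ + altitude_m
    return GM / r**2 - OMEGA**2 * r


def speed_ratio(altitude_m):
    """Co-rotation speed divided by circular orbital speed at this altitude."""
    r = R_EQ + altitude_m
    return (OMEGA * r) / math.sqrt(GM / r)


def geosynchronous_altitude():
    """Altitude where co-rotation speed equals orbital speed, in metres."""
    return (GM / OMEGA**2) ** (1.0 / 3.0) - R_EQ


if __name__ == "__main__":
    ratio = apparent_gravity(500e3) / apparent_gravity(0.0)
    print("Weight at 500 km relative to surface: %.2f" % ratio)
    print("Co-rotation / orbital speed at 500 km: %.3f" % speed_ratio(500e3))
    print("Geosynchronous altitude: %.0f km" % (geosynchronous_altitude() / 1000))
```

Under these assumptions a passenger at 500 kilometres still feels most of surface weight, and the apparent weight only falls to zero near 35,786 kilometres, which is the point of the objection above.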

Tuesday, December 5, 2006

Computing: Feedback Form Updated

I have just posted version 1.1 of the Fourmilab Feedback Form, which has been in production test since last week. Since no problems have been noted, either by users sending feedback or from scrutiny of the Web server error log, I'm releasing the source code for folks who wish to install it on their own sites, along with the corresponding documentation update.

Although the documentation for the feedback form was updated to XHTML 1.0 some time ago, the form itself, its confirmation and error messages, and (if configured) the HTML mail it sent remained sloppy old HTML 3.2. The new version generates XHTML 1.0 (Transitional) for all of these documents, and all have been validated for compliance by the W3C Markup Validator.

In addition, a few potential “HTML injection” vulnerabilities have been corrected. These are circumstances in which a clever (or in some cases, brow-ridged knuckle-walking obvious) use of HTML mark-up in user-specified input could pass through to the HTML returned to the user or sent in HTML mail to the designated recipient of the feedback. This can create what is called a “cross-site scripting” vulnerability, but, since in this case users can only send the injected HTML back to themselves or the unspecified address to which the feedback is sent, the potential damage was limited compared to the general case. Still, 'twere better fixed, and 'tis this very day.

Although the documentation was already in XHTML 1.0, it now uses CSS for more of the presentation specifications, and Unicode entities for special characters such as opening and closing quotes and dashes.

Sunday, December 3, 2006

Wretched Excess: Blue LED Christmas Tree

I'm on record as saying, several years ago, that the high intensity blue LED is the design cliché of the oughties. No matter where you look, there's one of these short-wavelength eyes glaring at you: from your Web server, firewall, television, or toaster; you may sleep—but these unblinking LEDs do not.

A couple of years ago I encouraged folks to migrate from irritating series-string incandescent Christmas lights to the coruscating, actinic, partially nuclear-powered lights which grace Fourmilab's windows from Advent through the New Year. But little did I imagine it would come to this….

This twelve metre Christmas tree outside a shopping centre down the hill from Fourmilab is decorated exclusively with high-intensity blue LEDs. Note how directional they are—the few which happen to be pointed directly at the camera appear much more intense than those just a few degrees off axis. This is apparent with my own LED strings, but I hope to mitigate the effect in this year's display by dipping the LEDs in beer and Epsom salt (really!).

And I will never, ever, use blue LEDs, although I reserve the right to deploy high intensity red and green emitters in creative ways along with the traditional white.

Saturday, December 2, 2006

Safetyland: New Frontiers in Cosmetics

CNN.com recently posted an article from Reuters entitled “Americans: 60's are the new middle age”. According to the last two paragraphs:

French beauty group Clarins will launch in January what it says is the world's first spray to protect skin from the electromagnetic radiation created by mobile phones and electronic devices such as laptops. It says the spray contains molecules derived from microorganisms living near undersea volcanoes and from plants which survive in extreme conditions such as alongside motorways and in Siberia.

Wow, what a concept. I wonder if I can beat them to the filing window with a patent application on conductive hair-spray which eliminates the need to wear a tin-foil hat!

Friday, December 1, 2006

Reading List: The Plot Against America

- Roth, Philip. The Plot Against America. New York: Vintage, 2004. ISBN 1-4000-7949-7.

- Pulitzer Prize-winning mainstream novelist Philip Roth turns to alternative history in this novel, which also falls into the genre Rudy Rucker pioneered and named “transreal”: autobiographical fiction, in which the author (or a character clearly based upon him) plays a major part in the story. Here, the story is told in the first person by the author, as a reminiscence of his boyhood in the early 1940s in Newark, New Jersey. In this timeline, however, after a deadlocked convention, the Republican party chooses Charles Lindbergh as its 1940 presidential candidate who, running on an isolationist platform of “Vote for Lindbergh or vote for war”, defeats FDR's bid for a third term in a landslide.

After taking office, Lindbergh's tilt toward the Axis becomes increasingly evident. He appoints the virulently anti-Semitic Henry Ford as Secretary of the Interior, flies to Iceland to sign a pact with Hitler, and concludes a treaty with Japan which accepts all its Asian conquests so far. Further, he cuts off all assistance to Britain and the USSR. On the domestic front, his Office of American Absorption begins encouraging “urban” children (almost all of whom happen to be Jewish) to spend their summers on farms in the “heartland” imbibing “American values”, and later escalates to “encouraging” the migration of entire families (who happen to be Jewish) to rural areas.

All of this, and its many consequences, ranging from trivial to tragic, are seen through the eyes of young Philip Roth, perceived as a young boy would who was living through all of this and trying to make sense of it. A number of anecdotes have nothing to do with the alternative history story line and may be purely autobiographical. This is a “mood novel” and not remotely a thriller; the pace of the story-telling is languid, evoking the time sense and feeling of living in the present of a young boy.

As alternative history, I found a number of aspects implausible and unpersuasive.
Most exemplars of the genre choose one specific event at which the story departs from recorded history, then spin out the ramifications of that event as the story develops. For example, in 1945 by Newt Gingrich and William Forstchen, after the attack on Pearl Harbor, Germany does not declare war on the United States, which only goes to war against Japan. In Roth's book, the point of divergence is simply the nomination of Lindbergh for president. Now, in the real election of 1940, FDR defeated Wendell Willkie by 449 electoral votes to 82, with the Republican carrying only 10 of the 48 states. But here, with Lindbergh as the nominee, we're supposed to believe that FDR would lose in forty-six states, carrying only his home state of New York and squeaking to a narrow win in Maryland. This seems highly implausible to me—Lindbergh's agitation on behalf of America First made him a highly polarising figure, and his apparent sympathy for Nazi Germany (in 1938 he accepted a gold medal decorated with four swastikas from Hermann Göring in Berlin) made him anathema in much of the media. All of these negatives would have been pounded home by the Democrats, who had firm control of the House and Senate as well as the White House, and all the advantages of incumbency. Turning a 38 state landslide into a 46 state wipeout simply by changing the Republican nominee stretches suspension of disbelief to the limit, at least for this reader, especially as Americans are historically disinclined to elect “outsiders” to the presidency. If you accept this premise, then most of what follows is reasonably plausible and the descent of the country into a folksy all-American kind of fascism is artfully told. But then something very odd happens. 
As events are unfolding at their rather leisurely pace, on page 317 it's like the author realised he was about to run out of typewriter ribbon or something, and the whole thing gets wrapped up in ten pages, most of which is an unconfirmed account by one of the characters of behind-the-scenes events which may or may not explain everything, and then there's a final chapter to sort out the personal details. This left me feeling like Charlie Brown when Lucy snatches away the football; either the novel should be longer, or else the pace of the whole thing should be faster rather than this whiplash-inducing discontinuity right before the end—but who am I to give advice to somebody with a Pulitzer? A postscript provides real-world biographies of the many historical figures who appear in the novel, and the complete text of Lindbergh's September 1941 Des Moines speech to the America First Committee which documents his contemporary sentiments for readers who are unaware of this unsavoury part of his career.