Tuesday, January 27, 2009

Reading List: Hitler's War Directives

- Trevor-Roper, Hugh. Hitler's War Directives. Edinburgh: Birlinn, [1964] 2004. ISBN 978-1-84341-014-0.

- This book, originally published in 1964, contains all of Adolf Hitler's official decrees on the prosecution of the European war, from preparations for the invasion of Poland in 1939 to his final exhortation to troops on the Eastern Front of 15th April 1945 to stand in place or die. The author introduces each of the translated orders with an explanation of the situation at the time, and describes subsequent events. A fifteen page introduction explains the context of these documents and the structure of the organisations to which they were directed. For those familiar with the history of the period, there are few revelations to be gained from these documents. It is interesting to observe the extent to which Hitler was concerned with creating and substantiating the pretexts for his aggression in both the East and West, and also how when the tide turned and the Wehrmacht was rolled back from Stalingrad to Berlin, he focused purely upon tactical details, never seeming to appreciate (at least in these orders to the military, state, and party) the inexorable disaster consuming them all. As these are decrees at the highest level, they are largely composed of administrative matters and only occasionally discuss operational items; as such one's eyes may glaze over reading too much in one sitting. The bizarre parallel structure of state and party created by Hitler is evident in a series of decrees issued during the defensive phase of the war in which essentially the same orders were independently issued to state and party leaders, subordinating each to military commanders in battle areas. As the Third Reich approached collapse, the formal numbering of orders was abandoned, and senior military commanders issued orders in Hitler's name. These are included here using a system of numbering devised by the author. Appendices include lists of code names for operations, abbreviations, and people whose names appear in the orders. 
If you aren't well-acquainted with the history of World War II in Europe, you'll take away little from this work. While the author sketches the history of each order, you really need to know the big picture to understand the situation the Germans faced and what they knew at the time to comprehend the extent to which Hitler's orders evidenced cunning or denial. Still, one rarely gets the opportunity to read the actual operational orders issued during a major conflict which ended in annihilation for the person giving them and the nation which followed him, and this book provides a way to understand how ambition, delusion, and blind obedience can lead to tragic catastrophe.

Sunday, January 25, 2009

Gnome-o-gram: "A new, unprecedented era in financial history"

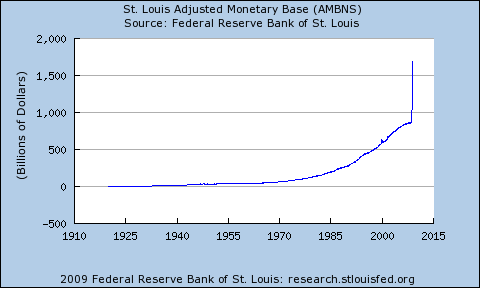

A “new era”: that's what they always say just before something terrible happens and everybody wakes up and realises they're living in the same old eternal era, but disabused of a mass delusion which has just been popped by the persistent pin of reality. The chart shows the Adjusted Monetary Base of the United States, compiled by the Federal Reserve Bank of St. Louis, from the start of 1920 through the start of December 2008. That little blip you see around 2000 is the “Y2K liquidity flood” which some saw, at the time, as a dire departure from the historical trend. But as you can see, it's been “onward and upward” from there…until the last few months when the curve goes vertical, thanks to “bailouts” and “stimulus packages”, and that's not counting the trillions still yet to be enacted. In the space of a few months, the monetary base of the United States has essentially doubled: it has grown by about as much as in all the years since the creation of the Federal Reserve System in 1913.

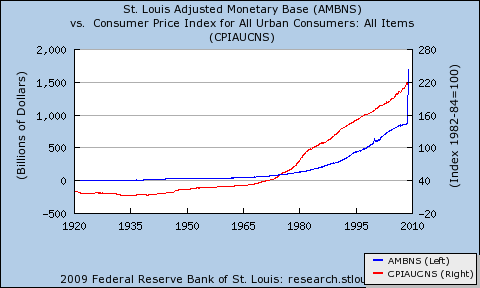

Whenever you see a vertical line on a financial chart, it's an indication that something extraordinary is happening. Such events almost always end badly. Growth in the monetary base has historically been strongly correlated with inflation as measured by the Consumer Price Index (CPI).

CPI inflation tends to lag growth in the monetary base, as it takes a while for the newly created money to “work its way through the python” and emerge as price increases. Also, in a deflationary environment, money creation may have no immediate inflationary effect, but when the pendulum swings back the other way, all of that new money will still be in the system, bidding up prices. So, the question is this: if the money creation by the Fed since its inception has caused the purchasing power of the dollar to depreciate by about 95% between 1913 and 2008, then what will an almost instantaneous spike almost equal in magnitude to that of the preceding 95 years do in the near future?
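
To put the 95% figure in perspective, the arithmetic can be sketched as follows. This is a back-of-the-envelope illustration, not a forecast: the function names are invented for this example, and the only inputs are the 95% loss of purchasing power and the 1913 to 2008 span quoted above.

```python
def price_multiple(purchasing_power_loss):
    """Factor by which prices rose if money lost this fraction of its value."""
    return 1.0 / (1.0 - purchasing_power_loss)


def annualised_inflation(purchasing_power_loss, years):
    """Constant yearly inflation rate that compounds to the same total loss."""
    return price_multiple(purchasing_power_loss) ** (1.0 / years) - 1.0


# Inputs taken from the text: 95% depreciation over 1913-2008.
multiple = price_multiple(0.95)
rate = annualised_inflation(0.95, 2008 - 1913)

print(f"Prices rose about {multiple:.0f}-fold")
print(f"Equivalent steady inflation: {rate:.2%} per year")
```

A 95% loss of purchasing power corresponds to roughly a twentyfold rise in prices, or a little over 3% a year compounded across the whole period.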

Friday, January 23, 2009

Reading List: Roswell, Texas

- Smith, L. Neil, Rex F. May, Scott Bieser, and Jen Zach. Roswell, Texas. Round Rock, TX: Big Head Press, [2007] 2008. ISBN 978-0-9743814-5-9.

- I have previously mentioned this story and even posted a puzzle based upon it. This was based upon the online edition, which remains available for free. For me, reading anything, including a comic book (sorry—“graphic novel”), online a few pages a week doesn't count as reading worthy of inclusion in this list, so I deferred listing it until I had time to enjoy the trade paperback edition, which has been sitting on my shelf for several months after its June 2008 release. This rollicking, occasionally zany, alternative universe story is set in the libertarian Federated States of Texas, where, as in our own timeline, something distinctly odd happens on July 4th, 1947 on a ranch outside the town of Roswell. As rumours spread around the world, teams from the Federated States, the United States, the California Republic, the Franco-Mexican Empire, Nazi Britain, and others set out to discover the truth and exploit the information for their own benefit. Involved in the scheming and race to the goal are this universe's incarnations of Malcolm Little, Meir Kahane, Marion Morrison, Eliot Ness, T. E. Lawrence, Walt Disney, Irène Joliot-Curie, Karol Wojtyla, Gene Roddenberry, and Audie Murphy, among many others. We also encounter a most curious character from an out of the way place L. Neil Smith fans will recall fondly. The graphic format works very well with the artfully-constructed story. Be sure to scan each panel for little details—there are many, and easily missed if you focus only on the text. The only disappointment in this otherwise near-perfect entertainment is that readers of the online edition will be dismayed to discover that all of the beautiful colour applied by Jen Zach has been flattened out (albeit very well) into grey scale in the print edition. Due to the higher resolution of print, you can still make out things in the book edition which aren't discernible online, but it's a pity to lose the colour. 
The publisher has explained the economic reasons which compelled this decision, which make perfect sense. Should a “premium edition” come along, I'll be glad to part with US$40 for a full colour copy.

Thursday, January 22, 2009

Reading List: Damp Squid

- Butterfield, Jeremy. Damp Squid. Oxford: Oxford University Press, 2008. ISBN 978-0-19-923906-1.

- Dictionaries attempt to capture how language (or at least the words of which it is composed) is used, or in some cases should be used according to the compiler of the dictionary, and in rare examples, such as the monumental Oxford English Dictionary (OED), to trace the origin and history of the use of words over time. But dictionaries are no better than the source material upon which they are based, and even the OED, with its millions of quotations contributed by thousands of volunteer readers, can only sample a small fraction of the written language. Further, there is much more to language than the definitions of words: syntax, grammar, regional dialects and usage, changes due to the style of writing (formal, informal, scholarly, etc.), associations of words with one another, differences between spoken and written language, and evolution of all of these matters and more over time. Before the advent of computers and, more recently, the access to large volumes of machine-readable text afforded by the Internet, research into these aspects of linguistics was difficult, extraordinarily tedious, and its accuracy suspect due to the small sample sizes necessarily used in studies. Computer linguistics sets out to study how a language is actually used by collecting a large quantity of text (called a corpus), tagged with identifying information useful for the intended studies, and permitting measurement of the statistics of the content of the text. The first computerised corpus was created in 1961, containing the then-staggering number of one million words. (Note that since a corpus contains extracts of text, the word count refers to the total number of words, not the number of unique words—as we'll see shortly, a small number of words accounts for a large fraction of the text.) 
The preeminent research corpus today is the Oxford English Corpus which, in 2006, surpassed two billion words and is presently growing at the rate of 350 million words a year—ain't the Web grand, or what? This book, which is a pure delight, compelling page turner, and must-have for all fanatic “wordies”, is a light-hearted look at the state of the English language today: not what it should be, but what it is. Traditionalists and fussy prescriptivists (among whom I count myself) will be dismayed at the battles already lost: “miniscule” and “straight-laced” already outnumber “minuscule” and “strait-laced”, and many other barbarisms and clueless coinages are coming on strong. Less depressing and more fascinating are the empirical research on word frequency (Zipf's Law is much in evidence here, although it is never cited by name)—the ten most frequent words make up 25% of the corpus, and the top one hundred account for fully half of the text—word origins, mutation of words and terms, association of words with one another, idiomatic phrases, and the way context dictates the choice of words which most English speakers would find almost impossible to distinguish by definition alone. This amateur astronomer finds it heartening to discover that the most common noun modified by the adjective “naked” is “eye” (1398 times in the corpus; “body” is second at 1144 occurrences). If you've ever been baffled by the origin of the idiom “It's raining cats and dogs” in English, just imagine how puzzled the Welsh must be by “Bwrw hen wragedd a ffyn” (“It's raining old women and sticks”). The title? It's an example of an “eggcorn” (p. 58–59): a common word or phrase which mutates into a similar sounding one as speakers who can't puzzle out its original, now obscure, meaning try to make sense of it. Now that the safetyland culture has made most people unfamiliar with explosives, “damp squib” becomes “damp squid” (although, if you're a squid, it's not being damp that's a problem). 
Other eggcorns marching their way through the language are “baited breath”, “preying mantis”, and “slight of hand”.
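
The kind of word-frequency measurement described above can be sketched in a few lines. This is a toy illustration, not how the Oxford English Corpus is actually processed: the tokenising rule, the sample sentence, and the 100,000-word vocabulary used for the idealised Zipf comparison are all assumptions made for the example.

```python
from collections import Counter
import re


def top_k_coverage(text, k):
    """Fraction of all word tokens accounted for by the k most frequent words."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    counts = Counter(words)
    top = sum(n for _, n in counts.most_common(k))
    return top / len(words)


def zipf_coverage(k, vocabulary_size):
    """Same fraction under an idealised Zipf law, in which the word of
    rank r occurs with frequency proportional to 1/r."""
    def harmonic(n):
        return sum(1.0 / r for r in range(1, n + 1))
    return harmonic(k) / harmonic(vocabulary_size)


sample = "the cat and the dog and the bird saw the squid"
print(top_k_coverage(sample, 2))    # share taken by the two commonest words
print(zipf_coverage(10, 100_000))   # idealised share of the ten commonest words
```

With the assumed 100,000-word vocabulary, the idealised law puts the share of the ten commonest words in the same general range as the 25% figure quoted above, though real corpora only approximate the pure 1/r form.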

Wednesday, January 21, 2009

Welcome to America: Click to Enter!

I used to think Microsoft had retired the prize for the most absurd pop-up dialogue boxes, but one must never count out the U.S. government when it comes to scaling heights never previously imagined achievable. “If they can land a man on the Moon”…certainly they can make a dialogue box that puts to shame the private sector obfuscators of Redmond. Case in point: citizens of countries eligible for the U.S. “Visa Waiver Program” are able to travel to the U.S. without previously applying for a visa, just as citizens of most countries in Europe have been able to do for travel to other European countries for decades. But with the U.S., there's always another turn of the screw—most recently the “Electronic System for Travel Authorization” which requires citizens of “visa waiver” countries to provide intrusive personal information at least 72 hours before departure or be denied boarding on flights to that destination whence fewer and fewer people want to go in any case, given the ever-ratcheting pain of entering the country and routine harassment and humiliation of travellers on domestic flights. So visit that site: here's the pop-up your browser will display: “Welcome to America! Your rights are important to us. Press 1 to forfeit them and visit our country. Press 2 to retain them and stay at home. Para español…”

Saturday, January 17, 2009

Reading List: Dragon's Teeth. Vol. 2

- Sinclair, Upton. Dragon's Teeth. Vol. 2. Safety Harbor, FL: Simon Publications, [1942] 2001. ISBN 978-1-931313-15-5.

- This is the second half of the third volume in Upton Sinclair's grand-scale historical novel covering the years from 1913 through 1949. Please see my notes on the first half for details on the series and this novel. The second half, comprising books four through six of the original novel (this is a print on demand facsimile edition, in which each of the original novels is split into two parts due to constraints of the publisher), covers the years 1933 and 1934, as Hitler tightens his grip on Germany and persecution of the Jews begins in earnest. The playboy hero Lanny Budd finds himself in Germany trying to arrange the escape of Jewish relatives from the grasp of the Nazi tyranny, meets Goebbels, Göring, and eventually Hitler, and discovers the depth of the corruption and depravity of the Nazi regime, and then comes to experience it directly when he becomes caught up in the Night of the Long Knives. This book was published in January 1942, less than a month after Pearl Harbor. It is remarkable to read a book written in a time when the U.S. and Nazi Germany were at peace and the swastika flag flew from the German embassy in Washington which got the essence of the Nazis so absolutely correct (especially the corruption of the regime, which was overlooked by so many until Albert Speer's books decades later). This is very much a period piece, and enjoyable in giving a sense of how people saw the events of the 1930s not long after they happened. I'm not, however, inclined to slog on through the other novels in the saga—one suffices for me.

Thursday, January 15, 2009

The Hacker's Diet Online: Unicode Character Disaster

The first major disaster since the recent server update has struck The Hacker's Diet Online, causing non-ASCII Unicode characters (for example, accented letters; Greek, Cyrillic, and other alphabets; and Chinese and Japanese characters) appearing in comment fields of log forms and in other contexts (such as account information) to be garbled. In addition, users whose user names and/or passwords contained such characters may have had problems logging in. It turns out (based on a preliminary analysis, performed under the gun, which may be revised as I investigate further) that the Perl function decode_utf8 has changed its behaviour. Under Perl 5.8.5 on the old server, it did precisely what its name implies: decode a string containing byte codes in UTF-8 encoding into a Unicode string. Now, on Perl 5.8.8, it has become “smart” (in the Microsoft sense of the word) and decides whether or not to do what you invoked it to do based upon whether the string argument it was passed was tagged as having been in UTF-8 encoding. When processing arguments to CGI scripts on Web sites, there's a bit of a problem in handling encoding: normally you want to decode UTF-8 arguments, but in the case of file uploads, the decoding must be suppressed lest binary files be corrupted. Further, arguments passed via the QUERY_STRING from GET requests and from standard input for POST requests require different handling of encoding. Stir “smartness” on the part of a Perl function into this mess and you're asking for a disaster, which was duly delivered. I have applied a one-line patch to The Hacker's Diet Online which, based upon my testing so far, appears to fix the problem. Since there are so many forms in the application which accept Unicode characters and a variety of types of input (text fields, passwords, uploaded files), it will take some time to verify that everything is now working correctly. I'm pretty confident that the clamant problem of corruption of log comment fields is now corrected.
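
The encoding rule described above (decode text arguments exactly once, never touch uploaded files) can be illustrated with a small sketch. It is written in Python rather than Perl, it is not the actual one-line patch, and the function and field names are hypothetical; it only shows the general idea of making the decoding decision explicit instead of leaving it to a “smart” library function.

```python
def decode_form_fields(raw_fields, binary_fields=()):
    """Decode UTF-8 text fields exactly once; pass upload fields through as bytes.

    raw_fields: dict mapping field name -> bytes as received from the client.
    binary_fields: names of fields holding file uploads (hypothetical names).
    """
    decoded = {}
    for name, value in raw_fields.items():
        if name in binary_fields:
            decoded[name] = value                  # binary data: never decode
        else:
            decoded[name] = value.decode("utf-8")  # raises on malformed input
    return decoded


raw = {
    "comment": "Déjeuner léger".encode("utf-8"),
    "upload": b"\x89PNG\r\n\x1a\n",
}
fields = decode_form_fields(raw, binary_fields={"upload"})

# What garbling looks like when the decoding step is silently skipped and
# each UTF-8 byte is treated as a separate character (Latin-1):
garbled = raw["comment"].decode("latin-1")   # "DÃ©jeuner lÃ©ger"

print(len(fields["comment"]), len(garbled))
```

Each accented letter occupies two bytes in UTF-8, so the garbled string comes out longer than the correctly decoded one, which is the familiar symptom of a skipped decoding step.
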
Users who have had their comment fields wrecked due to this problem and do not wish to retype the affected comments should contact me via the feedback form, and I'll try to restore any comments which have been corrupted from the daily backup tapes of the server farm.

Tuesday, January 13, 2009

Reading List: Energiya-Buran

- Hendrickx, Bart and Bert Vis. Energiya-Buran. Chichester, UK: Springer Praxis, 2007. ISBN 978-0-387-69848-9.

- This authoritative history chronicles one of the most bizarre episodes of the Cold War. When the U.S. Space Shuttle program was launched in 1972, the Soviets, unlike the majority of journalists and space advocates in the West who were bamboozled by NASA's propaganda, couldn't make any sense of the economic justification for the program. They worked the numbers, and they just didn't work—the flight rates, cost per mission, and most of the other numbers were obviously not achievable. So, did the Soviets chuckle at this latest folly of the capitalist, imperialist aggressors and continue on their own time-proven path of mass-produced low-technology expendable boosters? Well, of course not! They figured that even if their wisest double-domed analysts were unable to discern the justification for the massive expenditures NASA had budgeted for the Shuttle, there must be some covert military reason for its existence to which they hadn't yet twigged, and hence they couldn't tolerate a shuttle gap and consequently had to build their own, however pointless it looked on the surface. And that's precisely what they did, as this book so thoroughly documents, with a detailed history, hundreds of pictures, and technical information which has only recently become available. Reasonable people can argue about the extent to which the Soviet shuttle was a copy of the American (and since the U.S. program started years before and placed much of its design data into the public domain, any wise designer would be foolish not to profit by using it), but what is not disputed is that (unlike the U.S. Shuttle) Energiya was a general purpose heavy-lift launcher which had the orbiter Buran as only one of its possible payloads and was one of the most magnificent engineering projects of the space programs of any nation, involving massive research and development, manufacturing, testing, integrated mission simulation, crew training, and flight testing programs. 
Indeed, Energiya-Buran was in many ways a better-conceived program for space access than the U.S. Shuttle program: it integrated a heavy payload cargo launcher with the shuttle program, never envisioned replacing less costly expendable boosters with the shuttle, and forecast a development program which would encompass full reusability of boosters and core stages and both unmanned cargo and manned crew changeout missions to Soviet space stations. The program came to a simultaneously triumphant and tragic end: the Energiya booster and the Energiya-Buran shuttle system performed flawless missions (the first Energiya launch failed to put its payload into orbit, but this was due to a software error in the payload: the launcher performed nominally from ignition through payload separation). In the one and only flight of Buran (launch and landing video, other launch views) the orbiter was placed into its intended orbit and landed on the cosmodrome runway at precisely the expected time. And then, in the best tradition not only of the Communist Party of the Soviet Union but of the British Labour Party of the 1970s, this singular success was rewarded by cancellation of the entire program. As an engineer, I have almost unlimited admiration for my ex-Soviet and Russian colleagues who did such masterful work and who will doubtless advance technology in the future to the benefit of us all. We should celebrate the achievement of those who created this magnificent space transportation system, while encouraging those inspired by it to open the high frontier to all of those who exulted in its success.

Sunday, January 11, 2009

Moonrise at the Lignières Corral

No, you're not dreaming: two Fourmilog postings in one day! Had to test the image uploading and thumbnail generation in Movable Type 4.23. Don't worry: we aren't going all Instapundit here…heh.

Fourmilab Server Update

At 22:30 UTC on 2009-01-10 I put the updated server configuration into production at Fourmilab. The load balancer was directed to route all public requests to the machine which was previously the backup server, and has now been designated the production server. This machine is running CentOS 5.2 (GNU/Linux 2.6.18-92.1.22.el5PAE) and all publicly-accessible server programs and CGI applications have been rebuilt with current compilers and libraries. I will be watching the error logs like a hawk for the next few days: it's amazing how many long-obsolete requests pop up when you supplant some file that's been knocking around the Web for a decade or so in links and message boards. This is the first message posted to Fourmilog from the new server with Movable Type 4.23 and the MySQL backing store.

Tuesday, January 6, 2009

Frosted Forest

Monday, January 5, 2009

Reading List: The Black Swan

- Taleb, Nassim Nicholas. The Black Swan. New York: Random House, 2007. ISBN 978-1-4000-6351-2.

- If you are interested in financial markets, investing, the philosophy of science, modelling of socioeconomic systems, theories of history and historicism, or the rôle of randomness and contingency in the unfolding of events, this is a must-read book. The author largely avoids mathematics (except in the end notes) and makes his case in quirky and often acerbic prose (there's something about the French that really gets his goat) which works effectively. The essential message of the book, explained by example in a wide variety of contexts (and I'll be rather more mathematical here in the interest of concision), is that while many (but certainly not all) natural phenomena can be well modelled by a Gaussian (“bell curve”) distribution, phenomena in human society (for example, the distribution of wealth, population of cities, book sales by authors, casualties in wars, performance of stocks, profitability of companies, frequency of words in language, etc.) are best described by scale-invariant power law distributions. While Gaussian processes converge rapidly upon a mean and standard deviation and rare outliers have little impact upon these measures, in a power law distribution the outliers dominate. Consider this example. Suppose you wish to determine the mean height of adult males in the United States. If you go out and pick 1000 men at random and measure their height, then compute the average, absent sampling bias (for example, picking them from among college basketball players), you'll obtain a figure which is very close to that you'd get if you included the entire male population of the country. If you replaced one of your sample of 1000 with the tallest man in the country, or with the shortest, his inclusion would have a negligible effect upon the average, as the difference from the mean of the other 999 would be divided by 1000 when computing the average. Now repeat the experiment, but try instead to compute mean net worth. 
Once again, pick 1000 men at random, compute the net worth of each, and average the numbers. Then, replace one of the 1000 by Bill Gates. Suddenly Bill Gates's net worth dwarfs that of the other 999 (unless one of them randomly happened to be Warren Buffett, say)—the one single outlier dominates the result of the entire sample. Power laws are everywhere in the human experience (heck, I even found one in AOL search queries), and yet so-called “social scientists” (Thomas Sowell once observed that almost any word is devalued by preceding it with “social”) blithely assume that the Gaussian distribution can be used to model the variability of the things they measure, and that extrapolations from past experience are predictive of the future. The entry of many people trained in physics and mathematics into the field of financial analysis has swelled the ranks of those who naïvely assume human action behaves like inanimate physical systems. The problem with a power law is that as long as you haven't yet seen the very rare yet stupendously significant outlier, it looks pretty much like a Gaussian, and so your model based upon that (false) assumption works pretty well—until it doesn't. The author calls these unimagined and unmodelled rare events “Black Swans”—you can see a hundred, a thousand, a million white swans and consider each as confirmation of your model that “all swans are white”, but it only takes a single black swan to falsify your model, regardless of how much data you've amassed and how long it has correctly predicted things before it utterly failed. Moving from ornithology to finance, one of the most common causes of financial calamities in the last few decades has been the appearance of Black Swans, wrecking finely crafted systems built on the assumption of Gaussian behaviour and extrapolation from the past. 
Much of the current calamity in hedge funds and financial derivatives comes directly from strategies for “making pennies by risking dollars” which never took into account the possibility of the outlier which would wipe out the capital at risk (not to mention that of the lenders to these highly leveraged players who thought they'd quantified and thus tamed the dire risks they were taking). The Black Swan need not be a destructive bird: for those who truly understand it, it can point the way to investment success. The original business concept of Autodesk was a bet on a Black Swan: I didn't have any confidence in our ability to predict which product would be a success in the early PC market, but I was pretty sure that if we fielded five products or so, one of them would be a hit on which we could concentrate after the market told us which was the winner. A venture capital fund does the same thing: because the upside of a success can be vastly larger than what you lose on a dud, you can win, and win big, while writing off 90% of all of the ventures you back. Investors can fashion a similar strategy using options and option-equivalent investments (for example, resource stocks with a high cost of production), diversifying a small part of their portfolio across a number of extremely high risk investments with unbounded upside while keeping the bulk in instruments (for example sovereign debt) as immune as possible to Black Swans. There is much more to this book than the matters upon which I have chosen to expound here. What you need to do is lay your hands on this book, read it cover to cover, think it over for a while, then read it again—it is so well written and entertaining that this will be a joy, not a chore. I find it beyond charming that this book was published by Random House.
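
The height versus net worth thought experiment above is easy to try numerically. The following is a minimal sketch under stated assumptions: the Gaussian parameters for height, the Pareto scale and exponent for net worth, and the two outlier values are illustrative guesses chosen for this example, not figures from the book.

```python
import random


def mean(values):
    return sum(values) / len(values)


def shift_from_one_outlier(sample, outlier):
    """Relative change in the mean when one sample member is swapped for an outlier."""
    before = mean(sample)
    after = mean(sample[1:] + [outlier])
    return abs(after - before) / before


rng = random.Random(2009)  # fixed seed so the run is repeatable

# Heights in cm: roughly Gaussian (mean and spread are illustrative guesses).
heights = [rng.gauss(176.0, 7.0) for _ in range(1000)]
# Net worth in dollars: Pareto (power law) tail; scale and exponent are made up.
net_worths = [50_000 * rng.paretovariate(1.5) for _ in range(1000)]

height_shift = shift_from_one_outlier(heights, 272.0)     # an extraordinarily tall man
wealth_shift = shift_from_one_outlier(net_worths, 50e9)   # a Gates-scale fortune

print(f"Mean height changes by {height_shift:.2%}")
print(f"Mean net worth changes by {wealth_shift:.0%}")
```

Swapping in the tall man moves the mean height by a small fraction of a percent, while the single large fortune multiplies the mean net worth many times over, which is the sense in which the outlier dominates the sample.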

Friday, January 2, 2009

Home Cinema, 1930-Style

This week's new pages for the 1930 Allied Radio Catalogue bring the total pages posted up to 79, and include this home cinema system which was, I dare say, a tad before its time and launched under particularly inauspicious economic circumstances. The cost (less “[s]peaker and tubes for amplifier”) of US$132.50 is equivalent to US$1685 in depreciated 2008 Yankee dollars. And look at the cost of the “content”! A 200 foot subject which would have had a running time of about six minutes cost US$13.20 in gold dollars, or about US$168 in contemporary fiat currency. But, hey, there weren't any region codes or DRM!

Thursday, January 1, 2009

It's International Write Like a Moron Day!

While I was hoping to write something in commemoration of International Write Like a Moron Day, I humbly concede my incapacity to scale such heights as those reached by this sign, displayed at a rally in New York City on December 28th, 2008.