Fifty Years of Programming

and Moore's Law

by John Walker

November 4th, 2017

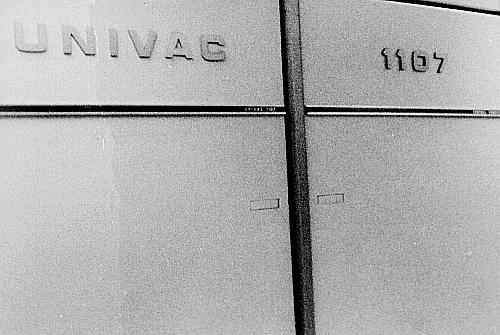

As best as I can determine, it was around fifty years

ago, within a week or so, that I wrote my first computer program,

directing my life's trajectory onto the slippery slope which

ended in my present ignominy. This provides an opportunity for

reflection on how computing has changed in the past half century, which

is arguably a technological transition unprecedented in the human

experience. But more about that later. First, let me say a few words

about the computer on which I ran that program, the Univac 1107 at Case

Institute of Technology, in the fall of 1967.

As best as I can determine, it was around fifty years

ago, within a week or so, that I wrote my first computer program,

directing my life's trajectory onto the slippery slope which

ended in my present ignominy. This provides an opportunity for

reflection on how computing has changed in the past half century, which

is arguably a technological transition unprecedented in the human

experience. But more about that later. First, let me say a few words

about the computer on which I ran that program, the Univac 1107 at Case

Institute of Technology, in the fall of 1967.

It Was Fifty Years Ago Today…

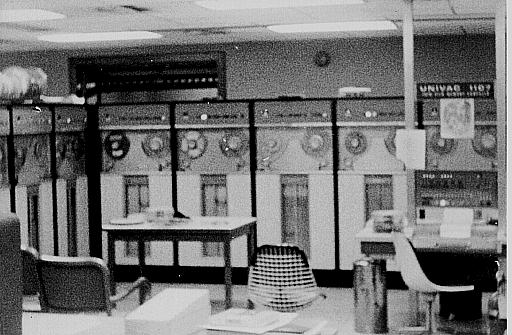

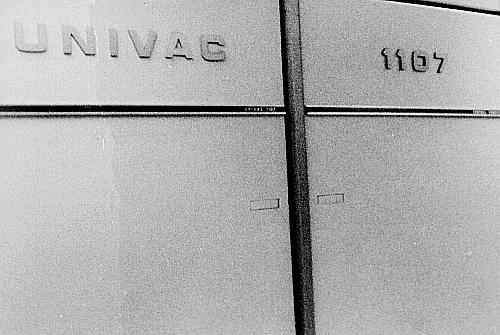

In the 1960s, the Univac® 1107 provided the main

computing facility for Case Institute of Technology in Cleveland Ohio

in the United States. Operated by the Andrew R. Jennings Computing

Center, the “Seven” enticed a generation into the world of

computing and, with the innovative “fast turnaround batch,”

“open shop” access pioneered at Case, provided a standard

of service to a large community of users almost unheard of at the time.

The Case 1107 was immortalised by being included by Case alumnus Donald

Knuth in the calculation of the decimal representation of the

MIX

1009 computer in

The Art of Computer Programming.

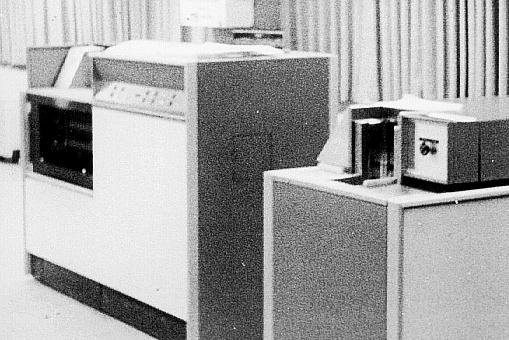

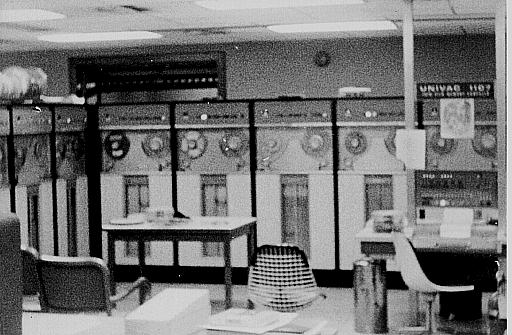

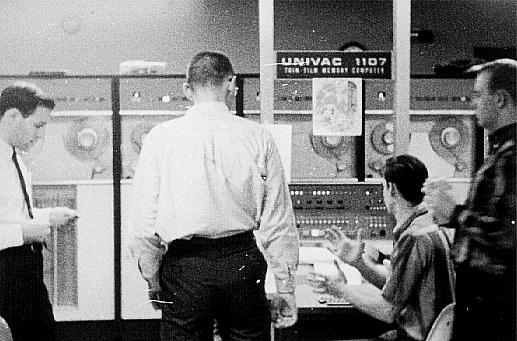

In the view above, we see the system console at the right, in front

of the bank of UNISERVO™ II-A tape drives. The rounded cabinet at the

very left of the frame is part of the TRW 530 “Logram

computer”, which was used primarily to convert tapes between

UNISERVO II-A 200 BPI format and the 800 BPI IBM-compatible format used

by the UNISERVO VIII-C drives on the 1108 at Chi Corporation.

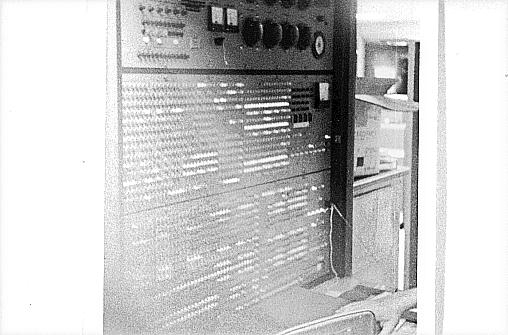

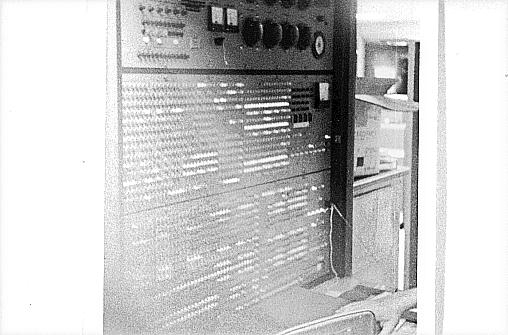

The Univac 1107 was massive, but quiet. All its main cabinets went

from floor to ceiling, with air conditioned air injected under the

raised floor and exit air exhausted over the dropped ceiling. Most of

the central computer cabinets were walk-in. This one contained the main

processor logic and the 65,536 36-bit words (about 256 K bytes) of core

memory. Memory access time was 8 microseconds per word, but

interleaving of instruction and data accesses allowed average access

time to approach 4 microseconds in ideal conditions.

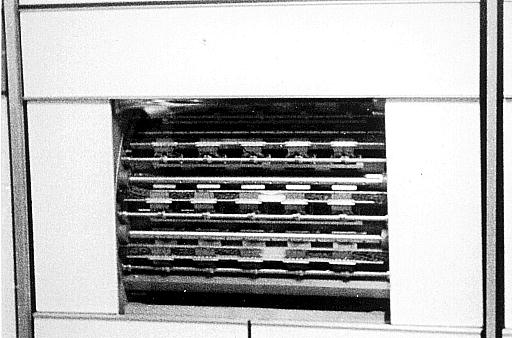

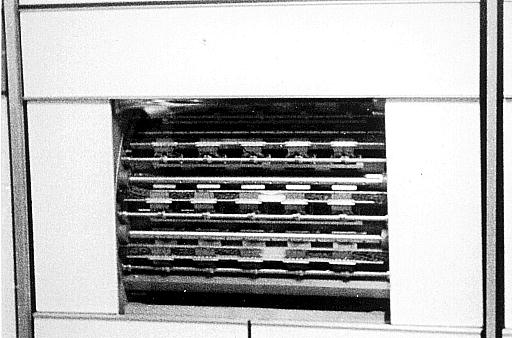

Mass storage for the single job run at a time, and for queueing

waiting jobs and printer output for those already complete, was

provided by the two FH-880 magnetic drums, one of which is shown

above, which together provided about 6 megabytes of random

access storage.

The central processor was a 36 bit architecture, capable

of executing most simple arithmetic instructions in one 4 microsecond

cycle time. Multiplication of two 36-bit integers took 12 microseconds,

and division of a 72-bit dividend by a 36-bit divisor 31.3

microseconds. The processor performed 36-bit single precision floating

point arithmetic in hardware, but did not implement double precision

floating point.

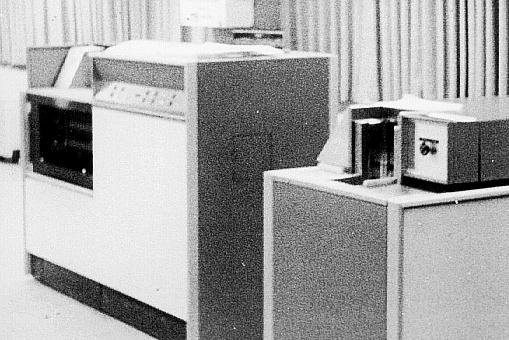

The high speed printer and card reader were connected directly to

1107 I/O channels. The printer was a rotating drum and hammer device

which printed 600 lines per minute. The card reader read 600 cards per

minute with two sets of brushes to read and verify the card; if they

did not agree, reading would halt. Cards were grasped by a

vacuum-equipped feed arm and fed into the mechanism.

The “card eater” was notorious for jamming, sometimes

demolishing student card decks which had been fed through numerous

times in the process of debugging a program. Note the prominent

Emergency Stop button to the right of the throat which ingested

the card deck. Fortunately, students in the “open shop”

environment in which the 1107 operated quickly learned how to

field-strip the card reader and remove the remains of their programming

projects when this happened.

The high speed printer

was derived directly from a printer used on the Univac I. Its hammers

were driven by a bank of

thyratron

(gas-filled power switching) tubes which were visible when the back of

the printer was removed. When the drum advanced so the desired

character was approaching the hammer, the tube would fire and whack the

paper against the drum from the hammer bank behind the page. The

printer incorporated a feature known as the “all out

detector” which, if one or more hammers failed to fire on a given

line, leaving their corresponding drive tubes not “out”

(you could see the gas in them glowing until they fired), halted the

printer, permitting the operator to examine the printer controller,

note the missing characters, open the printer carriage, write them into

the columns they belonged with a pencil or pen, then restart the

printer. The pace of life was so much slower then….

The high speed printer

was derived directly from a printer used on the Univac I. Its hammers

were driven by a bank of

thyratron

(gas-filled power switching) tubes which were visible when the back of

the printer was removed. When the drum advanced so the desired

character was approaching the hammer, the tube would fire and whack the

paper against the drum from the hammer bank behind the page. The

printer incorporated a feature known as the “all out

detector” which, if one or more hammers failed to fire on a given

line, leaving their corresponding drive tubes not “out”

(you could see the gas in them glowing until they fired), halted the

printer, permitting the operator to examine the printer controller,

note the missing characters, open the printer carriage, write them into

the columns they belonged with a pencil or pen, then restart the

printer. The pace of life was so much slower then….

Also memorable about the high speed printer was that, due to its

both consuming and storing relatively large amounts of energy, along

with a supply of paper, its front panel was equipped with a Fire

indicator light and, directly below, an Extinguish button. I was

a very different person in those distant days of yore, and never pushed

Extinguish to see what, if anything, it did. However, one Friday

night while the system programmers and system programmer wannabes

(myself falling into the latter category) were watching Star

Trek in the hardware engineers' room (first airing of

The Original Series, you understand!) the Extinguish button

lighted up spontaneously without any obvious effect; a few switch flips

turned it off.

All jobs (“runs”) were initiated by reading in decks of

punch

cards from a card reader. Above is the main keypunch room where

most users prepared their programs. This room was open to anybody, 24

hours per day, and was equipped with IBM model 026 manual card punches.

The individual at the keypunch is unidentified. Visible through the

window at the end of the keypunch room is the computer room, with the

Univac 1004 card reader/punch and printer unit in the foreground.

Here's a view of the 1004, with the keypunch room in the background.

This machine read 300 cards per minute and printed 300 lines per

minute. Though slower than the high speed reader and printer, it was

more reliable and its output generally more legible, so many preferred

it. Its card punch (at the left) was an awesome machine that punched an

entire 80 column IBM card all at once. A huge electric motor

powered the beast, and the GRRRrumble and then

whump, whump, whump when it came to life brings back

memories even today. The reader/printer unit is to the right. Punch

cards were introduced into a hopper to the right of the printer and

followed a tortuous path before emerging vertically in the bin visible

at the right of the unit. This bin was slanted upward slightly and a

weight with wheels on the bottom held the cards in place as the deck

filled the exit bin. If you happened to feed a deck into the 1004 when

this weight wasn't in place, the cards would spray out of the reader

through the air every which way, completely scrambling the contents.

This was extremely amusing to watch, as long as the cards weren't

yours.

The 1004 jammed less frequently than the high speed reader, but when

it did jam it could do so heroically. The high speed reader never

destroyed more than a single card at a time, but the 1004 could jam in

ways not detected by the machinery, which continued to feed more and

more cards to their certain doom, until their torn remains sufficiently

clogged the works to bring things to a halt. As I recall, the record

for the number of crumpled and shredded cards removed from a single

1004 jam was 17, but I believe that was a 1004-II which ran twice as

fast and was even more prone to jamming. Still, it was not unusual to

lose four or five cards in a 1004 jam.

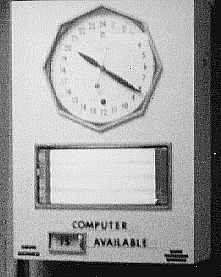

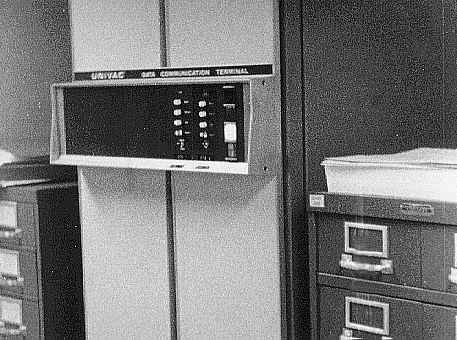

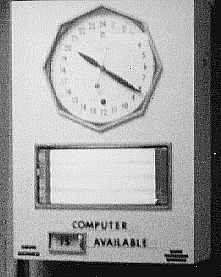

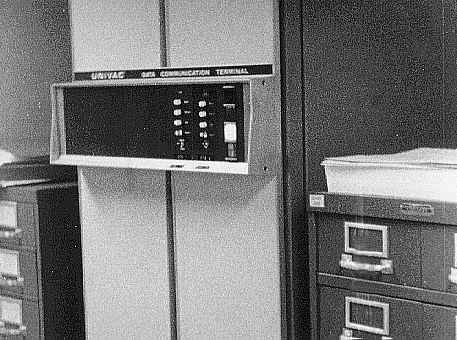

The Case 1107 was one of the first computers on Earth to provide

access from remote locations both at other places on the campus and as

far afield as Erie, Pennsylvania. This is the 2400 baud leased line

modem used for one of those connections. Now, if you have a modem, you

need a port to hook it up to. Here's a pair of 2400 baud serial ports,

1960s style.

This floor-to-ceiling box (the file drawers for punch cards on either

side provide a sense of scale) was called the CTS—short for

“Communication Terminal, Synchronous”. It could contain

either one or two synchronous serial ports, each on a dedicated I/O

channel. This CTS has two ports; the vertical rows of round lights show

input and output activity on each port. Not only did the CTS consume an

entire I/O channel for each port (the 1107 only had 16

channels—you could put 16 tape drives on a channel

or…one serial port), it was a prodigious memory hog.

Input and output were stored with a single six bit character in each 36

bit word. If you transmitted and received small blocks of 1024

characters, and ran half-duplex so you didn't need separate input and

output buffers, the data buffer for a single remote terminal would

still consume 1/64th of the entire 65536 word memory of the 1107.

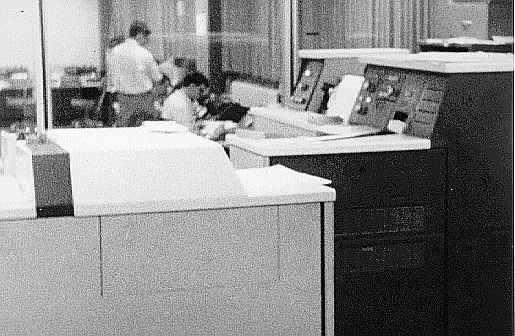

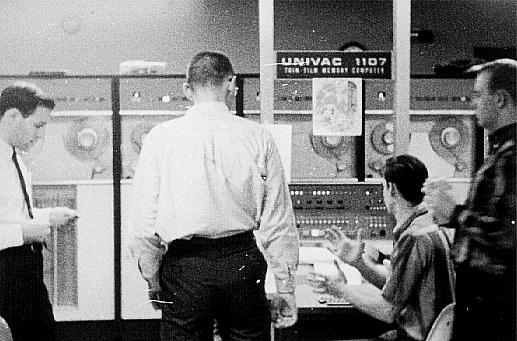

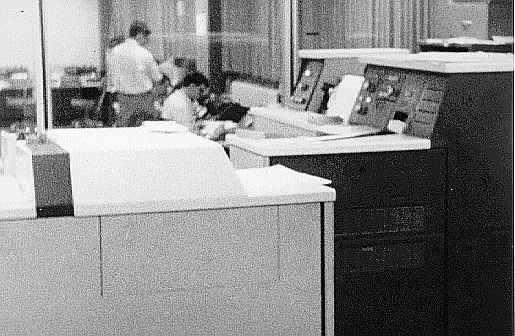

Day and night, the 1107 attracted individuals fired by the sense of

wonder of what such a machine and its descendents, properly programmed,

might do during their lifetimes. In this picture, from left to right,

are John Richards, Ken Walter, John Langner, and Gene Hughes. John

Walker snapped this candid picture.

The 1107 was installed on the first floor of the Quail Building,

shown above in a photo taken around 1966 by Bill Patterson. This is the

same building which previously housed Case's Univac I and Burroughs 220

computers. The keypunch room was on the near end of the left face of

the building in this view, and the 1107 occupied the far half of the

ground floor.

A Sense of Scale

The Univac 1107 I used in 1967 (which was, at the time, reaching the

end of its service life, and would be replaced the next year by its

successor, the Univac 1108) had a fundamental instruction clock rate of

250 kHz: it could execute simple instructions such as add, subtract,

and jump in around 4 microseconds, or 250,000 such instructions per

second. By the standards of 1967, this was pretty impressive: heck,

it's a lot faster than I can do such things, and it was

sufficient to meet the need for research and instructional computing

for a university with around 3,000 students. Let's look back at it from

the perspective of today's computers.

I am writing this on a modest laptop whose processor runs at 3 GHz:

three billion instructions per second. Simply based upon the

clock rate, my current machine, fifty years after I ran my first

program, is twelve thousand times faster than the first computer I

used. But that isn't the whole story. The Univac 1107 had a single

processor, made of discrete transistors on circuit boards in a cabinet

the size of a kitchen. My laptop has eight processors, able to

all run in parallel, and if I manage to keep them all busy at the same

time, I have 96,000 times the computing power at my fingertips.

Further, the instructions are more powerful: the 1107 was limited to 36

bit integer and floating point computations: more precision required

costly software multiple precision, while my laptop has direct hardware

support for 64 bit quantities. This is probably worth another factor of

between two and four for applications such as graphics, multimedia, and

scientific computation.

I am writing this on a modest laptop whose processor runs at 3 GHz:

three billion instructions per second. Simply based upon the

clock rate, my current machine, fifty years after I ran my first

program, is twelve thousand times faster than the first computer I

used. But that isn't the whole story. The Univac 1107 had a single

processor, made of discrete transistors on circuit boards in a cabinet

the size of a kitchen. My laptop has eight processors, able to

all run in parallel, and if I manage to keep them all busy at the same

time, I have 96,000 times the computing power at my fingertips.

Further, the instructions are more powerful: the 1107 was limited to 36

bit integer and floating point computations: more precision required

costly software multiple precision, while my laptop has direct hardware

support for 64 bit quantities. This is probably worth another factor of

between two and four for applications such as graphics, multimedia, and

scientific computation.

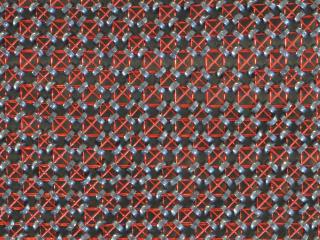

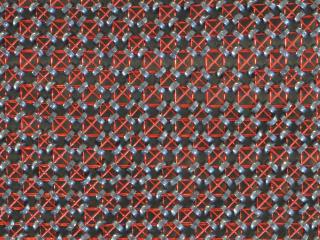

What about memory (or, as I prefer,

storage)?

You can't do all that much with computing power if you don't have the

space to store the data you're crunching. In 1967, most programming was

a constant battle to work around the constraints of limited storage.

Every bit in a core memory plane had to be wired by a human, peering

through a magnifying glass, threading almost invisible wires through

tiny ferrite toroidal cores—no wonder these memory devices

cost

a fortune. The Univac 1107 had 65536 words (“64K”) of

36 bit core memory or, roughly converting into present-day terminology,

256 kilobytes of RAM (random-access memory). And my laptop in 2017?

Well, it has 64 gigabytes of RAM. Let me write these out with all of

the digits to make this clear. The Univac 1107 in 1967: 256,000 bytes;

my laptop in 2017: 64,000,000,000 bytes. That's 250,000 times as much

memory. Imagine how many cat videos I can store!

What about memory (or, as I prefer,

storage)?

You can't do all that much with computing power if you don't have the

space to store the data you're crunching. In 1967, most programming was

a constant battle to work around the constraints of limited storage.

Every bit in a core memory plane had to be wired by a human, peering

through a magnifying glass, threading almost invisible wires through

tiny ferrite toroidal cores—no wonder these memory devices

cost

a fortune. The Univac 1107 had 65536 words (“64K”) of

36 bit core memory or, roughly converting into present-day terminology,

256 kilobytes of RAM (random-access memory). And my laptop in 2017?

Well, it has 64 gigabytes of RAM. Let me write these out with all of

the digits to make this clear. The Univac 1107 in 1967: 256,000 bytes;

my laptop in 2017: 64,000,000,000 bytes. That's 250,000 times as much

memory. Imagine how many cat videos I can store!

Now, let's look at what we used to call mass storage, although

by present standards it was laughable. The Univac 1107's two

FH-880 magnetic drums provided a total of around 6 megabytes of

storage, which was mostly used to buffer input and output to the

computer and provide scratch storage to programs. My laptop has

three solid state drives with a total of three

terabytes (trillions of bytes) of storage:

500,000 times that of the 1107 (in fact, the laptop has so much

storage I've opted to mirror all of my data on two drives,

halving the available storage in the interest of redundancy

should one drive fail). And my laptop is a few years old: today

I can buy a six terabyte hard drive at the supermarket.

Floating Point Benchmark

All of these big numbers—megas, gigas, and teras—tend to

make one's eyes glaze over. Let me provide a personal perspective on

the revolution in computing power during my career. Ever since the

1970s, my strategy has been to identify applications which, in order to

be useful, required more computing power than was presently available

to its potential customers, and then begin development with the goal

of, when the product was ready to bring to market, the exponential

growth in computing power at constant cost would make machines

available which could run it. This was the central strategy of

Autodesk,

Inc., the company I co-founded in 1982, and whose first product,

AutoCAD, only came into its own with the introduction of the

IBM

PC/AT and other

80286

machines in 1984. In order to keep track of the evolution of computer

power, in 1980, I created a

floating

point benchmark which measures the performance of various computers

on a scientific computation task which was a model for those I intended

to bring to market. A

“benchmark”,

in computing, is a program, usually simple and easy to adapt to various

computing environments, which models the performance of those systems

on more complex tasks you're interested in running. My benchmark, based

upon

ray

tracing to analyse the performance of optical systems, ended up

being uncannily accurate in predicting how fast a given computer and

software environment would run AutoCAD. If you were interested in word

processing, crunching large databases, or other applications, its

results weren't useful, but as a model for scientific and engineering

computation, it was

unreasonably

effective.

I first ran the benchmark in 1984 on an IBM PC/AT. Now, bear in

mind, this machine was more than three times faster than the original

IBM PC on which we had launched AutoCAD in 1982. Running the benchmark

for the standard of 1000 iterations, it ran in 3290 seconds in

Microsoft BASICA and 2132 seconds in the C language in which we

implemented AutoCAD. Note that there are 3600 seconds in an hour. Not

long after, Apple announced the Macintosh, and their Macintosh Plus ran

the benchmark in 1598 seconds, less than half the original Microsoft

figure. Amusingly, my

Marinchip

machine, first delivered in 1978, beat the much-vaunted Macintosh at

1582 seconds.

Then things really began to take off. Microsoft optimised their

BASIC, and QuickBASIC cut the run time to 404 seconds. The introduction

of the 80287

floating point coprocessor allowed a C compiler to complete the

benchmark in 165 seconds. Workstation machines and increasingly

optimised personal computer hardware and software contended throughout

the 1980s, and by the end of the decade the

Intel

80486 was running the benchmark in 1.56 seconds—more than two

thousand times faster than in 1984. But, notwithstanding the prophets

of stagnation who said, “surely, this must end”, the

exponential curve continued to climb to the sky, and before long the

one second barrier had been breached by the

RISC

processors of the 1990s, and then even those marks were dwarfed by the

runaway increase in clock rates in the 2000s. My current laptop, which

is far from the highest performance machine available today, runs the

benchmark in 0.00862 seconds. What used to take almost an hour in 1984

now can be completed in less than a hundredth of a second: this is an

increase of speed of a factor of 381,671 times, and the laptop which

delivers this performance costs a fraction, in constant dollars, of the

personal computer in 1984.

But wait, there's more. Through most of this history, computers had

only a single processor, or computing element. They processed the tasks

they were assigned one at a time. They may have flitted from one to

another to provide the illusion of concurrency, but that didn't get

things done any faster than if they'd done them in order. In the 2010s,

it became more difficult to increase the clock rate (fundamental speed)

of microprocessors, but the ability to put more and more transistors on

a silicon chip continued to increase. As a result, we began to see the

emergence of processors with multiple “cores”: separate

processors on a single chip, which could simultaneously execute

different tasks independently. Now, you could watch a cat video, build

your killer app, and produce your next YouTube hit simultaneously on

one machine because different processor cores were working on these

jobs at the same time. Fourmilab's main in-house server has 64

processor cores, all running at 3 GHz. If I get them all running at the

same time on my floating point benchmark, it completes in (normalised

to the original execution time) 0.00027 seconds, or 12,115,113 times

faster than the first machine to run the benchmark in 1984: a factor of

more than twelve million in thirty-three years.

To trot out a tired analogy, had automotive technology advanced at

the same rate as computing, the successor to the 1972 red Volkswagen

bus I drove in 1984 would be able to cruise at one billion miles per

hour (one and a half times the speed of light), get 200 million miles per

gallon (about the diameter of the Earth's orbit around the Sun) and, if

it used any Microsoft products, explode randomly every few days,

killing everybody on board.

Moore's Law

What's going on here? In 1965,

Gordon

Moore, co-founder and director of research and development at

Fairchild Semiconductor, and later co-founder of Intel, forecast that,

over the next ten years, the number of components it would be possible

to cram onto a silicon integrated circuit (chip) of constant size and

at constant cost, could be expected to roughly double every year. He

wrote, in an editorial in Electronics magazine,

The complexity for minimum component costs has increased at a rate of

roughly a factor of two per year. Certainly over the short term this

rate can be expected to continue, if not to increase. Over the longer

term, the rate of increase is a bit more uncertain, although there is

no reason to believe it will not remain nearly constant for at least 10

years.

In 1975, Moore revisited his prediction, which so far had been borne

out by progress in integrated circuit design and fabrication, and

further predicted that complexity at constant cost would continue to

double until around 1980, after which it was expected to slow, but

continue to double every two years thereafter. He placed no upper bound

on this forecast, although obviously there are ultimate limits: the

components fabricated on integrated circuits are composed of atoms, and

atoms have a finite size. At some point, as you make things smaller and

smaller, you'll reach a scale where device geometries approach the

atomic scale and there are just aren't enough atoms to fabricate the

structures you're trying to make (and, in addition, quantum effects

become significant and change how electrons behave). But those limits

were far in the future when Moore made his forecasts, and they're still

far away from our present technology. Shortly after Moore's revised

1975 forecast, Caltech professor

Carver

Mead coined the term

“Moore's

law” for this anticipated compounded exponential growth in

transistor density in integrated circuits.

Now, everybody understood at the time that “Moore's law”

was not a law of nature like Newton's laws of motion or gravitation,

but an observation about technological progress and that there was no

apparent law of physics which prevented the increase of component

density from continuing to grow exponentially over a period spanning

decades. For this to actually happen, however, engineers would have to

become ever more clever at designing smaller devices, figuring out how

to fabricate them on silicon at geometries which would approach and

then decrease below the wavelength of visible light, and fund the

research and development required to figure out how to do all of this.

And this presupposed that there would be applications and markets for

these increasingly powerful and sophisticated devices which would

generate the sales needed to fund their development. From the

perspective of the 1970s, it was entirely possible that sometime in the

1980s the market would decide that computers were fast enough for

everything people wanted to do with them, that after every office

desktop had a computer there was no further mass market that sought

more computing power, and consequently the investment required to keep

Moore's law going wouldn't be forthcoming. In the early 1980s, I bet my

career that this wouldn't happen. And it didn't. What did happen?

This….

Seldom in the human

experience has compounded exponential growth such as this over a period

of decades been experienced. Moore's forecast, originally made more

than half a century ago (two years before I wrote my first computer

program) has been proved to be almost exactly precise over time. Note

that the chart above is on a

semi-logarithmic

scale: the time scale at the bottom is linear, but the transistor

count scale at the left is logarithmic: each equal interval represents

a power of ten in the number of transistors per chip.

“All right”, you ask, “every two years or so we can

shoehorn twice as many transistors onto a chip at around the same

price. But what does that mean?” It is central to the

technological revolution through which we're living. For computer

memory it's pretty simple to understand: for about the same money,

every two years you can buy a digital camera memory card, USB drive, or

other storage device that holds twice as much data as the previous

generation. Over time, this compounded growth is flabbergasting. I

remember when I thought a 48 megabyte compact flash memory card for my

digital camera was capacious. The most recent card I bought is a much

smaller secure digital card with a capacity of 256 gigabytes: more than

five thousand times greater. This same growth applies to the main

memory in our computers and mobile gadgets:

my

first personal computer in 1976 had a RAM capacity of 256 bytes. As

I noted above, my current machine's capacity is 64 gigabytes: two

hundred and fifty million times larger.

But when you make

transistors smaller, they don't just shrink: the

Dennard

scaling theorem kicks in. It's a win-win-win-win proposition:

not only can you put more transistors on the chip, allowing more

storage and increased complexity in computer processors, each

transistor can switch faster and uses less power for each switch, thus

dissipating less heat. (This is pretty easy to understand: as the size

of the transistor decreases there are fewer electrons which have to

flow through it when it switches, so it takes less time for them to

flow in and out, and they require less supply current and release less

heat with each switch—this is oversimplified [I don't want to get

into device capacitance here], but it should be sufficient to explain

the idea.) So, you don't just have more memory: processors can be more

complicated, adding instructions for things like floating point,

graphics, and multimedia operations, adding computing cores to allow

multiple tasks to run simultaneously, and employing a multitude of

tricks, clean and dirty, to speed up their operation, all without

paying a cost in higher power consumption or waste heat generation.

Again, let me put some numbers on this. That first personal computer in

1976 ran at a clock rate of 0.5 MHz, executing an around 500,000

instructions per second. My current laptop has a clock rate of 3 GHz,

running around 3,000,000,000 instructions per second on each of its 8

processor cores, for a total of 24,000,000,000 instructions per second

(if I can keep them all busy, which is not the way to bet). Thus, the

laptop is 48,000 times faster than my first machine.

But when you make

transistors smaller, they don't just shrink: the

Dennard

scaling theorem kicks in. It's a win-win-win-win proposition:

not only can you put more transistors on the chip, allowing more

storage and increased complexity in computer processors, each

transistor can switch faster and uses less power for each switch, thus

dissipating less heat. (This is pretty easy to understand: as the size

of the transistor decreases there are fewer electrons which have to

flow through it when it switches, so it takes less time for them to

flow in and out, and they require less supply current and release less

heat with each switch—this is oversimplified [I don't want to get

into device capacitance here], but it should be sufficient to explain

the idea.) So, you don't just have more memory: processors can be more

complicated, adding instructions for things like floating point,

graphics, and multimedia operations, adding computing cores to allow

multiple tasks to run simultaneously, and employing a multitude of

tricks, clean and dirty, to speed up their operation, all without

paying a cost in higher power consumption or waste heat generation.

Again, let me put some numbers on this. That first personal computer in

1976 ran at a clock rate of 0.5 MHz, executing an around 500,000

instructions per second. My current laptop has a clock rate of 3 GHz,

running around 3,000,000,000 instructions per second on each of its 8

processor cores, for a total of 24,000,000,000 instructions per second

(if I can keep them all busy, which is not the way to bet). Thus, the

laptop is 48,000 times faster than my first machine.

In short, the number of transistors we can put on a chip is a

remarkably effective proxy for the computing power available at a

constant cost. And as that continues to double every two years, it

changes things, profoundly.

The Roaring Twenties

Where's it all going? We have experienced a spectacular period of

exponential growth over the last fifty years. In fact, I cannot think

of a single precedent for such a prolonged period of smooth progress in

a technology. Usually, technologies advance in a herky-jerky fashion:

sitting on a plateau for a while, then rushing ahead when a

technological innovation occurs, and then once again stagnating until

the next big idea. For example, consider aviation. The first

Wright

brothers' airplanes were slower than contemporary trains. It

wasn't until the development of metal, multi-engine airplanes decades

later that commercial aviation became viable. Progress was slow and

incremental until the advent of jet propulsion, developed during World

War II but not widely applied until the 1950s. While great progress has

been made in reliability, safety, and efficiency, today's jetliners fly

no faster than the Boeing 707 in 1957. Supersonic transport was shown

possible by the Concorde, but proved to be an economic dead end. By

comparison, in computing the beat has just gone on, year after year,

decade after decade (indeed, if you extend the plot backward to the

development of

Hollerith

machines and mechanical calculators in the 1800s, for more than a

century), with hardly a break. Technologies have come and gone:

electromechanical machines, vacuum tubes, discrete transistors, and

integrated circuits of ever-increasing density, but that straight line

on the semi-log plot just continues to climb toward the sky.

At every point in the last fifty years, there were many people who

predicted, often with detailed justification based in physics or

economics, why Moore's law would fail at some point in the future. And

so far, all of these forecasts have been proved to be wrong. As

David

Deutsch likes to say, “Problems are inevitable”, but

“Problems are soluble.” Engineers live to solve problems,

and so far they haven't encountered one they can't surmount. Everybody

who has bet against Moore's law so far has lost, and those of us who

have bet our careers on it have won. Some day, it will come to an end

but, so far, this is not that day.

What happens if it goes on for, say, at least another decade? Well,

that's interesting. It's what I've been calling “The Roaring

Twenties”. Just to be conservative, let's use the computing power

of my current laptop as the base, assume it's still the norm in 2020,

and extrapolate that over the next decade. If we assume the doubling

time for computing power and storage continues to be every two years,

then by 2030 your personal computer and handheld (or implanted) gadgets

will be 32 times faster with 32 times more memory than those you have

today.

So, imagine a personal computer which runs everything 32 times faster

and can effortlessly work on data sets 32 times larger than your

current machine. This is, by present-day standards, a supercomputer,

and you'll have it on your desktop or in your pocket. Such a computer

can, by pure brute force computational power (without breakthroughs in

algorithms or the fundamental understanding of problems) beat to death

a number of problems which people have traditionally assumed

“Only a human can….”. This means that a number of

these problems posted on the wall in the cartoon are going fall to the

floor some time in the Roaring Twenties. Self-driving cars will become

commonplace, and the rationale for owning your own vehicle will

decrease when you can summon transportation as a service any time you

need it and have it arrive wherever you are in minutes. Airliners will

be autonomous, supervised by human pilots responsible for eight or more

flights. Automatic language translation, including real-time audio

translation which people will inevitably call the

Babel

fish, will become reliable (at least among widely-used languages)

and commonplace. Question answering systems and machine learning based

expert systems will begin to displace the lower tier of professions

such as medicine and the law: automated clinics in consumer emporia

will demonstrate better diagnosis and referral to human specialists

than most general practitioners, and lawyers who make their living from

wills and conveyances will see their business dwindle.

So, imagine a personal computer which runs everything 32 times faster

and can effortlessly work on data sets 32 times larger than your

current machine. This is, by present-day standards, a supercomputer,

and you'll have it on your desktop or in your pocket. Such a computer

can, by pure brute force computational power (without breakthroughs in

algorithms or the fundamental understanding of problems) beat to death

a number of problems which people have traditionally assumed

“Only a human can….”. This means that a number of

these problems posted on the wall in the cartoon are going fall to the

floor some time in the Roaring Twenties. Self-driving cars will become

commonplace, and the rationale for owning your own vehicle will

decrease when you can summon transportation as a service any time you

need it and have it arrive wherever you are in minutes. Airliners will

be autonomous, supervised by human pilots responsible for eight or more

flights. Automatic language translation, including real-time audio

translation which people will inevitably call the

Babel

fish, will become reliable (at least among widely-used languages)

and commonplace. Question answering systems and machine learning based

expert systems will begin to displace the lower tier of professions

such as medicine and the law: automated clinics in consumer emporia

will demonstrate better diagnosis and referral to human specialists

than most general practitioners, and lawyers who make their living from

wills and conveyances will see their business dwindle.

The factor of 32 will also apply to supercomputers, which will begin to

approach the threshold of the computational power of the human brain.

This is a difficult-to-define and controversial issue since the brain's

electrochemical computation and digital circuits work so differently,

but toward the end of the 2020s, it may be possible, by pure

emulation

of scanned human brains, to re-instantiate them within a computer.

(My guess is that this probably won't happen until around 2050,

assuming Moore's law continues to hold, but you never know.) The advent

of artificial general intelligence, whether it happens due to clever

programmers inventing algorithms or slavish emulation of our

biologically-evolved brains, may be

our

final invention.

References and Further Reading

Photo and image credits:

- Photos of the Case Univac 1107 by John Walker are in the public domain.

- The photograph of the Quail Building at Case Institute of

Technology is © 1966 Bill Patterson, used by permission.

- Photo of a Dell laptop from the vendor's Web site, edited to

show Fourmilab logo on the screen.

- Photo of a Univac 1108 core memory plane from the Fourmilab Museum

by John Walker is in the public domain.

- Microprocessor transistor count drawing

by Wikipedia user

Wgsimon

used under the

Creative

Commons

Attribution-Share

Alike 3.0 Unported license.

- Photo of an Intel SDK-80 single-board computer from the Fourmilab

Museum by John Walker is in the public domain.

- Cartoon from Ray Kurzweil's

How

to Create a Mind, used under the doctrine of fair use in a

citation of the work.

As best as I can determine, it was around fifty years

ago, within a week or so, that I wrote my first computer program,

directing my life's trajectory onto the slippery slope which

ended in my present ignominy. This provides an opportunity for

reflection on how computing has changed in the past half century, which

is arguably a technological transition unprecedented in the human

experience. But more about that later. First, let me say a few words

about the computer on which I ran that program, the Univac 1107 at Case

Institute of Technology, in the fall of 1967.

As best as I can determine, it was around fifty years

ago, within a week or so, that I wrote my first computer program,

directing my life's trajectory onto the slippery slope which

ended in my present ignominy. This provides an opportunity for

reflection on how computing has changed in the past half century, which

is arguably a technological transition unprecedented in the human

experience. But more about that later. First, let me say a few words

about the computer on which I ran that program, the Univac 1107 at Case

Institute of Technology, in the fall of 1967.

The high speed printer

was derived directly from a printer used on the Univac I. Its hammers

were driven by a bank of

The high speed printer

was derived directly from a printer used on the Univac I. Its hammers

were driven by a bank of

I am writing this on a modest laptop whose processor runs at 3 GHz:

three billion instructions per second. Simply based upon the

clock rate, my current machine, fifty years after I ran my first

program, is twelve thousand times faster than the first computer I

used. But that isn't the whole story. The Univac 1107 had a single

processor, made of discrete transistors on circuit boards in a cabinet

the size of a kitchen. My laptop has eight processors, able to

all run in parallel, and if I manage to keep them all busy at the same

time, I have 96,000 times the computing power at my fingertips.

Further, the instructions are more powerful: the 1107 was limited to 36

bit integer and floating point computations: more precision required

costly software multiple precision, while my laptop has direct hardware

support for 64 bit quantities. This is probably worth another factor of

between two and four for applications such as graphics, multimedia, and

scientific computation.

I am writing this on a modest laptop whose processor runs at 3 GHz:

three billion instructions per second. Simply based upon the

clock rate, my current machine, fifty years after I ran my first

program, is twelve thousand times faster than the first computer I

used. But that isn't the whole story. The Univac 1107 had a single

processor, made of discrete transistors on circuit boards in a cabinet

the size of a kitchen. My laptop has eight processors, able to

all run in parallel, and if I manage to keep them all busy at the same

time, I have 96,000 times the computing power at my fingertips.

Further, the instructions are more powerful: the 1107 was limited to 36

bit integer and floating point computations: more precision required

costly software multiple precision, while my laptop has direct hardware

support for 64 bit quantities. This is probably worth another factor of

between two and four for applications such as graphics, multimedia, and

scientific computation.

So, imagine a personal computer which runs everything 32 times faster

and can effortlessly work on data sets 32 times larger than your

current machine. This is, by present-day standards, a supercomputer,

and you'll have it on your desktop or in your pocket. Such a computer

can, by pure brute force computational power (without breakthroughs in

algorithms or the fundamental understanding of problems) beat to death

a number of problems which people have traditionally assumed

“Only a human can….”. This means that a number of

these problems posted on the wall in the cartoon are going fall to the

floor some time in the Roaring Twenties. Self-driving cars will become

commonplace, and the rationale for owning your own vehicle will

decrease when you can summon transportation as a service any time you

need it and have it arrive wherever you are in minutes. Airliners will

be autonomous, supervised by human pilots responsible for eight or more

flights. Automatic language translation, including real-time audio

translation which people will inevitably call the

So, imagine a personal computer which runs everything 32 times faster

and can effortlessly work on data sets 32 times larger than your

current machine. This is, by present-day standards, a supercomputer,

and you'll have it on your desktop or in your pocket. Such a computer

can, by pure brute force computational power (without breakthroughs in

algorithms or the fundamental understanding of problems) beat to death

a number of problems which people have traditionally assumed

“Only a human can….”. This means that a number of

these problems posted on the wall in the cartoon are going fall to the

floor some time in the Roaring Twenties. Self-driving cars will become

commonplace, and the rationale for owning your own vehicle will

decrease when you can summon transportation as a service any time you

need it and have it arrive wherever you are in minutes. Airliners will

be autonomous, supervised by human pilots responsible for eight or more

flights. Automatic language translation, including real-time audio

translation which people will inevitably call the